Appendix A Optimization Methods

“Since the building of all the universe is perfect and is created by the wisdom creator, nothing arises in the universe in which one cannot see the sense of some maximum or minimum.”

– L. Euler

In this chapter, we give a brief introduction to some of the most basic but important optimization algorithms used in this book. The purpose is only to help the reader apply these algorithms to problems studied in this book, not to gain a deep understanding about these algorithms. Hence, we will not provide a thorough justification for the algorithms introduced, in terms of performance guarantees.

A.1 Steepest Descent

Optimization is concerned with the question of how to find where a function, say , reaches its minimum value. Mathematically, this is stated as a problem:

| (A.1.1) |

where represents a domain to which the argument is confined. Often (and unless otherwise mentioned, in this chapter) is simply . Without loss of generality, we assume that here the function is smooth111In case the function is not smooth, we replace its gradient with a so-called subgradient..

The efficiency of finding the (global) minima depends on what information we have about the function . For most optimization problems considered in this book, the dimension of , say , is very large. That makes computing or accessing local information about expensive. In particular, since the gradient has entries, it is often reasonable to compute; however, the Hessian has entries which is often wildly impractical to compute (and the same goes for higher-order derivatives). Hence, it is typical to assume that we have the zeroth-order information, i.e., we are able to evaluate , and the first-order information, i.e., we are able to evaluate . Optimization theorists may rephrase this as saying we have a “first-order oracle.” All optimization algorithms that we introduce in this section only use a first-order oracle.222We refer the readers to the book by [WM22] for a more systematic introduction to optimization algorithms in a high-dimensional space, including algorithms assuming higher-order oracles.

A.1.1 Vanilla Gradient Descent for Smooth Problems

The simplest and most widely used method for optimization is gradient descent (GD). It was first introduced by Cauchy in 1847. The idea is very simple: starting from an initial state, we iteratively take small steps such that each step reduces the value of the function .

Suppose that the current state is . We want to take a small step, say of distance , in a direction, indicated by a vector , to reach a new state such that the value of the function decreases:

| (A.1.2) |

To find such a direction , we can approximate through a Taylor expansion around :

| (A.1.3) |

where the inner product here (and in this chapter) will be the inner product, i.e., . To find the direction of steepest descent, we attempt to minimize this Taylor expansion among unit vectors . If , then the second term above is regardless of the value of , so we cannot attempt to make progress, i.e., the algorithm has converged. On the other hand, if then it holds

| (A.1.4) |

In words, this means that the value of decreases the fastest along the direction , for small enough . This leads to the gradient descent method: From the current state (), we take a step of size in the direction of the negative gradient to reach the next iterate,

| (A.1.5) |

The step size is also called the learning rate in machine learning contexts.

Step-Size Selection

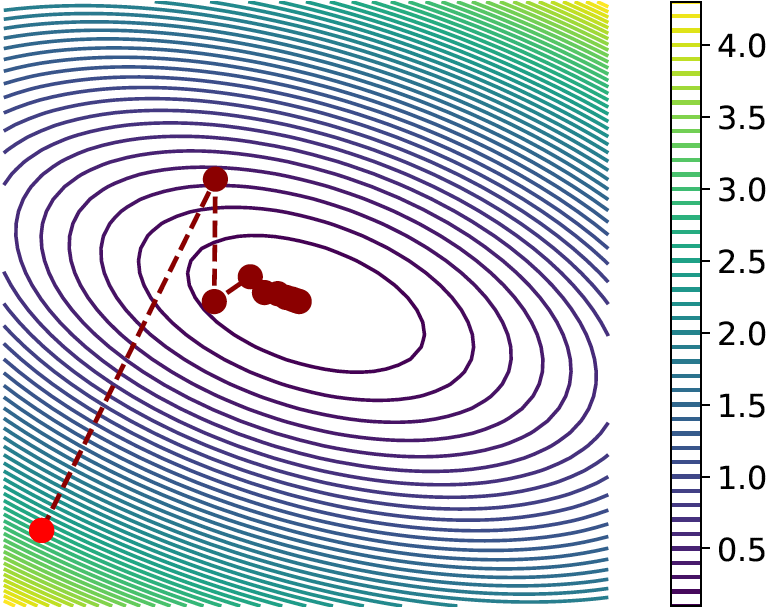

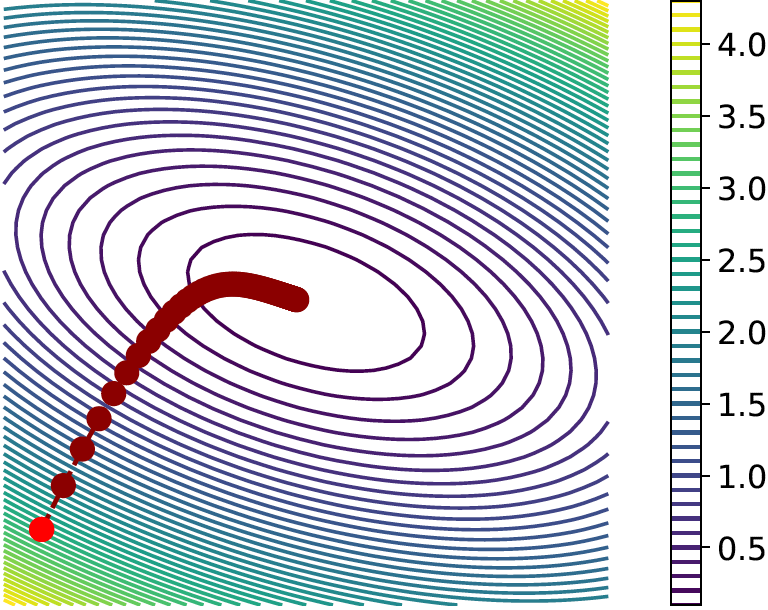

The remaining question is what the step size should be? If we choose to be too small, the value of the function may decrease very slowly, as shown by the plot in the middle in Figure A.1. If is too large, the value might not even decrease at all, as shown by the plot on the right in Figure A.1.

So the step size should be chosen based on the landscape of the function . Ideally, to choose the best step size , we can solve the following optimization problem over a single variable :

| (A.1.6) |

This method of choosing the step size is called line search; as hinted by the notation, it is usually used to obtain a optimal learning rate for each iteration . However, when the function is complicated, which is usually the case for training a deep neural network, this one-dimensional optimization is very difficult to solve at each iteration of gradient descent.

Then how should we choose a proper step size ? One common and classical approach is to try to obtain a good approximation of the local landscape around the current state based on some knowledge about the overall landscape of the function .

Common conditions for the landscape of include:

-

•

-Strong Convexity. Recall that is -strongly convex if its graph lies above a global quadratic lower bound of slope , i.e.,

(A.1.7) for any “base point” . We say that is convex if it is -strongly convex, i.e., its graph lies above its tangents. It is easy to show (proof as exercise) that strongly convex functions have unique global minima. Another important fact (proof as exercise) is that -strongly convex twice-differentiable functions have (symmetric) Hessians whose minimum eigenvalue is . For this implies the Hessian is symmetric positive definite, and for (i.e., is convex) this implies that the Hessian is symmetric positive semidefinite.

-

•

-Lipschitz Gradient (also called -Smoothness). Recall that has -Lipschitz gradient if exists and is -Lipschitz, i.e.,

(A.1.8) for any “base point” . It is easy to show (proof as exercise) that this is equivalent to having a global quadratic upper bound of slope , i.e.,

(A.1.9) for any “base point” . Another important fact (proof as exercise) is that convex -Lipschitz gradient twice-differentiable functions have (symmetric) Hessians whose largest eigenvalue is .

First, let us suppose that has -Lipschitz gradient (but is not necessarily even convex). We will use this occasion to introduce a common optimization theme: to minimize , we can minimize an upper bound on , which is justified by the following lemma visualized in Figure A.2.

Lemma A.1 (Majorization-Minimization).

Suppose that is a global upper bound on , namely for all . Suppose that they meet with equality at , i.e., . Then

| (A.1.10) |

We will use this lemma to show that we can use the Lipschitz gradient property to ensure that each gradient step cannot worsen the value of . Indeed, at every base point , we have that is a global upper bound on , and . Hence by Lemma A.1

| (A.1.11) |

This motivates us, when finding an update to obtain from , we can instead minimize the upper bound over and set that to be . By minimizing (proof as exercise) we get

| (A.1.12) |

This implies that a step size is a usable learning rate, but it does not provide a convergence rate or certify that actually converges to . This requires a little more rigor, which we now pursue.

Now, let us suppose that is -strongly convex, has -Lipschitz gradient, and has global optimum . We will show that will converge directly to the unique global optimum , which is a very strong form of convergence. In particular, we will bound using both strong convexity and Lipschitzness of the gradient of , i.e., taking a look at the neighborhood around :333In this proof the -Lipschitz Gradient invocation step is a little non-trivial. We also leave this step as an exercise, with the hint to plug in into the Lipschitz gradient identity.

| (A.1.13) | ||||

| (A.1.14) | ||||

| (A.1.15) | ||||

| (A.1.16) | ||||

| (A.1.17) | ||||

| (A.1.18) |

In order to ensure that the gradient descent iteration makes progress we must pick the step size so that , i.e., . If such a setting occurs, then

| (A.1.19) | ||||

| (A.1.20) |

In order to minimize the right-hand side, we can set , which obtains

| (A.1.21) |

showing convergence to global optimum with exponentially decaying error. Notice that here we used a convergence rate to obtain a favorable step size of . This motif will re-occur in this section.

We end this section with a caveat: learning a global optimum is (usually) impractically hard. Under certain conditions, we can ensure that the gradient descent iterates converge to a local optimum. Also, under more relaxed conditions, we can ensure local convergence, i.e., that the iterates converge to a (global or local) optimum if the sequence is initialized close enough to the optimum.

A.1.2 Preconditioned Gradient Descent for Badly-Conditioned Problems

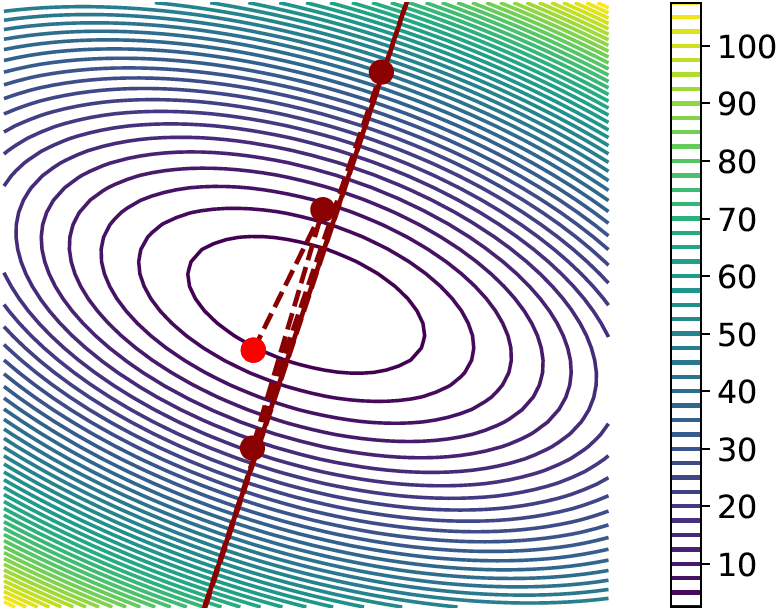

![Figure A.3 : The negative gradient − ∇ ℒ λ -\nabla\mathcal{L}_{\lambda} - ∇ caligraphic_L start_POSTSUBSCRIPT italic_λ end_POSTSUBSCRIPT and pre-conditioned (Newton’s method step) vector field − [ ∇ 2 ℒ λ ] − 1 [ ∇ ℒ λ ] -[\nabla^{2}\mathcal{L}_{\lambda}]^{-1}[\nabla\mathcal{L}_{\lambda}] - [ ∇ start_POSTSUPERSCRIPT 2 end_POSTSUPERSCRIPT caligraphic_L start_POSTSUBSCRIPT italic_λ end_POSTSUBSCRIPT ] start_POSTSUPERSCRIPT - 1 end_POSTSUPERSCRIPT [ ∇ caligraphic_L start_POSTSUBSCRIPT italic_λ end_POSTSUBSCRIPT ] where λ = 19 \lambda=19 italic_λ = 19 . There is a section of the space where following the negative gradient vector field makes very little progress towards finding the minimum, but in all cases following the Newton’s method vector field achieves equal speed of progress towards the optimum since the gradient is whitened. Since the Hessian here is diagonal, adaptive learning rate algorithms (e.g. Adam, as will be discussed later in the section) can make similar progress as Newton’s method, but a non-axis-aligned Hessian may even prevent Adam from succeeding quickly.](chapters/appendixA/figs/hessian_geometry.png)

Newton’s Method

There are some smooth problems and strongly convex problems on which gradient descent nonetheless does quite poorly. For example, let and let of the form

| (A.1.22) |

This problem is -strongly convex and has -Lipschitz gradient. The convergence rate is then geometric with rate . For large , this is still not very fast. In this section, we will introduce a class of optimization problems which can successfully optimize such badly-conditioned functions.

The key lies in the objective’s curvature, which is given by the Hessian. Suppose that (as a counterfactual) we had a second-order oracle which would allow us to compute , , and . Then, instead of picking a descent direction to optimize the first-order Taylor expansion around , we could optimize the second-order Taylor expansion instead. Intuitively this would allow us to incorporate curvature information into the update.

Let us carry out this computation. The second-order Taylor expansion of around is

| (A.1.23) |

Then we can compute the descent direction:

| (A.1.24) |

This optimization problem is a little difficult to solve because of the constraint . But in practice we never normalize the descent direction and use the step size to control the size of the update. So let us just solve the above problem over all vectors :444If is not invertible, then we can replace with the Moore-Penrose pseudoinverse of .

| (A.1.25) |

We can thus use the steepest descent iteration

| (A.1.26) |

(this is the celebrated Newton’s method), or

| (A.1.27) |

(which is called underdamped Newton’s method). Since the second-order quadratic is equal to its second-order Taylor expansion, if we run Newton’s method for one step, we will achieve the global minimum in one step no matter how large is. Figure A.3 gives some intuition about poorly conditioned functions and the gradient steps versus Newton’s steps.

PGD

In practice, we do not have a second-order oracle which allows us to compute . Instead, we can attempt to learn an approximation to it alongside the parameter update from .

How do we learn an approximation to it? We shall find some equations which the Hessian’s inverse satisfies and then try to update our approximation so that it satisfies the equations. Namely, taking the Taylor series of around point , we obtain

| (A.1.28) |

In this case we have

| (A.1.29) |

We can now try to learn a symmetric positive semidefinite pre-conditioner such that

| (A.1.30) |

updating it at each iteration along with . Namely, we have the PSGD iteration

| (A.1.31) | ||||

| (A.1.32) |

This update has two problems: how can we even use (since we already said we cannot store an matrix) and how can we update at each iteration? The answers are very related; we can never materialize in computer memory, but we can represent it using a low-rank factorization (or comparable methods such as Kronecker factorization which is particularly suited to the form of deep neural networks). Then the preconditioner update step is designed to exploit the structure of the preconditioner representation.

We end this subsection with a caveat: in deep learning, for example, is not a convex function and so Newton’s method (and approximations to it) do not make sense. In this case we look at the geometric intuition of Newton’s method on convex functions, say from Figure A.3: the inverse Hessian whitens the gradients. Thus instead of a Hessian-approximating preconditioner, we can adjust the above procedures to learn a more general whitening transformation for the gradient. This is the idea behind the original proposal of PSGD [Li17], which contains more information about how to store and update the preconditioner, and more modern optimizers like Muon [LSY+25].

A.1.3 Proximal Gradient Descent for Non-Smooth Problems

Even in very toy problems, however, such as LASSO or dictionary learning, the problem is not strongly convex but rather just convex, and the objective is no longer just smooth but rather the sum of a smooth function and a non-smooth regularizer (such as the norm). Such problems are solved by proximal optimization algorithms, which generalize steepest descent to non-smooth objectives.

Formally, let us say that

| (A.1.33) |

where is smooth, say with -Lipschitz gradient, and is non-smooth (i.e., rough). The proximal gradient algorithm generalizes the steepest descent algorithm, by using the majorization-minimization framework (i.e., Lemma A.1) with a different global upper bound. Namely, we construct such an upper bound by asking: what if we take the Lipschitz gradient upper bound of but leave alone? Namely, we have

| (A.1.34) |

Note that (proof as exercise)

| (A.1.35) |

Now if we try to minimize the upper bound , we are picking a that:

-

•

is close to the gradient update ;

-

•

has a small value of the regularizer

and trades off these properties according to the smoothness parameter . Accordingly, let us define the proximal operator

| (A.1.36) |

Then, we can define the proximal gradient descent iteration which, at each iteration, minimizes the upper bound , i.e.,

| (A.1.37) |

Convergence proofs are possible when , but we do not give any in this section.

One remaining question is: how can we compute the proximal operator? At first glance, it seems like we have traded one intractable minimization problem for another. Since we have not made any assumption on so far, the framework works even when is a very complex function (such as a neural network loss), which would require us to solve a neural network training problem in order to compute a single proximal operator. However, in practice, for simple regularizers such as those we use in this manuscript, there exist proximal operators which are easy to compute or even in closed-form. We give a few below (the proofs are an exercise).

Example A.1.

Let be a set, and let be the characteristic function on , i.e.,

| (A.1.38) |

Then the proximal operator of is a projection, i.e.,

| (A.1.39) |

Example A.2.

The norm has a proximal operator which performs soft thresholding:

| (A.1.40) |

then is defined coordinate-wise by

| (A.1.41) |

The proximal gradient operation with the smooth part of the objective being least-squares and the non-smooth part being the norm (hence using this soft thresholding proximal operator) is called the Iterative Shrinkage-Thresholding Algorithm (ISTA).

A.1.4 Stochastic Gradient Descent for Large-Scale Problems

In deep learning, the objective function usually cannot be computed exactly, and instead at each optimization step it is estimated using finite samples (say, using a mini-batch). A common way to model this situation is to define a stochastic loss function where is some “source of randomness”. For example, could contain the indices of the samples in a batch over which to compute the loss. Then, we would like to minimize over , given access to a stochastic first-order oracle: given , we can sample and compute and . This minimization problem is called a stochastic optimization problem.

The basic first-order stochastic algorithm is stochastic gradient descent: at each iteration we sample , define , and perform a gradient step on , i.e.,

| (A.1.44) |

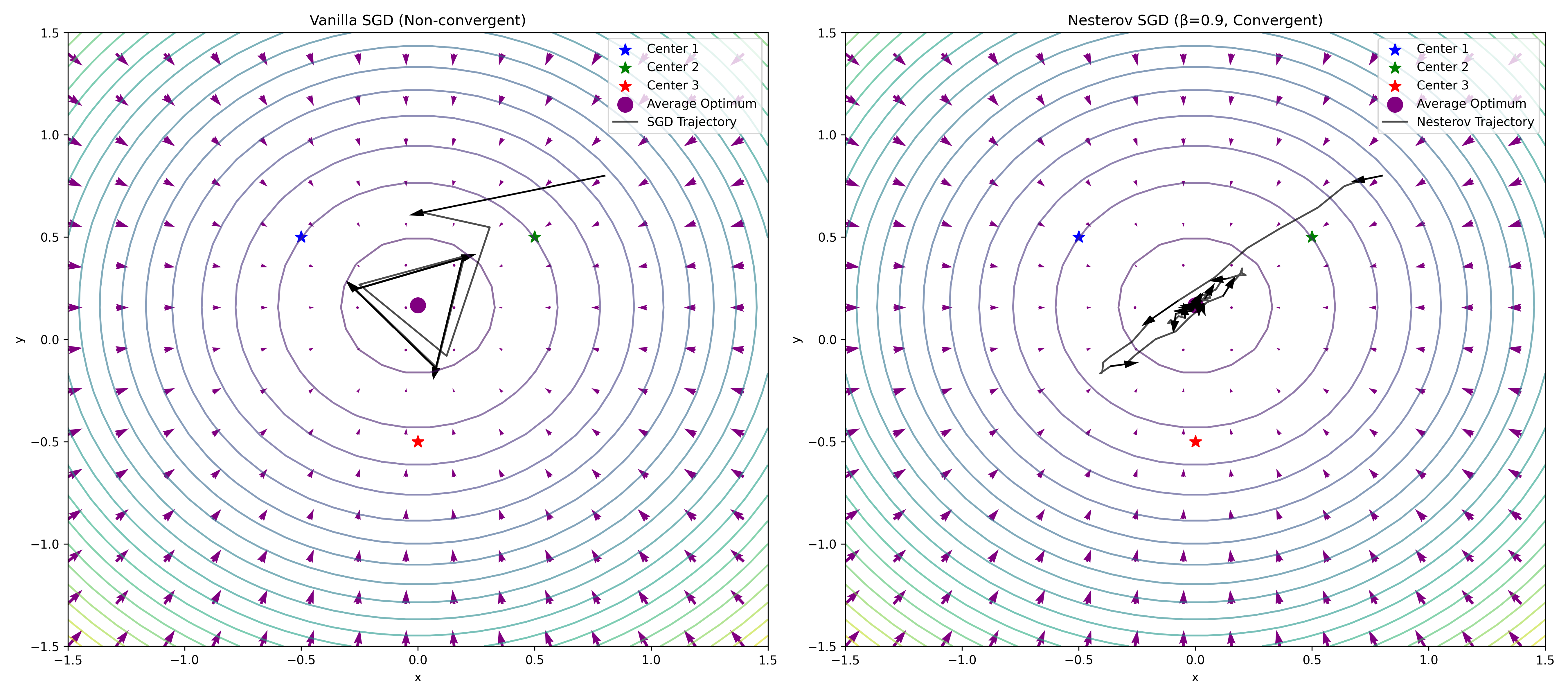

However, even for very simple problems we cannot expect the same type of convergence as we obtained in gradient descent. For example, suppose that there are possible values for which it takes with equal probability, and there are possible targets , such that the loss function is

| (A.1.45) |

Then , but stochastic gradient descent can “pinball” around the global optimum value, and not converge, as visualized in Figure A.4.

In order to fix this, we can either average the parameters or average the gradients over time. If we average the parameters , then (using Figure A.4 as a mental model) the issue of pinballing is straightforwardly not possible, since the average iterate will grow closer to the center. As such, most theoretical convergence proofs consider the convergence of the average iterate to the global minimum. If we average the gradients, we will eventually learn an average gradient which does not change much at each iteration and therefore does not pinball.

In practice, instead of using an arithmetic average, we take an exponentially moving average (EMA) of the parameters (this is called Polyak momentum) or of the gradients (this is called Nesterov momentum). Nesterov momentum is more popular and we will study it here.

A stochastic gradient descent iteration with Nesterov momentum is as follows:

| (A.1.46) | ||||

| (A.1.47) |

We do not go through a convergence proof (see Chapter 7 of [GG23] for an example). However, Nesterov momentum handles our toy case in Figure A.4 easily (see the right-hand figure): it stops pinballing and eventually converges to the global optimum.

We end with a caveat: one can show that Polyak momentum and Nesterov momentum are equivalent, for certain choices of parameter settings. Then it is also possible to show that a decaying learning rate schedule (i.e., the learning rate depends on the iteration , and its limit is as ) with plain SGD (or PSGD) can mimic the effect of momentum. Namely, [DCM+23] shows that if the SGD algorithm lasts iterations, the gradient norms are bounded , and we define , then plain SGD iterates satisfy the rate — but only so long as the learning rate decays linearly with time. This matches learning rate schedules used in practice. Indeed, surprisingly, such a theory of convex optimization can predict many empirical phenomena in deep networks [SHT+25], despite deep learning optimization being highly non-convex and non-smooth in the worst case. It is so far unclear why this is the case.

A.1.5 Putting Everything Together: Adam

The gradient descent scheme proposes an iteration of the form

| (A.1.48) |

where (recall) is chosen to be (proportional to) the steepest descent vector in the Euclidean norm:

| (A.1.49) |

However, in the context of deep learning optimization, there is absolutely nothing which implies that we have to use the Euclidean norm; indeed the “natural geometry” of the space of parameters is not well-respected by the Euclidean norm, since small changes in the parameter space can lead to very large differences in the output space, for a particular fixed input to the network. If we were instead to use a generic norm on the parameter space , we would get some other quantity corresponding to the so-called dual norm:

| (A.1.50) |

For instance, if we were to use the -norm, it is possible to show that

| (A.1.51) |

where . Thus if we were so-inclined, we could use the so-called sign-gradient descent:

| (A.1.52) |

From sign-gradient descent, we can derive the famous Adam optimization algorithm. Note that for a scalar we can write

| (A.1.53) |

Similarly, for a vector we write (where and are element-wise multiplication and division)

| (A.1.54) |

Using this representation we can write (A.1.52) as

| (A.1.55) |

Now consider the stochastic regime where we are optimizing a different loss at each iteration. In SGD, we “tracked” (i.e., took an average of) the gradients using Nesterov momentum. Here, we can track both the gradient and the squared gradient using momentum, i.e.,

| (A.1.56) | ||||

| (A.1.57) | ||||

| (A.1.58) |

where are the momentum parameters. The algorithm presented by this iteration is the celebrated Adam optimizer,555In order to avoid division-by-zero errors, we divide by where is small, say on the order of . which is the most-used optimizer in deep learning. While convergence proofs of Adam are more involved, it falls out of the same steepest descent principle we used so far, and so we should expect that given a small enough learning rate, each update should improve the loss.

Another way to view Adam, which partially explains its empirical success, is that it dynamically updates the learning rates for each parameter based on the squared gradients. In particular, notice that we can write

| (A.1.59) |

where is the parameter-wise adaptively set learning rate. This scheme is called adaptive because if the gradient of a particular parameter is large up to iteration , then the learning rate for this parameter becomes smaller to compensate, and vice versa, as can be seen from the above equation.

A.2 Computing Gradients via Automatic Differentiation

Above, we discussed several optimization algorithms for deep networks which assumed access to a first-order oracle, i.e., a device which would allow us to compute and . For simple functions , it is possible to do this by hand. However, for deep neural networks, this quickly becomes tedious, and hinders rapid experimentation. Hence we require a general algorithm which would allow us to efficiently compute the gradients of arbitrary (sub)differentiable network architectures.

In this section, we introduce the basics of automatic differentiation (AD or autodiff), which is a computationally efficient way to compute gradients and Jacobians of general functions . We will show how this leads to the backpropagation algorithm for computing gradients of loss functions involving neural networks. A summary of the structure of this section is as follows:

-

1.

We introduce differentials, a convenient formalism for calculating and organizing the derivatives of functions between high-dimensional parameter spaces (which may themselves be products of other spaces involving matrices, tensors, etc.).

-

2.

We describe the basics of forward-mode and reverse-mode automatic differentiation, which involves considerations that are important for efficient computation of gradients/Jacobians for different kinds of functions arising in machine learning applications.

-

3.

We describe backpropagation in the special case of a loss function applied to a stack-of-layers neural network as an instantiation of reverse-mode automatic differentiation.

Our treatment will err on the mathematical side, to give the reader a deep understanding of the underlying mathematics. The reader should ensure to couple this understanding with a strong grasp of practical aspects of automatic differentiation for deep learning, for example as manifested in the outstanding tutorial of [Kar22a].

A.2.1 Differentials

A full accounting of this subsection is given in the excellent guide [BEJ25]. To motivate differentials, let us first consider the simple example of a differentiable function acting on a parameter . We can write

| (A.2.1) |

If we take and , we can write

| (A.2.2) |

We will (non-rigorously) define and , i.e., the differentials of and , to be infinitesimally small changes in and . Think of them as what one gets when (and therefore ) are extremely small. The goal of differential calculus, in some sense, is to study the relationships between the differentials and , namely, seeing how small changes in the input of a function change the output. We should note that the differential is the same shape as , and the differential is the same shape as . In particular, we can write

| (A.2.3) |

whereby we have that all higher powers of , such as , are .

Let’s see how this works for a higher dimensions, i.e., . Then we still have

| (A.2.4) |

for some notion of a derivative . Since (hence ) is a column vector here and (hence ) is a scalar, we must have that is a row vector. In this case, is the Jacobian of w.r.t. . Here notice that we have set all higher powers and products of coordinates of to . In sum,

All products and powers of differentials are equal to .

Now consider a higher-order tensor function . Then our basic linearization equation is insufficient for this case: does not make sense since is an matrix but is a matrix, so there is no possible vector or matrix shape for that works in general (as no matrix can multiply a matrix to form a matrix unless ). So we must have a slightly more advanced interpretation.

Namely, we consider as a linear transformation whose input is -space and whose output is -space, which takes in a small change in and outputs the corresponding small change in . Namely, we can write

| (A.2.5) |

In the previous cases, was first a linear operator whose action was to multiply its input by the scalar derivative of with respect to , and then a linear operator whose action was to multiply its input by the Jacobian derivative of with respect to . In general is the “derivative” of w.r.t. . Think of as a generalized version of the Jacobian of . As such, it follows some simple derivative rules, most crucially the chain rule.

Theorem A.1 (Differential Chain Rule).

Suppose where and are differentiable. Then

| (A.2.6) |

where (as usual) multiplication indicates composition of linear operators. In particular,

| (A.2.7) |

in the sense of equality of linear operators.

It is productive to think of the multivariate chain rule in functorial terms: composition of functions gets ‘turned into’ matrix multiplication of Jacobians (composition of linear operators!). We illustrate the power of this result and this perspective through several examples.

Example A.4.

Consider the function . Then

| (A.2.8) |

Thus the derivative of an affine function w.r.t. its input is

| (A.2.9) |

Notice that is constant. On the other hand, consider the function . Then

| (A.2.10) | ||||

| (A.2.11) |

Notice that this derivative is constant in (which makes sense since itself is linear) and linear in the differential inputs .

Example A.5.

Consider the function where are differentiable functions whose outputs can multiply together. Then where and . Applying the chain rule we have

| (A.2.12) |

To compute we can compute

| (A.2.13) |

To compute we can compute

| (A.2.14) | ||||

| (A.2.15) |

where (recall) the product of the differentials and is set to . Therefore

| (A.2.16) |

Putting these together, we find

| (A.2.17) | ||||

| (A.2.18) |

This gives

| (A.2.19) |

If for example we say that then everything commutes so

| (A.2.20) |

which is the familiar product rule.

Example A.6.

Consider the function where is a matrix and is a constant matrix. Then, letting where and , we can use the product rule to obtain

| (A.2.21) | ||||

| (A.2.22) |

Example A.7.

Consider the function given by

| (A.2.23) |

We cannot write a (matrix-valued) Jacobian or gradient for this function. But we can compute its differential just fine:

| (A.2.24) |

So

| (A.2.25) |

which represents a higher-order tensor multiplication operation that is nonetheless well-defined.

This gives us all the technology we need to compute differentials of everything. The last thing we cover in this section is a method to compute gradients using the differential. Namely, for a function whose output is a scalar, the gradient is defined as

| (A.2.26) |

where the inner product here is the “standard” inner product for the specified objects (i.e., for vectors it’s the inner product, whereas for matrices it’s the Frobenius inner product, and for higher-order tensors it’s the analogous sum-of-coordinates inner product). This definition is the correct generalization of the ‘familiar’ example of the gradient of a function from to as the vector of partial derivatives—a version of Taylor’s theorem for general functions makes this connection rigorous. So one way to compute the gradient is to compute the differential and rewrite it in the form , then that “something” is the gradient.

A.2.2 Automatic Differentiation

The main idea of AD is to compute the chain rule efficiently. The basic problem we need to cope with is the following. In the optimization section of the appendix, we considered that the parameter space was an abstract Euclidean space like . In practice the parameters are really some collection of vectors, matrices, and higher-order objects: . While in theory this is the same thing as a large parameter space for some (very large) , computationally efficient algorithms for differentiation must treat these two spaces differently. Forward and reverse mode automatic differentiation are two different schemes for performing this computation.

Let us do a simple example to start. Let be defined by where are differentiable. Then the chain rule gives

| (A.2.27) |

To compute , we first compute then then , and store them all. There are two ways to compute . The forward-mode AD will compute

| (A.2.28) |

i.e., computing the derivatives “from the bottom-up”. The reverse mode AD will compute

| (A.2.29) |

i.e., computing the derivatives “from the top down”. To see why this matters, suppose that is given by where , , . Then the chain rule is:

| (A.2.30) |

where (recall) is the derivative, in this case the Jacobian (since the input and output of each function are both vectors). Assuming that computing each Jacobian is trivial and the only cost is multiplying the Jacobians together, forward-mode AD has the following computational costs (assuming that multiplying takes time):

| (A.2.31) | |||

| (A.2.32) | |||

| (A.2.33) |

Meanwhile, doing reverse-mode AD has the following computational costs:

| (A.2.34) | |||

| (A.2.35) | |||

| (A.2.36) |

In other words, the forward-mode AD takes time, and the reverse-mode AD takes time. These take a different amount of time!

More generally, suppose that where each , so that . Then the forward-mode AD takes time while the reverse-mode AD takes time. From the above rates, we see that all else equal:

-

•

If the function to optimize has more outputs than inputs (i.e., ), use forward-mode AD.

-

•

If the function to optimize has more inputs than outputs (i.e., ), use reverse-mode AD.

In a neural network, we compute the gradient of a loss function , where the parameter space is usually very high-dimensional. So in practice we always use reverse-mode AD for training neural networks. Reverse-mode AD, in the context of training neural networks, is called backpropagation.

A.2.3 Back Propagation

In this section, we will discuss algorithmic backpropagation using a simple yet completely practical example. Suppose that we fix an input-label pair , and fix a network architecture where and task-specific head , and write

| (A.2.37) | |||

| (A.2.38) | |||

| (A.2.39) |

Then, we can define the loss on this one term by

| (A.2.40) |

where is a differentiable function of its second argument. Then the backward-mode AD instructs us to compute the derivatives in the order .

To carry out this computation, let us make some changes to the notation.

-

•

First, let us change the notation to emphasize the dependency structure between the variables. Namely,

(A.2.41) (A.2.42) (A.2.43) (A.2.44) -

•

Then, instead of having the derivative be , we explicitly notate the independent variable and write the derivative as , for example. This is because there are many variables in our model and we only care about one at a time.

We can start by computing the appropriate differentials. First, for we have

| (A.2.45) | ||||

| (A.2.46) | ||||

| (A.2.47) | ||||

| (A.2.48) |

Now since does not depend on , we have , so in the end it holds (using the fact that the gradient is the transpose of the derivative for a function whose codomain is ):

| (A.2.49) | ||||

| (A.2.50) | ||||

| (A.2.51) | ||||

| (A.2.52) | ||||

| (A.2.53) |

Thus to compute , we compute the gradient and the adjoint666The adjoint is like a generalized transpose for more general linear transformations. Particularly, for a given pair of inner product spaces and linear transformation between those spaces, the adjoint is defined by the identity . In finite dimensions (i.e., all cases relevant to this book) the adjoint exists and is unique. of the derivative and multiply (i.e., apply the adjoint linear transformation to the gradient). In practice, both derivatives can be computed by hand, but many modern computational frameworks can automatically define the derivatives (and/or their adjoints) given code for the “forward pass,” i.e., the loss function computation. While extending this automatic derivative definition to as many functions as possible is an area of active research, the resource [BEJ25] describes one basic approach to do it in some detail. By the way, backpropagation is also called the adjoint method for this reason — i.e., that we use adjoints derivatives to compute the gradient.

Now let us compute the differentials w.r.t. some :

| (A.2.54) | ||||

| (A.2.55) | ||||

| (A.2.56) | ||||

| (A.2.57) | ||||

| (A.2.58) | ||||

| (A.2.59) | ||||

| (A.2.60) | ||||

| (A.2.61) |

Thus to compute we compute then apply the adjoint derivative to it. Since depends on , we also want to be able to compute the gradients w.r.t. . This can be computed in the exact same way:

| (A.2.62) | ||||

| (A.2.63) | ||||

| (A.2.64) | ||||

| (A.2.65) | ||||

| (A.2.66) | ||||

| (A.2.67) |

Thus to compute we compute then apply the adjoint derivative to it. So we have a recursion to compute for all , with base case , which is given by

| (A.2.68) | ||||

| (A.2.69) | ||||

| (A.2.70) | ||||

| (A.2.71) | ||||

| (A.2.72) | ||||

| (A.2.73) | ||||

| (A.2.74) |

Thus we have the recursion:

| (A.2.75) | ||||

| (A.2.76) | ||||

| (A.2.77) | ||||

| (A.2.78) | ||||

| (A.2.79) | ||||

| (A.2.80) | ||||

| (A.2.81) |

This gives us a computationally efficient algorithm to find all gradients in the whole network.

We’ll finish this section by computing the adjoint derivative for a simple layer.

Example A.8.

Consider the “linear” (affine) layer

| (A.2.82) |

We can compute the differential w.r.t. both parameters as

| (A.2.83) | ||||

| (A.2.84) |

Thus the derivative of this transformation is

| (A.2.85) |

namely, representing the following linear transformation from to :

| (A.2.86) |

We calculate the adjoint w.r.t. the sum-over-coordinates (Frobenius) inner product by the following procedure:

| (A.2.87) | ||||

| (A.2.88) | ||||

| (A.2.89) | ||||

| (A.2.90) | ||||

| (A.2.91) | ||||

| (A.2.92) |

So .

Note that as a simple application of chain rule, both backpropagation and automatic differentiation work over general “computational graphs”, i.e., compositions of (simple) functions. We give all examples as neural network layers because this is the most common example in practice.

A.3 Game Theory and Minimax Optimization

In certain cases, such as in Chapter 5, a learning problem cannot be reduced to a single optimization problem but rather represents multiple potentially opposing components of the system try to each minimize their own objective. Examples of this paradigm include distribution learning via generative adversarial networks (GAN) and closed-loop transcription (CTRL). We will denote such a system as a two-player game, where we have two “players” (i.e., components) called Player 1 and Player 2 trying to minimize their objectives and respectively. Player 1 picks parameters and Player 2 picks parameters . In this book we consider the special case of zero-sum games, i.e., defining a common objective such that .

Our first, very preliminary example is as follows. Suppose that there exists functions and such that

| (A.3.1) |

Then both players’ objectives are independent of the other player, and the players should try to achieve their respective optima:

| (A.3.2) |

The pair is a straightforward special case of an equilibrium: a situation where neither player will want to move, given the chance, since moving will end up making their own situation worse. However, not all games are so trivial; many have more complicated objectives and information structures.

In this book, the relevant game-theoretic formalism is a Stackelberg game (variously called sequential game). In this formalism, one player (without loss of generality Player 1, and also described as a leader) picks their parameters before the other (i.e., Player 2, also described as a follower), and the follower can use the full knowledge of the leader’s choice to make their own choice. The correct notion of equilibrium for a Stackelberg game is a Stackelberg equilibrium. To explain this equilibrium, note that since Player 2 (i.e., the follower) can choose reactively to the choice made by Player 1 (i.e., the leader), Player 2 would of course choose the which minimizes . But of course a rational Player 1 would realize this, and so pick a such that the worst-case picked by Player 2 according to this rule is not too bad. More formally, let be the set of minimizing , i.e., the set of all which Player 2 is liable to play given that Player 1 has played . Then is a Stackelberg equilibrium if

| (A.3.3) |

Actually (proof as exercise), one can show that in the context of two-player zero-sum Stackelberg games, is a Stackelberg equilibrium if and only if

| (A.3.4) |

(note that the notation is not used nor needed).

Proof.

Note that

| (A.3.5) |

so it holds

| (A.3.6) |

∎

In the rest of the section, we will briefly discuss some algorithmic approaches to learn Stackelberg equilibria. The intuition you should have is that learning an equilibrium is like letting the different parts of the system automatically figure out tradeoffs between the different objectives they want to optimize.

We end this section with a caveat: in two-player zero-sum games, if it holds that

| (A.3.7) |

then every Stackelberg equilibrium is a saddle point,777Famously called a Nash equilibrium. i.e.,

| (A.3.8) |

and vice versa, and furthermore each Stackelberg equilibrium has the (same) objective value

Proof.

Suppose that indeed

| (A.3.9) |

First suppose that is a saddle point. We will show it is a Stackelberg equilibrium. By definition we have

| (A.3.10) |

It then holds for any and that

| (A.3.11) |

Therefore

| (A.3.12) |

Completely symmetrically,

| (A.3.13) |

Therefore since we have

| (A.3.14) |

In particular, it holds that

| (A.3.15) |

From the saddle point condition we have . So is a Stackelberg equilibrium. Furthermore we have also proved that all saddle points obey (A.3.14).

Now let be a Stackelberg equilibrium. We claim that it is a saddle point, which completes the proof. By the definition of minimax equilibrium,

| (A.3.16) |

Then by the assumption we have

| (A.3.17) |

This proves that all minimax equilibria have the desired objective value . Now we want to show that

| (A.3.18) |

Indeed the latter assertion holds by definition of the minimax equilibrium, so only the former need be proved. Namely, we will show that

| (A.3.19) |

To show this note that by definition of the

| (A.3.20) |

meanwhile we have so

| (A.3.21) |

Therefore it holds

| (A.3.22) |

and the proof is complete. ∎

Conditions under which the equality holds are given by so-called minimax theorems; the most famous of these is a theorem of von Neumann. However, in the cases we think about, this property usually does not hold.

A.3.1 Learning Stackelberg Equilibria

How can we learn Stackelberg equilibria via GDA? In general this is clearly impossible, since learning Stackelberg equilibria via GDA is obviously at least as hard as computing a global minimizer of a loss function (say by setting the shared objective to only be a function of ). As such, we can achieve two types of convergence guarantees:

-

•

When is (strongly) concave in the first argument and (strongly) convex in the second argument (as well as having Lipschitz gradients in both arguments), we can achieve exponentially fast convergence to a Stackelberg equilibrium.

-

•

When is not concave or convex in either argument, we can achieve local convergence guarantees: namely, if we initialize the parameter values near a (local) Stackelberg equilibrium and the optimization geometry is good then we can learn that equilibrium efficiently.

The former situation is exactly analogous to the case of single-player optimization, where we proved that gradient descent converges exponentially fast for strongly convex objectives which have Lipschitz gradient. The latter situation is also analogous to the case of single-player optimization, although we did not cover it in depth due to technical difficulty; indeed there exist local convergence guarantees for nonconvex objectives which have locally nice geometry.

The algorithm in these two cases is the same algorithm, called Gradient Descent-Ascent (GDA). To motivate GDA, suppose we are trying to learn . We could do gradient ascent on the function . But then we would need to take the derivative in of this function. To see how to do this, suppose that is strongly convex in so that there is one minimizer of . Then, Danskin’s theorem says that

| (A.3.23) |

where the gradient is only with respect to the first argument (i.e., not a total derivative which would require computing the Jacobian of with respect to ), indicated by the stop-gradient operator.888In the case that is not strongly convex in but rather just convex, Danskin’s theorem can be stated in terms of subdifferentials. If is not convex at all in , then this derivative may not be well-defined, but one can obtain (local) convergence guarantees for the resulting algorithm anyways. Hence we use Danskin’s theorem as a motivation and not a justification for our algorithms. Danskin’s thoerems can be generalized into more relaxed circumstances by the so-called envelope theorems. In order to take the derivative in , we need to set up a secondary process to also optimize to obtain an approximation for . We can do this through the following algorithmic template:

| (A.3.24) | |||

| (A.3.25) |

That is, we take steps of gradient descent to update (hopefully to the minimizer of ), and then take a gradient ascent step to update . As a bonus, on top of estimating , we also learn an — this is an approximate Stackelberg equilibrium.

This method is often not done in practice, as it requires total gradient descent iterations to update once. Instead, we use the so-called (simultaneous) Gradient Descent-Ascent (GDA) iteration

| (A.3.26) | ||||

| (A.3.27) |

which can be implemented efficiently via a single gradient step on . The crucial idea here is, to make our method close to the inefficient iteration above, we use an update which is times faster than the update (these can be seen as nearly the same by taking a linearization of the dynamics).

It is crucial to pick sensibly. How can we do that? In the sequel, we discuss two configurations of which lead to convergence of GDA to a Stackelberg equilibrium under different assumptions.

Convergence of One-Timescale GDA to Stackelberg Equilibrium

If (i.e., named one-timescale because both and updates are of the same scale), then the GDA algorithm becomes

| (A.3.28) |

If has Lipschitz gradients in and , and is strongly concave in and strongly convex in , and is coercive (i.e.,), then all saddle points are Stackelberg equilibria and vice versa. The work [ZAK24] shows that GDA (again, with ) with sufficiently small step size converges to a saddle point (hence Stackelberg equilibrium) exponentially fast if one exists, analogously to gradient descent for strongly convex functions with Lipschitz gradient.999We do not provide the step size or exponential base since they are complicated functions of the strong convexity/concavity/Lipschitz constant of the gradient. Of course, the paper [ZAK24] provides the precise parameter values. To our knowledge, this flavor of results constitute the only known rigorous justification for single-timescale GDA.

Local Convergence of Two-Timescale GDA to Stackelberg Equilibrium

Strong convexity/concavity is a global property, and none of the games we look into in this book have objectives which are globally strongly concave/strongly convex. In this case, the best we can hope for is local convergence to Stackelberg equilibria: if the parameters are initialized close to a Stackelberg equilibrium, then GDA can converge onto it, given an appropriate step size and timescale .

In fact, our results also hold for a version of the local Stackelberg equilibrium called the differential Stackelberg equilibrium, which was introduced in [FCR19] (though we use the precise definition in [LFD+22]), and which we define as follows. A point is a differential Stackelberg equilibrium if:

-

•

;

-

•

is symmetric positive definite;

-

•

;

-

•

is symmetric negative definite.

Notice that the last condition asks for the (total) Hessian

| (A.3.29) |

or, equivalently, its Schur complement to be negative definite. If we look at the computation of furnished by Danskin’s theorem, the last two criteria are actually constraints on the gradient and Hessian of the function , ensuring that the gradient is and the Hessian is negative semidefinite. This intuition tells us that we can expect that each Stackelberg equilibrium is a differential Stackelberg equilibrium; [FCR20] confirms this rigorously (up to some technical conditions).

Analogously to the notion of strict local optimum in single-player optimization (where we require to be positive semidefinite), the definition of differential Stackelberg equilibrium implies that is locally (strictly) convex in a neighborhood of the equilibrium, and that is locally (strictly) concave in the same region.

In this context, we present the result from [LFD+22]. Let be a differential Stackelberg equilibrium. Suppose that has Lipschitz gradients, i.e.,

| (A.3.30) |

Further define the local strong convexity/concavity parameters of and respectively as

| (A.3.31) |

Then define the local condition numbers as

| (A.3.32) |

The paper [LFD+22] says that if we take step size and take , so that the algorithm is

| (A.3.33) | ||||

| (A.3.34) |

the total Hessian is diagonalizable, and we initialize close enough to , then there are positive constants such that

| (A.3.35) |

implying exponential convergence to the differential Stackelberg equilibrium.

A.3.2 Practical Considerations when Learning Stackelberg Equilibria

In practice, we do not know how to initialize parameters close to a (differential) Stackelberg equilibrium. Due to symmetries within the objective, including those induced by overparameterization of the neural networks being trained, one can (heuristically) expect that most initializations are close to a Stackelberg equilibrium. Also, we do not know how to compute the step size or the timescale , since they are dependent on properties of the loss at the equilibrium. In practice, there are some common approaches:

-

•

Take (equal step-sizes), and use updates for and that are derived from a learning-rate-adaptive optimizer like Adam (as opposed to vanilla GD). Here, you hope (but do not know) that the optimizer can adjust the learning rates to learn a good equilibrium.

-

•

Take to be some constant like which implies that equilibrates times as fast as . Here you can also use Adam-style updates, and hope that it fixes the time scale.

-

•

Let depend on the iteration , and let as . This schedule was studied (also in the case of noise) by Borkar in [Bor97].

For example, you can use this while training CTRL-style models (see Chapter 5), where the encoder is Player and the decoder is Player . Some theory about CTRL is given in Theorem 5.1.

A.4 Exercises

Exercise A.1.

We have shown that for a smooth function , gradient descent converges linearly to the global optimum if it is strongly convex. However, in general nonconvex optimization, we do not have convexity, let alone strong convexity. Fortunately, in some cases, satisfies the so-called -Polyak-Lojasiewicz (PL) inequality, i.e., there exists a constant such that for all ,

where is a minimizer of .

Please show that under the PL inequality and the assumption that is -smooth, gradient descent (A.1.12) converges linearly to .

Exercise A.2.

Compute the differential and adjoint derivative of the softmax function, defined as follows.

| (A.4.1) |

Exercise A.3.

Carry through the backpropagation computation for a -layer MLP, as defined in Section 7.2.3.

Exercise A.4.

Carry through the backpropagation computation for a -layer transformer, as defined in Section 7.2.3.

Exercise A.5.

Carry through the backpropagation computation for an autoencoder with encoder layers and decoder layers (without necessarily specifying an architecture).