Chapter 3 Pursuing Low-Dimensionality via Lossy Compression

“We compress to learn, and we learn to compress.”

— High-dimensional Data Analysis, Wright and Ma, 2022

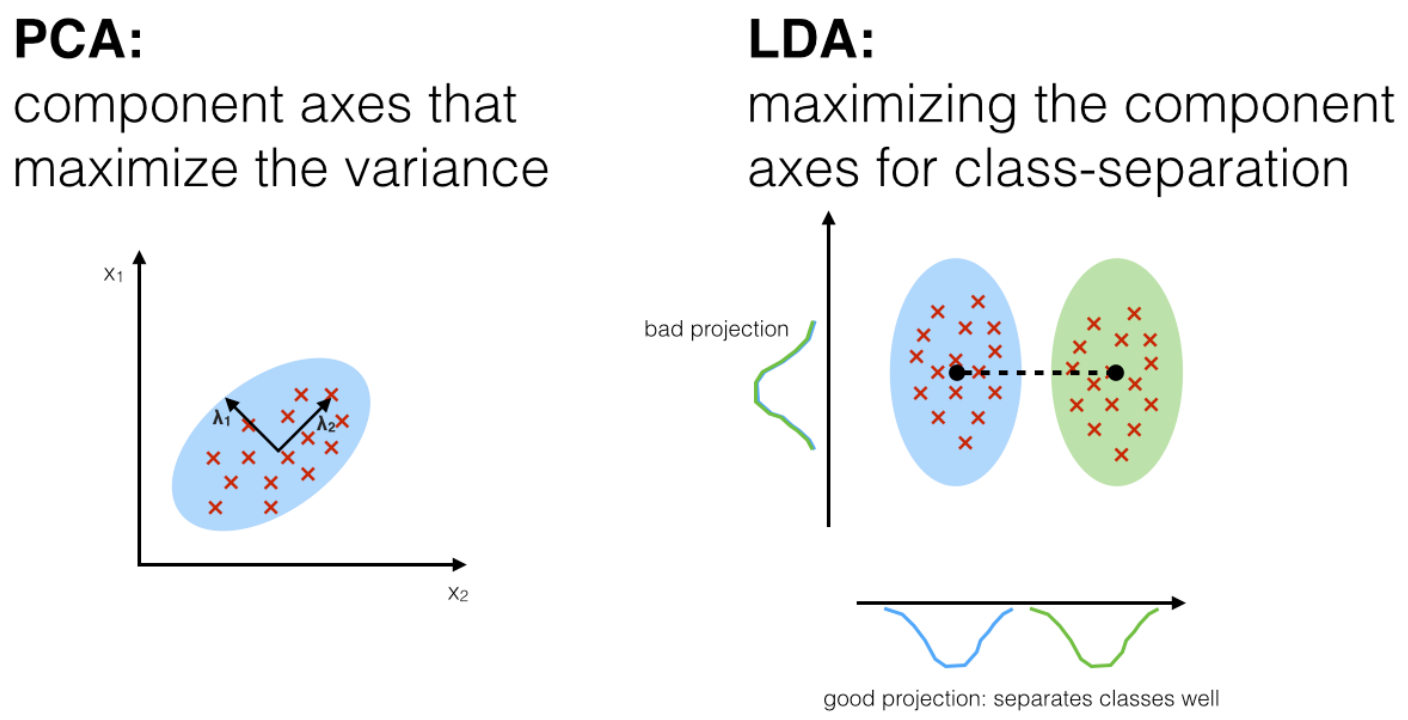

In Chapter 2, we have shown how to learn simple classes of distributions whose supports are assumed to be either a single or a mixture of low-dimensional subspaces or low-rank Gaussians. For further simplicity, the different (hidden) linear or Gaussian modes are assumed to be orthogonal or independent111Or can be easily reduced to such idealistic cases., as illustrated in Figure 2.4. As we have shown, for such special distributions, one can derive rather simple and effective learning algorithms with correctness and efficiency guarantees. The geometric and statistical interpretation of operations in the associated algorithms is also very clear.

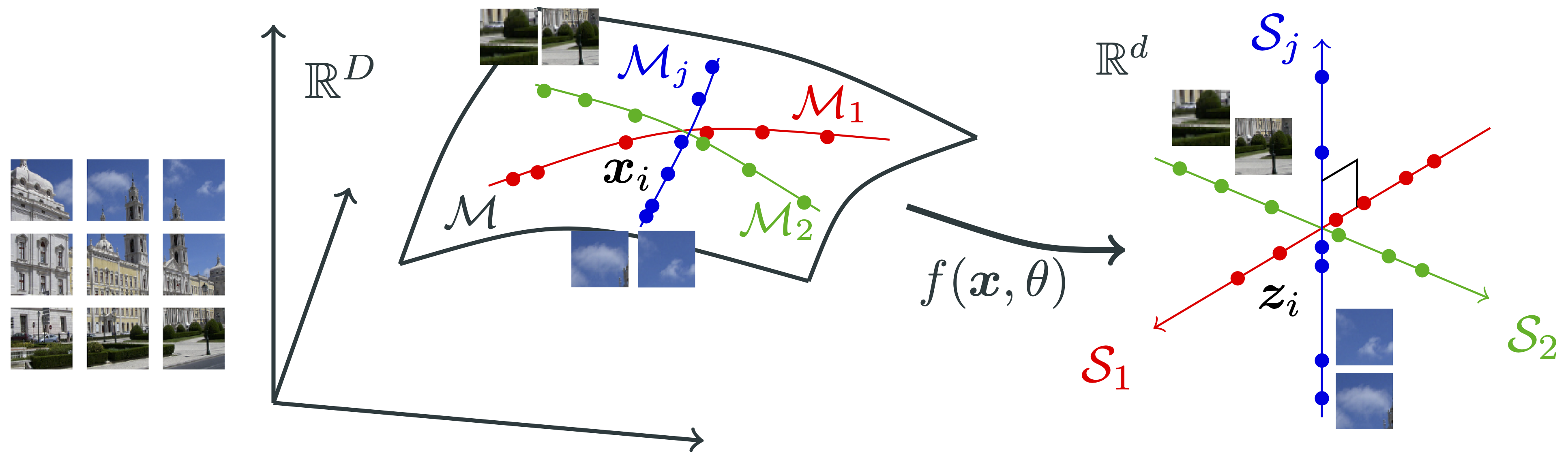

In practice, both linearity and independence are rather idealistic assumptions that distributions of real-world high-dimensional data rarely satisfy. The only thing that we may assume is that the intrinsic dimension of the distribution is very low compared to the dimension of the ambient space in which the data are embedded. Hence, in this chapter, we show how to learn a more general class of low-dimensional distributions in a high-dimensional space that is not necessarily (piecewise) linear.

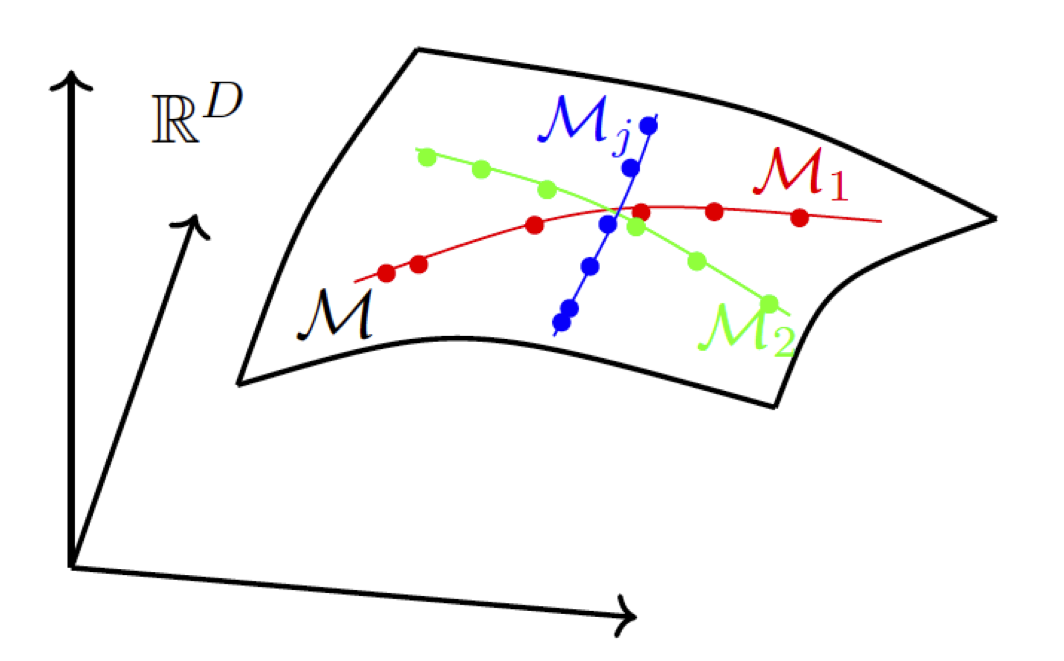

It is typical that the distribution of real data often contains multiple components or modes, say corresponding to different classes of objects in the case of images. These modes might not be statistically independent and they may even have different intrinsic dimensions. It is also typical that we have access to only a finite number of samples of the distribution. Therefore, in general, we may assume our data are distributed on a mixture of (nonlinear) low-dimensional submanifolds in a high-dimensional space. Figure 3.1 illustrates an example of such a distribution.

To learn such a distribution under such conditions, there are several fundamental questions that we need to address:

-

•

What is a general approach to learn a general low-dimensional distribution in a high-dimensional space and represent the learned distribution?

-

•

How do we measure the complexity of the resulting representation so that we can effectively exploit the low dimensionality to learn?

-

•

How do we make the learning process computationally tractable and even scalable, as the ambient dimension is usually high and the number of samples typically large?

As we will see, the fundamental idea of compression, or dimension reduction, which has been shown to be very effective for the linear/independent case, still serves as a general principle for developing effective computational models and methods for learning general low-dimensional distributions.

Due to its theoretical and practical significance, we will study in greater depth how this general framework of learning low-dimensional distributions via compression substantiates when the distribution of interest can be well-modeled or approximated by a mixture of low-dimensional subspaces or low-rank Gaussians.

3.1 Entropy Minimization and Compression

3.1.1 Entropy and Coding Rate

In Chapter 1, we have mentioned that the goal of learning is to find the simplest way to generate a given set of data. Conceptually, the Kolmogorov complexity was intended to provide such a measure of complexity, but it is not computable and is not associated with any implementable scheme that can actually reproduce the data. Hence, we need an alternative, computable, and realizable measure of complexity. That leads us to the notion of entropy, introduced by Shannon in 1948 [Sha48].

To illustrate the constructive nature of entropy, let us start with the simplest case. Suppose that we have a discrete random variable that takes distinct values, or tokens, with equal probability . Then we could encode each token using the -bit binary representation of . This coding scheme could be generalized to encoding arbitrary discrete distributions [CT91]: Given a distribution such that , one could assign each token with probability to a binary code of size bits. Hence the average number of bits, or the coding rate, needed to encode any sample from the distribution is given by the expression:222By the convention of Information Theory [CT91], the here is to the base . Hence entropy is measured in (binary) bits.

| (3.1.1) |

This is known as the entropy of the (discrete) distribution . Note that this entropy is always nonnegative and it is zero if and only if for some with .333Here notice that we use the fact .

3.1.2 Differential Entropy

When the random variable is continuous and has a probability density , one may view that the limit of the above sum (3.1.1) is related to an integral:

| (3.1.2) |

More precisely, given a continuous variable , we may quantize it with a quantization size . Denote the resulting discrete variable as . Then one can show that . Hence, when is small, the differential entropy can be negative. Interested readers may refer to [CT91] for a more detailed explanation.

Example 3.1 (Entropy of Gaussian Distributions).

Through direct calculation, it is possible to show that the entropy of a Gaussian distribution is given by:

| (3.1.3) |

It is also known that the Gaussian distribution achieves the maximal entropy for all distributions with the same variance . The entropy of a multivariate Gaussian distribution in is given by:

| (3.1.4) |

Similar to the entropy for a discrete distribution, we would like the differential entropy to be associated with the coding rate of some realizable coding scheme. For example, as above, we may discretize the domain of the distribution with a grid of size . The coding rate of the resulting discrete distribution can be viewed as an approximation to the differential entropy [CT91].

Be aware that there are some caveats associated with the definition of differential entropy. For a distribution in a high-dimensional space, when its support becomes degenerate (low-dimensional), its differential entropy diverges to . This fact is proved in Theorem B.1 (we also recall the maximum entropy characterization of the Gaussian distribution mentioned above in Theorem B.1) but even in the simple explicit case of Gaussian distributions (3.1.4), when the covariance is singular, we can see that so we have . In such a situation, it is not obvious how to properly quantize or encode such a distribution. Nevertheless, degenerate (Gaussian) distributions are precisely the simplest possible, and arguably the most important, instances of low-dimensional distributions in a high-dimensional space. In this chapter, we will discuss a complete resolution to this seeming difficulty with degeneracy.

3.1.3 Minimizing Coding Rate

Remember that the learning problem entails the recovery of a (potentially continuous) distribution from a set of samples drawn from the distribution. For ease of exposition, we write . Given that the distributions of interest here are (nearly) low-dimensional, we should expect that their (differential) entropy is very small. But unlike the situations that we have studied in the previous chapter, in general we do not know the family of (analytical) low-dimensional models to which the distribution belongs. So checking whether the entropy is small seems to be the only guideline that we can rely on to identify and model the distribution.

Now given the samples alone without knowing what is, in theory they could be interpreted as samples from any generic distribution. In particular, they could be interpreted as any of the following cases:

-

1.

as samples from the empirical distribution itself, which assigns probability to each of the samples .

-

2.

as samples from a standard normal distribution with a variance large enough (say larger than the sample norms);

-

3.

as samples from a normal distribution with a covariance being the empirical covariance of the samples;

-

4.

as samples from a distribution that closely approximates the ground truth distribution .

Now the question is: which one is better, and in what sense? Suppose that you believe these data are drawn from a particular distribution , which may be one of the above distributions considered. Then we could encode the data points with the optimal code book for the distribution . The required average coding length (or coding rate) is given by:

| (3.1.5) |

as the number of samples becomes large. If we have identified the correct distribution , the coding rate is given by the entropy . It turns out that the above coding length is always larger than or equal to the entropy unless . Their difference, denoted as

| (3.1.6) | |||||

| (3.1.7) |

is known as the Kullback-Leibler (KL) divergence, or relative entropy. This quantity is always non-negative.

Theorem 3.1 (Information Inequality).

Let be two probability density functions (that have the same support). Then , where the inequality becomes equality if and only if .444Technically, this equality should be taken to mean “almost everywhere”, i.e., except possibly on a set of zero measure (volume), since this set would not impact the value of any integral.

Proof.

where the first inequality follows from Jensen’s inequality and the fact that the function is strictly concave. The equality holds if and only if . ∎

Hence, given a set of sampled data , to determine which case is better among , , and , we may compare their coding rates for and see which one gives the lowest rate. We know from the above that the (theoretically achievable) coding rate for a distribution is closely related to its entropy. In general, we have:

| (3.1.8) |

Hence, if the data were encoded by the code book associated with each of these distributions, the coding rate for would in general decrease in the same order:

| (3.1.9) |

This observation gives us a general guideline on how we may be able to pursue a distribution which has a low-dimensional structure. It suggests two possible approaches:

-

1.

Starting with a general distribution (say a normal distribution) with high entropy, gradually transforming the distribution towards the (empirical) distribution of the data by reducing entropy.

-

2.

Among a large family of (parametric or non-parametric) distributions with explicit coding schemes that encode the given data, progressively search for better coding schemes that give lower coding rates.

Conceptually, both approaches are essentially trying to do the same thing. For the first approach, we need to make sure such a path of transformation exists and is computable. For the second approach, it is necessary that the chosen family is rich enough and can closely approximate (or contain) the ground truth distribution. For either approach, we need to ensure that solutions with lower entropy or better coding rates can be efficiently computed and converge to the desired distribution quickly.555Say the distribution of real-world data such as images and texts. We will explore both approaches in the two remaining sections of this chapter.

3.2 Compression via Denoising

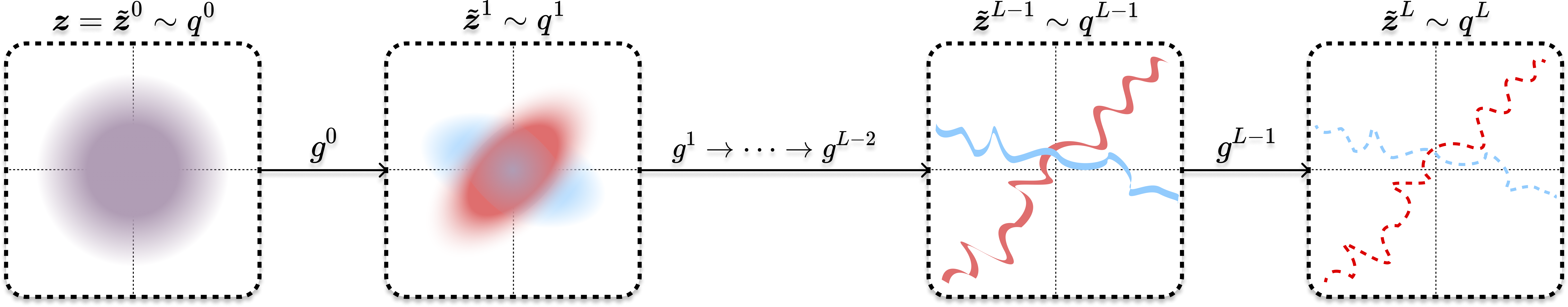

In this section, we will describe a natural and computationally tractable way to learn a distribution by way of learning a parametric encoding of our distribution such that the representation has the minimum entropy or coding rate, then using this encoding to transform high-entropy samples from a standard Gaussian into low-entropy samples from the target distribution, as illustrated in Figure 3.2. This presents a methodology that utilizes both approaches above in order to learn and sample from the distribution.

3.2.1 Diffusion and Denoising Processes

We first want to find a procedure to decrease the entropy of a given very noisy sample into a lower-entropy sample from the data distribution. Here, we describe a potential approach—one of many, but perhaps the most natural way to attack this problem. First, we find a way to gradually increase the entropy of existing samples from the data distribution. Then, we find an approximate inverse of this process. But in general, the operation of increasing entropy does not have an inverse, as information from the original distribution may be destroyed. We will thus tackle a special case where (1) the operation of adding entropy takes on a simple, computable, and reversible form; (2) we can obtain a (parametric) encoding of the data distribution, as alluded to in the above pair of approaches. As we will see, the above two factors will ensure that our approach is possible.

We will increase the entropy in arguably the simplest possible way, i.e., adding isotropic Gaussian noise. More precisely, given the random variable , we can consider the stochastic process which adds gradual noise to it, i.e.,

| (3.2.1) |

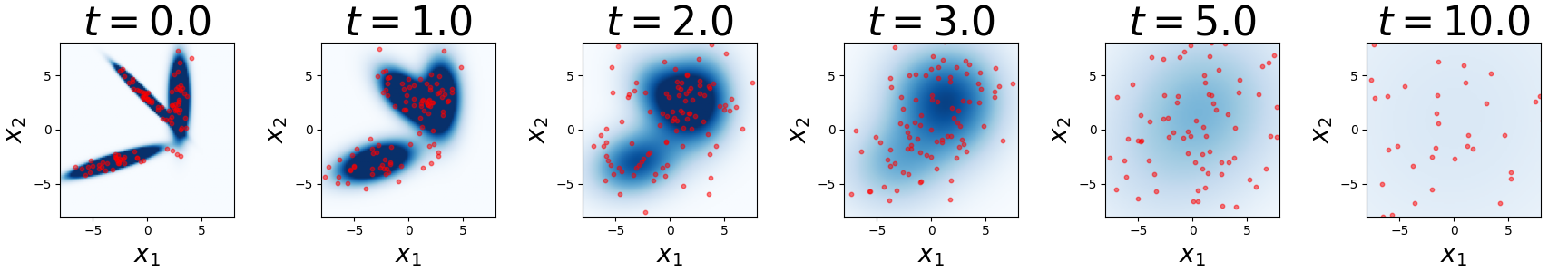

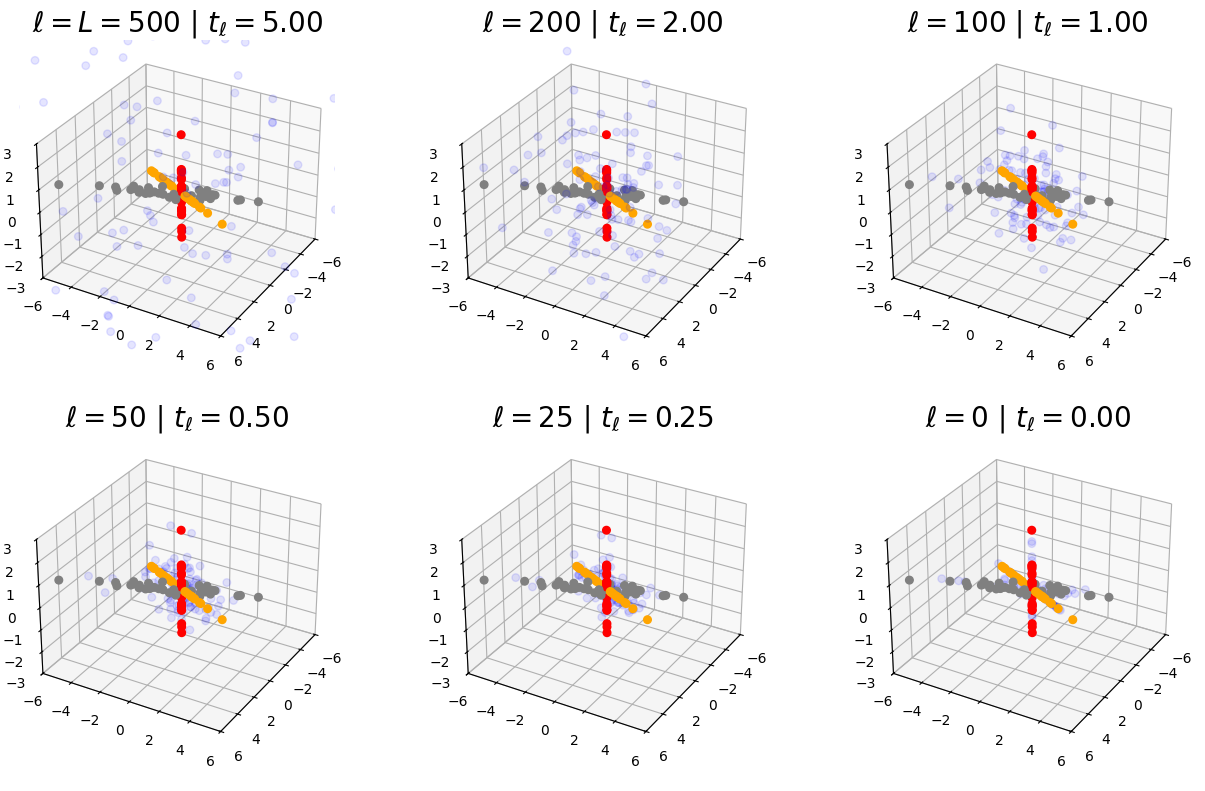

where is a time horizon and is drawn independently of . This process is an example of a diffusion process, so-named because it spreads the probability mass out over all of as time goes on, increasing the entropy over time. This intuition is confirmed graphically by Figure 3.3, and rigorously via the following theorem.

Theorem 3.2 (Simplified Version of Theorem B.2).

Suppose that follows the model (3.2.1). For any , the random variable has differential entropy . Moreover, under certain technical conditions on ,

| (3.2.2) |

showing that the entropy of the noised increases over time .

The proof is elementary, but it is rather long, so we postpone it to Section B.2.1. The main as-yet unstated implication of this result is that for every . To see this, note that if then for all , and if then by the fundamental theorem of calculus, so in both cases for every .

The inverse operation to adding noise is known as denoising. It is a classical and well-studied topic in signal processing and system theory, such as the Wiener filter and the Kalman filter. Several problems discussed in Chapter 2, such as PCA, ICA, and Dictionary Learning, are specific instances of the denoising problem. For a fixed and the additive Gaussian noise model (3.2.1), the denoising problem can be formulated as attempting to learn a function which forms the best possible approximation (in expectation) of the true random variable , given both and :

| (3.2.3) |

The solution to this problem, when optimizing over all possible (square-integrable) functions, is the so-called Bayes optimal denoiser:

| (3.2.4) |

This expression justifies the notation , which is meant to compute a conditional expectation (i.e., conditional mean or conditional average). In short, it attempts to remove the noise from the noisy input, outputting the best possible guess (in expectation and w.r.t. the -distance) of the (de-noised) original random variable.

Example 3.2 (Denoising Gaussian Noise from a Mixture of Gaussians).

In this example we compute the Bayes optimal denoiser for an incredibly important class of distributions, the Gaussian mixture model. To start, let us fix parameters for the distribution: mixture weights , component means , and component covariances , where is the set of symmetric positive semidefinite matrices. Now, suppose is generated by the following two-step procedure:

-

•

First, an index (or label) is sampled such that with probability .

-

•

Second, is sampled from the normal distribution .

Then has distribution

| (3.2.5) |

and so

| (3.2.6) |

Let us define as the probability density of evaluated at . In this notation, the density of is

| (3.2.7) |

Conditioned on , the variables are jointly Gaussian: if we say that where is the matrix square root and independently of (and ), then we have

| (3.2.8) |

This shows that and are jointly Gaussian (conditioned on ) as claimed. Thus we can write

| (3.2.9) |

Thus the conditional expectation of given (i.e., the Bayes optimal denoiser conditioned on ) is famously (Exercise 3.2)

| (3.2.10) |

To find the overall Bayes optimal denoiser, we use the law of iterated expectation, obtaining

| (3.2.11) | ||||

| (3.2.12) | ||||

| (3.2.13) |

The probability can be dealt with as follows. Let be the probability density of conditioned on the value of . Then

| (3.2.14) | ||||

| (3.2.15) |

On the other hand, the conditional expectation is as described before:

| (3.2.16) |

So putting this all together, the true Bayes optimal denoiser is

| (3.2.17) |

This example is particularly important, and several special cases will give us great conceptual insight later. For now, let us attempt to extract some geometric intuition from the functional form of the optimal denoiser (3.2.17).

To try to understand (3.2.17) intuitively, let us first set (i.e., one Gaussian) such that . Let us then diagonalize . Then the Bayes optimal denoiser is

| (3.2.18) |

where are the eigenvalues of . We can observe that this denoiser has three steps:

-

•

Translate the input by .

-

•

Contract the (translated) input in each eigenvector direction by a quantity . If the translated input is low-rank and some eigenvalues of are zero, these directions get immediately contracted to by the denoiser, ensuring that the output of the contraction is similarly low-rank.

-

•

Translate the output back by .

It is easy to show that it contracts the current towards the mean :

| (3.2.19) |

This is the geometric interpretation of the denoiser of a single Gaussian. The overall denoiser of the Gaussian mixture model (3.2.17) uses such denoisers, weighting their output by the posterior probabilities . If the means of the Gaussians are well-separated, these posterior probabilities are very close to or near each mean or cluster. In this regime, the overall denoiser (3.2.17) has the same geometric interpretation as the above single Gaussian denoiser.

At first glance, such a contraction mapping (3.2.19) may appear similar to power iterations (see Section 2.1.2). However, the two are fundamentally different. Power iteration implements a contraction mapping towards a subspace—namely the subspace spanned by the first principal component. In contrast, the iterates in (3.2.19) converge to the mean of the underlying distribution, which is a single point.

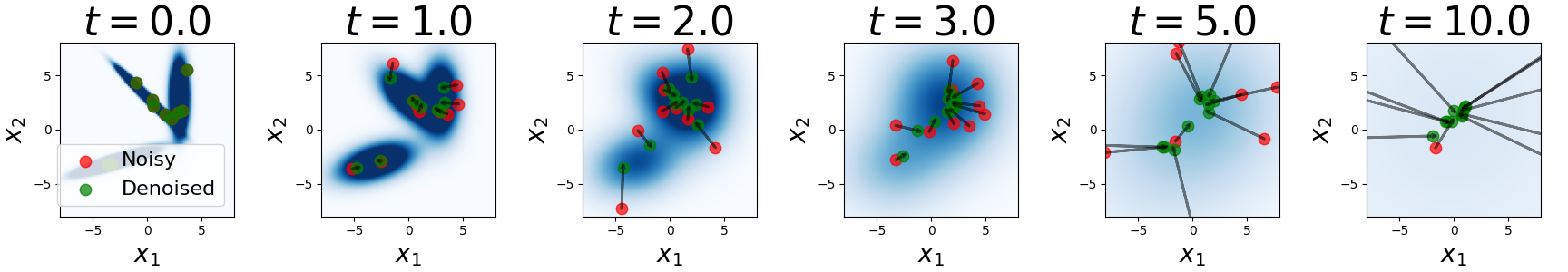

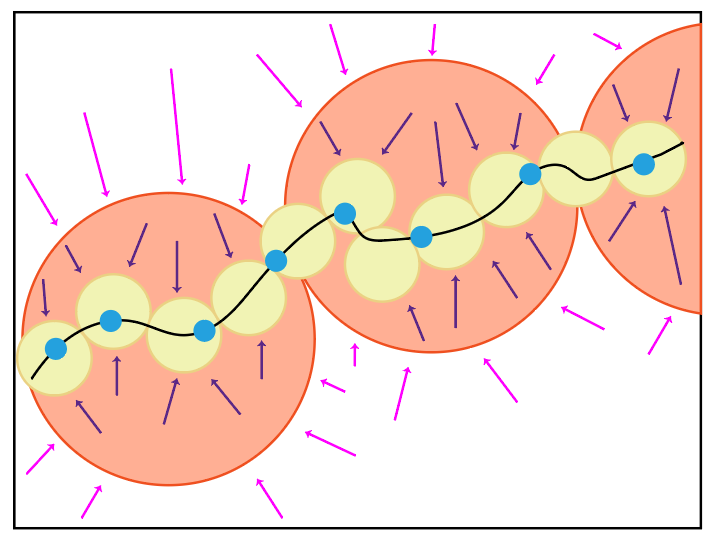

Intuitively, and as we can see from Example 3.2, the Bayes optimal denoiser should move its input towards the modes of the distribution of . It turns out that, actually, we can quantify this by showing that the Bayes optimal denoiser takes a gradient ascent step on the (log-)density of , which (recall) we denoted . That is, following the denoiser means moving from the input iterate to a region of higher probability within this (perturbed) distribution. For small , the perturbation is small so our initial intutition is therefore (almost) exactly right. The picture is visualized in Figure 3.4 and rigorously formulated as Tweedie’s formula [Rob56].

Theorem 3.3 (Tweedie’s Formula).

Suppose that obeys (3.2.1). Let be the density of (as previously declared). Then

| (3.2.20) |

Proof.

For the proof let us suppose that has a density (even though the theorem is true without this assumption), and call this density . Let and be the conditional densities of given and given respectively. Let be the density of evaluated at , so that . Then a simple calculation gives

| (3.2.21) | ||||

| (3.2.22) | ||||

| (3.2.23) | ||||

| (3.2.24) | ||||

| (3.2.25) | ||||

| (3.2.26) | ||||

| (3.2.27) | ||||

| (3.2.28) | ||||

| (3.2.29) | ||||

| (3.2.30) | ||||

| (3.2.31) | ||||

| (3.2.32) |

Simple rearranging of the above equality proves the theorem. ∎

This result develops a connection between denoising and optimization: the Bayes-optimal denoiser takes a single step of gradient ascent on the perturbed data density , and the step size adaptively becomes smaller (i.e., taking more precise steps) as the perturbation to the data distribution grows smaller. The quantity is called the (Hyvärinen) score and frequently appears in discussions about denoising, etc.; it first appeared in a paper of Aapo Hyvärinen in the context of ICA [Hyv05].

Similar to how one step of gradient descent is almost never sufficient to minimize an objective in practice when initializing far from the optimum, the output of the Bayes-optimal denoiser is almost never contained in a high-probability region of the data distribution when is large, especially when the data have low-dimensional structures. We illustrate this point explicitly in the following example.

Example 3.3 (Denoising a Two-Point Mixture).

Let be uniform on the two-point set and let follow (3.2.1). This is precisely a degenerate Gaussian mixture model with priors equal to , means , and covariances both equal to . For a fixed we can use the calculation of the Bayes-optimal denoiser in (3.2.17) to obtain (proof as exercise)

| (3.2.33) |

For near , this quantity is near for almost all inputs . However, for large, this quantity is not necessarily even approximately in the original support of , which, remember, is . In particular, for it holds which lies completely in between the two possible points. Thus will not output “realistic” . Or more mathematically, the distribution of is very different from the distribution of .

Therefore, if we want to denoise the very noisy sample (where—recall— is the maximum time), we cannot just use the denoiser once. Instead, we must use the denoiser many times, analogously to gradient descent with decaying step sizes, to converge to a stationary point . Namely, we shall use the denoiser to go from to which approximates , then from to , etc., all the way from to . Each time we take a denoising step, the action of the denoiser becomes more like a gradient step on the original (log-)density.

More formally, we uniformly discretize into timesteps , i.e.,

| (3.2.34) |

Then for each , going from to , we can run the iteration

| (3.2.35) | ||||

| (3.2.36) | ||||

| (3.2.37) | ||||

| (3.2.38) | ||||

| (3.2.39) |

The effect of this iteration is as follows. At the beginning of the iteration, where is large, we barely trust the output of the denoiser and mostly keep the current iterate. This makes sense, as the denoiser can have huge variance (cf Example 3.3). When is small, the denoiser will “lock on” to the modes of the data distribution, as a denoising step basically takes a gradient step on the true distribution’s log-density, and we can trust it not to produce unreasonable samples, so the denoising step mostly involves the output of the denoiser. At we even throw away the current iterate and just keep the output of the denoiser.

The above is intuition for why we expect the denoising process to converge. We visualize the convergence process in in Figure 3.5. We will develop some rigorous results about convergence later. For now, recall that we wanted to build a process to reduce the entropy. While we did do this in a roundabout way by inverting a process which adds entropy, it is now time to pay the piper and confirm that our iterative denoising process reduces the entropy.

Theorem 3.4 (Simplified Version of Theorem B.3).

Suppose that obeys (3.2.1). Then, under certain technical conditions on , for every with ,

| (3.2.40) |

The full statement of the theorem, and the proof itself, requires some technicality, so it is postponed to Section B.2.2.

The last thing we discuss here is that many times, we will not be able to compute for any , since we do not have the distribution . But we can try to learn one from data. Recall that the denoiser is defined in (3.2.3) as minimizing the mean-squared error . We can use this mean-squared error as a loss or objective function to learn the denoiser. For example, we can parameterize by a neural network, writing it as , and optimize the loss over the parameter space :

| (3.2.41) |

The solution to this optimization problem, implemented via gradient descent or a similar algorithm, will give us a which is a good approximation to (at least if the training works) and which we will use as our denoiser.

What is a good architecture for this neural network ? To answer this question, we will examine the ubiquitous case of a Gaussian mixture model, whose denoiser we computed in Example 3.2. This model is relevant because it can approximate many types of distributions: in particular, given a distribution for , there is a Gaussian mixture model that can approximate it arbitrarily well. So optimizing among the class of denoisers for Gaussian mixture models can give us something close to the optimal denoiser for the real data distribution.

In our case, we assume that is low-dimensional, which loosely translates into the requirement that is approximately distributed according to a mixture of low-rank Gaussians. Formally, we write

| (3.2.42) |

where is an orthogonal matrix. Then the optimal denoiser under (3.2.1) is (from Example 3.2)

| (3.2.43) |

Notice that within the computation and outside of it, we compute the inverse . This is a low-rank perturbation of the full-rank matrix , and thus ripe for simplification via the Sherman-Morrison-Woodbury identity, i.e., for matrices such that and are invertible,

| (3.2.44) |

We prove this identity in Exercise 3.3. For now we apply this identity with , , , and , obtaining

| (3.2.45) | ||||

| (3.2.46) | ||||

| (3.2.47) |

Then we can compute the posterior probabilities as follows. Note that since ’s are all orthogonal, are all the same for each . So

| (3.2.48) | ||||

| (3.2.49) | ||||

| (3.2.50) | ||||

| (3.2.51) | ||||

| (3.2.52) |

This is a softmax operation weighted by the projection of onto each subspace measured by (tempered by a temperature ). Meanwhile, the component denoisers can be written as

| (3.2.53) | ||||

| (3.2.54) | ||||

| (3.2.55) |

Putting these together, we have

| (3.2.56) |

i.e., a projection of onto each of subspaces, weighted by a soft-max operation of a quadratic function of . This functional form is similar to an attention mechanism in a transformer architecture! As we will see in Chapter 4, this is no coincidence at all; the deep link between denoising and lossy compression (to be covered in Section 3.3) makes transformer denoisers so effective in practice. And so overall, our Gaussian mixture model theory motivates the use of transformer-like neural networks for denoising.

Remark 3.1.

Connections between denoising a distribution and probabilistic PCA. Here, we would like to connect denoising a low-dimensional distribution to probabilistic PCA (see Section 2.1.3 for more details about probabilistic PCA). Suppose that we consider in (3.2.42), i.e., , where is an orthogonal matrix. According to (3.2.56), the Bayes optimal denoiser is

| (3.2.57) |

To learn this Bayes optimal denoiser, we can accordingly parameterize the denoising operator as follows:

| (3.2.58) |

where are learnable parameters. Substituting this into the training loss (3.2.3) yields

| (3.2.59) |

where the equality is due to (3.2.1). Conditioned on , we compute

| (3.2.60) | ||||

| (3.2.61) | ||||

| (3.2.62) |

where the second equality follows from and due to . Therefore, Problem (3.2.59) in equivalent to

| (3.2.63) |

This is further equivalent to

| (3.2.64) |

which is essentially Problem (2.1.27).

Overall, the learned denoiser forms an (implicit parametric) encoding scheme of the given data, since it can be used to denoise/project onto the data distribution. Training a denoiser is equivalent to finding a better coding scheme, and this partially fulfills one of the desiderata (the second) at the end of Section 3.1.3. In the sequel, we will discuss how to fulfill the other (the first).

3.2.2 Learning and Sampling a Distribution via Iterative Denoising

Remember that at the end of Section 3.1.3, we discussed a pair of desiderata for pursuing a distribution with low-dimensional structure. The first such desideratum is to start with a normal distribution, say with high entropy, and gradually reduce its entropy until it reaches the distribution of the data. We will call this procedure sampling since we are generating new samples. It is now time for us to discuss how to do this with the toolkit we have built up.

We know how to denoise very noisy samples to attain approximations that have similar distributions to the target random variable . But the desideratum says that, to sample, we want to start with a template distribution with no influence from the distribution of and use the denoiser to guide the iterates towards the distribution of . How can we do this? One way is motivated as follows:

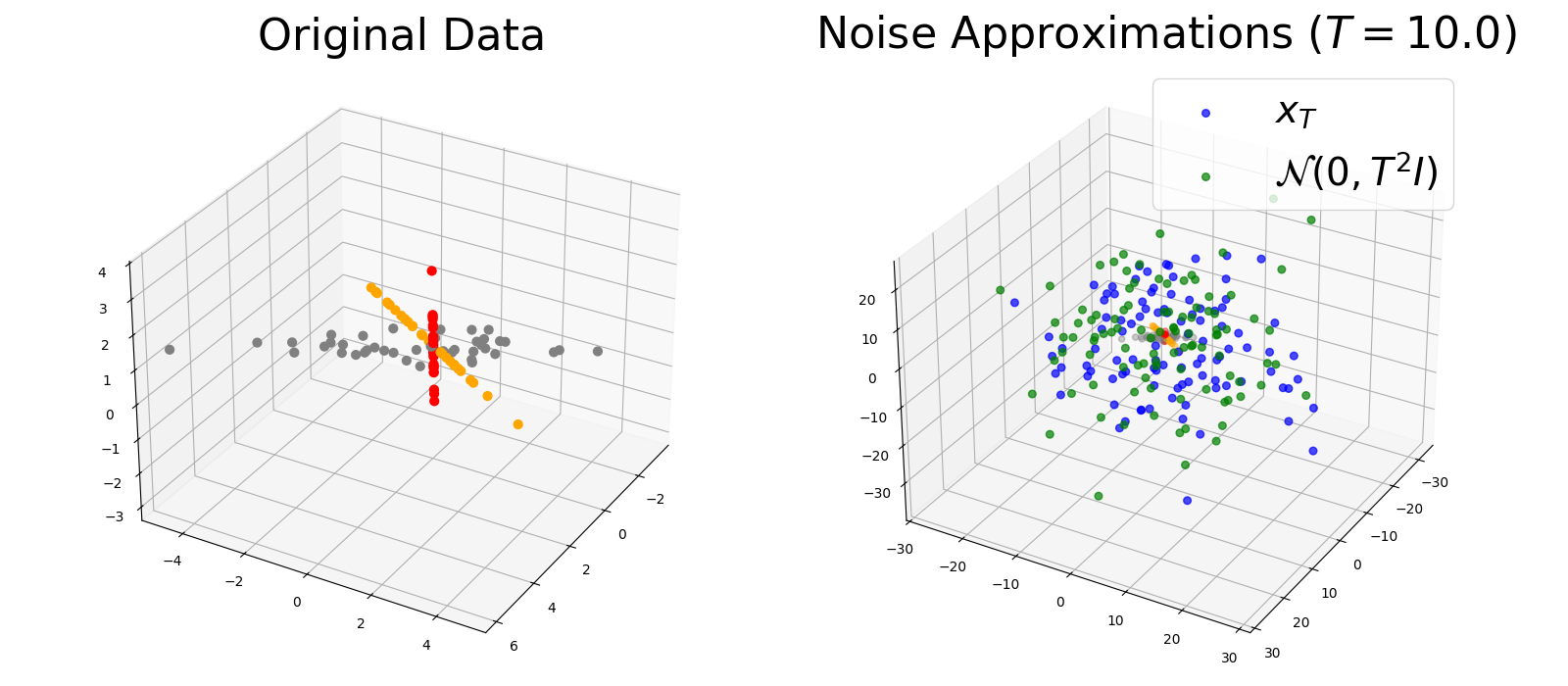

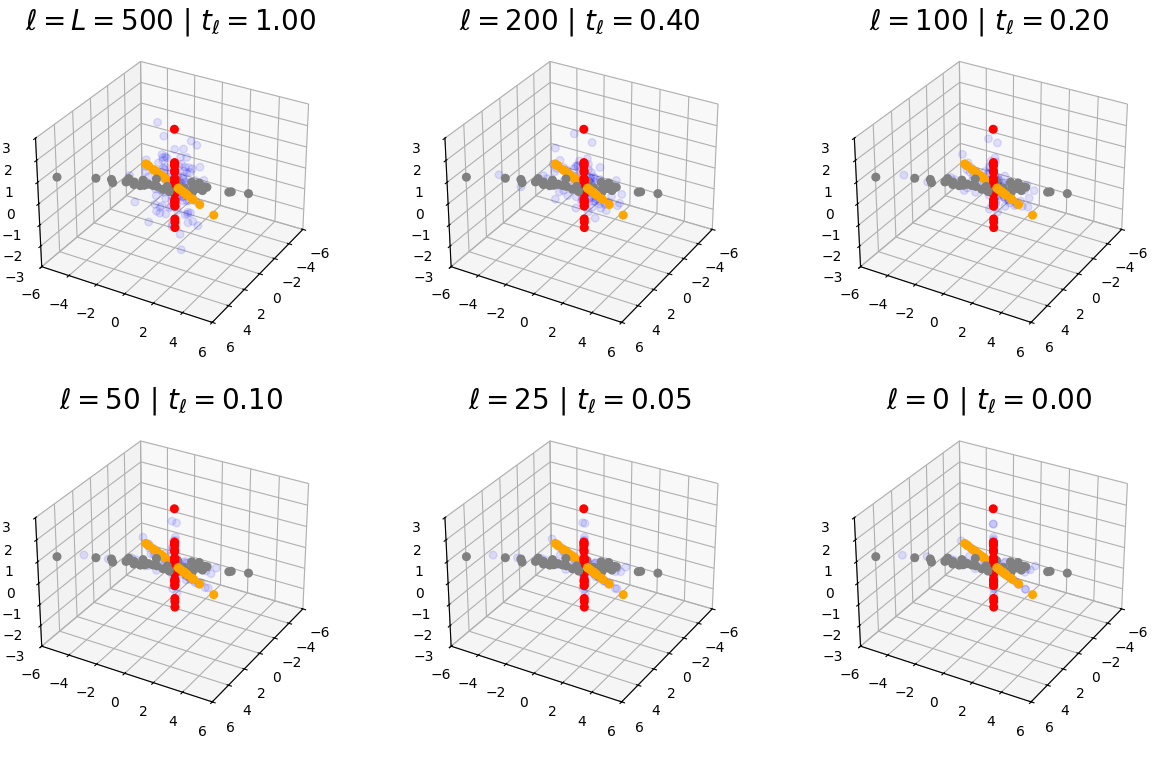

| (3.2.65) |

Thus, . This approximation is quite good for almost all practical distributions, and visualized in Figure 3.6.

So, discretizing into uniformly using (as in the previous section), one possible way to sample from pure noise is:

-

•

Sample (i.i.d. of everything else)

-

•

Run the denoising iteration as in Section 3.2.1, i.e.,

(3.2.66) -

•

Output .

This conceptually is all there is behind diffusion models, which transform noise into data samples in accordance with the first desideratum. However, there are a few steps left to take before we get models which can actually sample from real data distributions like images given practical resource constraints. In the sequel, we will introduce and motivate several such steps.

Step 1: different discretizations.

The first step we do is motivated by the following point: we do not need to spend so many denoising iterations at large . If we look at Figure 3.5, we observe that the first or iterations out of the iterations of the sampling process are just spent contracting the noise towards the data distribution as a whole, before the remaining iterations push the samples towards a subspace. Given a fixed iteration count , this signals that we should spend more timesteps near compared to . During sampling (and training), we can therefore use another discretization of into , such as an exponential discretization:

| (3.2.67) |

where are constants which can be tuned for optimal performance in practice; theoretical analysis will often specify such optimal constants as well. Then the denoising/sampling iteration becomes

| (3.2.68) |

with, again, .

Step 2: different noise models.

The second step is to consider slightly different models compared to (3.2.1). The basic motivation for this is as follows. In practice, the noise distribution becomes an increasingly poor estimate of the true covariance in high dimensions, i.e., (3.2.65) becomes an increasingly worse approximation, especially with anisotropic high-dimensional data. The increased distance between and the true distribution of may cause the denoiser to perform worse in such circumstances. Theoretically, never converges to any distribution as increases, so this setup is difficult to analyze end-to-end. In this case, our remedy is to simultaneously add noise and shrink the contribution of , such that converges as . The rate of added noise is denoted , and the rate of shrinkage is denoted , such that is increasing and is (not strictly) decreasing, and

| (3.2.69) |

The previous setup has and , and this is called the variance-exploding (VE) process. A popular choice which decreases the contribution of , as we described originally, has (so that ), and ; this is the variance-preserving (VP) process. Note that under the VP process, exactly, so we can just sample from this standard distribution and iteratively denoise. As a result, the VP process is much easier to analyze theoretically and more stable empirically.666Why use the whole setup? As we will see in Exercise 3.5, it encapsulates and unifies many proposed processes, including the recently popular so-called flow matching process. Despite this, the VE and VP processes are still the most popular empirically and theoretically (so far), and so we will consider them in this Section.

With this more general setup, Tweedie’s formula (3.2.20) becomes

| (3.2.70) |

The denoising iteration (3.2.68) becomes

| (3.2.71) |

Finally, the Gaussian mixture model denoiser (3.2.17) becomes

| (3.2.72) |

Figure 3.7 demonstrates iterations of the sampling procedure. Note that the denoising iteration (3.2.71) gives a sampling algorithm called the DDIM (“Denoising Diffusion Implicit Model”) sampler [SME20], and is one of the most popular sampling algorithms used today in diffusion models. We summarize it here in Algorithm 3.1.

Step 3: optimizing training pipelines.

If we use the procedure dictated by Section 3.2.1 to learn a separate denoiser for each time to be used in the sampling algorithm, we would have to learn separate denoisers! This is highly inefficient—the usual case is that we have to train separate neural networks, taking up times the training time and storage memory, and then be locked into using these timesteps for sampling forever. Instead, we can train a single neural network to denoise across all times , taking as input the continuous variables and (instead of just before). Mechanically, our training loss averages over , i.e., solves the following problem:

| (3.2.73) |

Similar to Step 1, where we used more timesteps closer to to ensure a better sampling process, we may want to ensure that the denoiser is higher quality closer to , and thereby weight the loss so that near has higher weight. Letting be the weight at time , the weighted loss would look like

| (3.2.74) |

One reasonable choice of weight in practice is . The precise reason will be covered in the next paragraph, but generally it serves to up-weight the losses corresponding to near while still remaining reasonably numerically stable. Also, of course, we cannot compute the expectation in practice, so we use the most straightforward Monte-Carlo average to estimate it. The series of changes made here have several conceptual and computational benefits: we do not need to train multiple denoisers, we can train on one set of timesteps and sample using a subset (or others entirely), etc. The full pipeline is discussed in Algorithm 3.2.

(Optional) Step 4: changing the estimation target.

Note that it is common to instead reorient the whole denoising pipeline around noise predictors, i.e., estimates of . In practice, noise predictors are slightly easier to train because their output is (almost) always of comparable size to a Gaussian random variable, so training is more numerically stable. Note that by (3.2.69) we have

| (3.2.75) |

Therefore any predictor for can be turned into a predictor for using the above relation, i.e.,

| (3.2.76) |

and vice-versa. Thus a good network for estimating is the same as a good network for estimating plus a residual connection (as seen in, e.g., transformers). Their losses are also the same as the denoiser, up to the factor of , i.e.,

| (3.2.77) |

For the sake of completeness we will mention that other targets have been proposed for different tasks, e.g., (called -prediction or velocity prediction), etc., but denoising and noise prediction remain commonly used. Throughout the rest of this book we will only consider denoising.

We have made lots of changes to our original platonic noising/denoising process. To assure ourselves that the new process still works in practice, we can compute numerical examples (such as Figure 3.7). To assure ourselves that it is theoretically sound, we can prove a bound on the error rate for the sampling algorithm, which shows that the error rate is small. We will now furnish such a rate from the literature, which shows that the output distribution of the sampler converges in the so-called total variation (TV) distance to the true distribution. The TV distance is defined between two random variables and as:

| (3.2.78) |

If and are very close (uniformly), then the supremum will be small. So the TV distance measures the closeness of random variables. (It is indeed a metric, as the name suggests; the proof is an exercise.)

Theorem 3.5 ([LY24] Theorem 1, Simplified).

Suppose that . If is denoised according to the VP process with an exponential discretization777The precise definition is rather lengthy in our notation and only defined up to various absolute constants, so we omit it here for brevity. Of course it is in the original paper [LY24]. as in (3.2.67), the output of Algorithm 3.1 satisfies the total variation bound

| (3.2.79) |

where is the Bayes optimal denoiser for , and is a version of the big- notation which ignores logarithmic factors in .

The very high-level proof technique is, as discussed earlier, to bound the error at each step, distinguish the error sources (between discretization and denoiser error), and carefully ensure that the errors do not accumulate too much (or even cancel out).

Note that if and we correctly learn the Bayes optimal denoiser (such that the excess error is ), then the sampling process in Algorithm 3.1 yields a perfect (in distribution) inverse of the noising process, since the error rate in Theorem 3.5 goes to ,888There are similar results for VE processes, though none are as sharp as this to our knowledge. as heuristically argued previously.

Remark 3.2.

What if the data is low-dimensional, say supported on a low-rank subspace of the high dimensional space ? If the data distribution is compactly supported—say if the data is normalized to the unit hypercube, which is often ensured as a pre-processing step for real data such as images—it is possible to do better. Namely, the authors of [LY24] also define a measure of approximate intrinsic dimension using the asymptotics of the so-called covering number, which is extremely similar in intuition (if not in implementation) to the rate distortion function presented in the next Section. Then they show that using a particular small modification of the DDIM sampler in Algorithm 3.1 (i.e., slightly perturbing the update coefficients), the discretization error becomes

| (3.2.80) |

instead of like it was in Theorem 3.5. Therefore, using this modified algorithm, does not have to be too large even as reaches the thousands or millions, since real data have low-dimensional structure. However in practice we use the DDIM sampler instead, so should have a mild dependence on to achieve consistent error rates. The exact choice of trades off between the computational complexity (e.g., runtime or memory consumption) of sampling and the statistical complexity of learning a denoiser for low-dimensional structures. The value of is often different at training time (where a larger allows better coverage of the interval , which helps the network learn a relationship which generalizes over ) and sampling time (where being smaller means more efficient sampling). One can even pick the timesteps adaptively at sampling time in order to optimize this tradeoffs [BLZ+22].

Remark 3.3.

Various other works define the reverse process as moving backward in the time index using an explicit difference equation, or differential equation in the limit , or forward in time using the transformation , such that if increases then becomes closer to . In this work we strive to keep consistency: we move forward in time to noise, and backward in time to denoise. If you are reading another work which is not clear on the time index, or trying to implement an algorithm which is similarly unclear, there is one way to do it right every time: the sampling process should always have a positive coefficient on both the denoiser term and the current iterate when moving from step to step. But in general many papers define their own notation and it is not user-friendly.

Remark 3.4.

The theory presented at the end of the last Section 3.2.1 seems to suggest (loosely speaking) that in practice, using a transformer-like network is a good choice for learning or approximating a denoiser. This is reasonable, but what is the problem with using any old neural network (such as a multi-layer perceptron (MLP)) and just trying to scale it up to infinity? To observe the problem with this, let us look at another special case of the Gaussian mixture model studied in Example 3.2. Namely, the empirical distribution is an instance of a degenerate Gaussian mixture model, with components sampled with equal probability . In this case the Bayes optimal denoiser is

| (3.2.81) |

This is a convex combination of the data , and the coefficients get “sharper” (i.e., closer to or ) as . Notice that this denoiser optimally solves the denoising optimization problem (3.2.74) when we compute the loss based on drawing uniformly at random from a fixed finite dataset , which is a very realistic setting. Thus, if our network architecture is expressive enough such that optimal denoisers of the above form (3.2.81) may be well-approximated, then the learned denoiser may do just that. Then, our iterative denoising Algorithm 3.1 will sample exactly from the empirical distribution, re-generating samples in the training data, as certified by Theorem 3.5. This is a bad sampler, not really more interesting than a database of all samples, and so it is important to understand how to avoid this in practice. The key is to come up with a network architecture which can well-approximate the true denoiser (say corresponding to a low-rank distribution as in (3.2.56)) but not the empirical Bayesian denoiser as in (3.2.81). Some work has explored this fine line and why modern diffusion models, which use transformer- and convolutional-based network architectures, can memorize and generalize in different regimes [KG24, NZM+24].

At a high level, a denoiser which memorizes all the training points, as in (3.2.81), corresponds to a parametric model of the distribution which has minimal coding rate, and achieves this by just coding every sample separately. We will discuss this problem (and seeming paradox with our initial desiderata at the end of Section 3.1.3) from the perspective of information theory in the next section.

3.3 Compression via Lossy Coding

Let us recap what we have covered so far. We have discussed how to fit a denoiser using finite samples. We showed that this denoiser encodes a distribution in that it is directly connected to its log-density via Tweedie’s formula (3.2.20). Then, we used it to gradually transform a pure noise (high-entropy) distribution towards the learned distribution via iterative denoising. Thus, we have developed the first way of learning or pursuing a distribution laid out at the end of Section 3.1.3.

Nevertheless, in this methodology, the encoding of the distribution is implicit in the denoiser’s functional form and parameters, if any. In fact, acute readers might have noticed that for a general distribution, we have never explicitly specified what the functional form for the denoiser is. In practice, people typically model it by some deep neural network with an empirically designed architecture. In addition, although we know the above denoising process reduces the entropy, we do not know by how much, nor do we know the entropy of the intermediate and resulting distributions.

Recall that our general goal is to model data from a (continuous) distribution with a low-dimensional support. If our goal is to identify the “simplest” model that generates the data, one could consider three typical measures of parsimony: the dimension, the volume, or the (differential) entropy. Well, if one uses the dimension, then obviously the best model for a given dataset is the empirical distribution itself which is zero-dimensional. For all distributions with low-dimensional supports, the differential entropy is always negative infinity; the volume of their supports are always zero. So, among all distributions of low-dimensional supports that could have generated the same data samples, how can we decide which one is better based on these measures of parsimony that cannot distinguish among low-dimensional models at all? This section aims to address this seemingly baffling situation.

In the remainder of this chapter, we discuss a framework that allows us to alleviate the above technical difficulty by associating the learned distribution with an explicit computable encoding and decoding scheme, following the second approach suggested at the end of Section 3.1.3. As we will see, such an approach essentially allows us to accurately approximate the entropy of the learned distributions in terms of a (lossy) coding length or coding rate associated with the coding scheme. With such a measure, not only can we accurately measure how much the entropy is reduced, hence information gained, by any processing (including denoising) of the distribution, but we can also derive an explicit form of the optimal operator that can conduct such operations in the most efficient way. As we will see in the next Chapter 4, this will lead to a principled explanation for the architecture of deep networks, as well as to more efficient deep-architecture designs.

3.3.1 Necessity of Lossy Coding

We have previously, multiple times, discussed a difficulty: if we learn the distribution from finite samples in the end, and our function class of denoisers contains enough functions, how do we ensure that we sample from the true distribution (with low-dimensional supports), instead of any other distribution that may produce those finite samples with high probability? Let us reveal some of the conceptual and technical difficulties with some concrete examples.

Example 3.4 (Volume, Dimension, and Entropy).

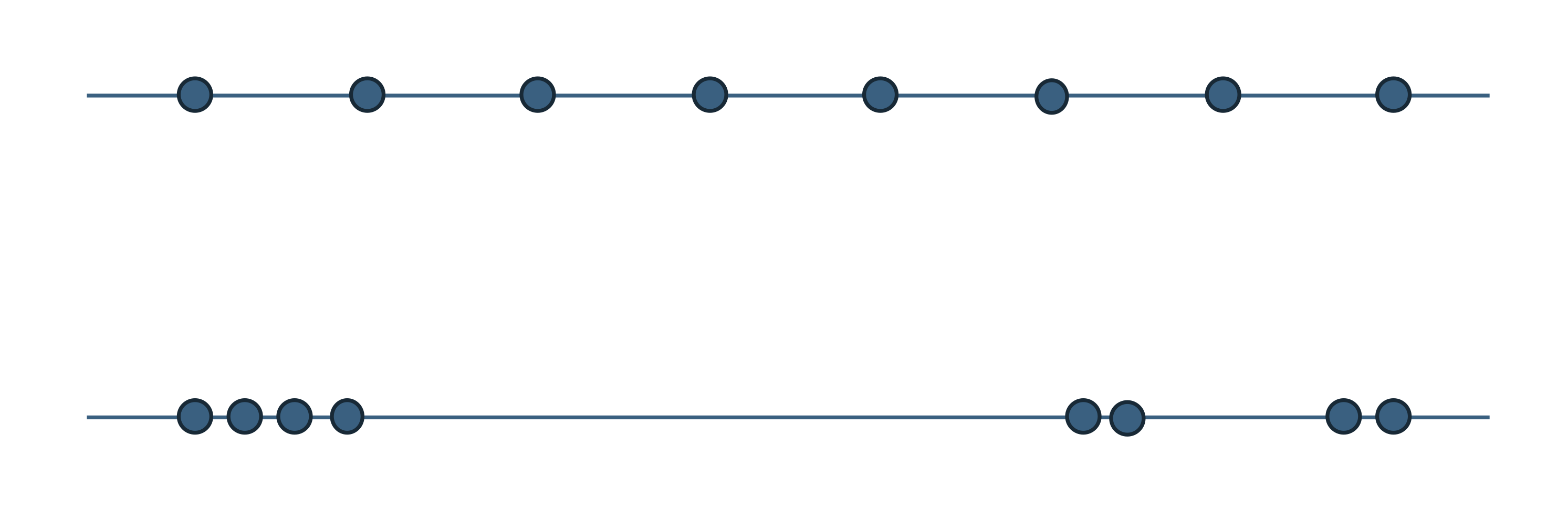

For the example shown on the top of Figure 3.8, suppose we have taken some samples from a uniform distribution on a line (say in a 2D plane). The volume of the line or the sample sets is zero. Geometrically, the empirical distribution on the produced finite sample set is the minimum-dimension one which can produce the finite sample set.999A set of discrete samples are all of zero dimension whereas the supporting line is one dimension. But this is in seemingly contrast with yet another measure of complex: entropy. The (differential) entropy of the line is negative infinity but the (discrete) entropy of this sample set is finite and positive. So we seem to have a paradoxical situation according to these common measures of parsimony or complexity: they cannot properly differentiate among (models for) distributions of low-dimensional supports at all, and some seem to differentiate them even in exactly opposite manners.101010Of course, strictly speaking, differential entropy and discrete entropy are not directly comparable.

Example 3.5 (Density).

Consider the two sets of sampled data points shown in Figure 3.8. Geometrically, they are essentially the same: each set consists of eight points and each point has occurred with equal frequency th. The only difference is that for the second data set, some points are “close” enough to be viewed as having a higher density around their respective “cluster.” Which one is more relevant to the true distribution that may have generated the samples? How can we reconcile such ambiguity in interpreting this kind of (empirical) distributions?

There is yet another technical difficulty associated with constructing an explicit encoding and decoding scheme for a data set. Given a sampled data set in , how to design a coding scheme that is implementable on machines with finite memory and computing resources? Note that even representing a general real number requires an infinite number of digits or bits. Therefore, one may wonder whether the entropy of a distribution is a direct measure for the complexity of its (optimal) coding scheme. We examine this matter with another simple example.

Example 3.6 (Precision).

Consider a discrete distribution with equal probability taking the values of the Euler number or the number . The entropy of this distribution is , which suggests that one may encode the two numbers by a one-bit digit or , respectively. But can you realize a decoding scheme for this code on a finite-state machine? The answer is actually no, as it takes infinitely many bits to describe either number precisely.

Hence, it is generally impossible to have an encoding and decoding scheme that can precisely reproduce samples from an arbitrary real-valued distribution.111111That is, if one wants to encode such samples precisely, the only way is to memorize every single sample. But there would be little practical value to encode a distribution without being able to decode for samples drawn from the same distribution.

So to ensure that any encoding/decoding scheme is computable and implementable with finite memory and computational resources, we need to quantify the sample and encode it only up to a certain precision, say . By doing so, in essence, we treat any two data points equivalent if their distance is less than . More precisely, we would like to consider coding schemes

| (3.3.1) |

such that the expected error caused by the quantization is bounded by . It is mathematically more convenient, and conceptually almost identical, to bound the expected squared error by , i.e.,

| (3.3.2) |

Typically, the distance is chosen to be the Euclidean distance, or the 2-norm.121212More generally, we can replace with any so-called divergence. We will adopt this choice in the sequel.

3.3.2 Rate Distortion and Data Geometry

Of course, among all encoding schemes that satisfy the above constraint, we would like to choose the one that minimizes the resulting coding rate. For a given random variable and a precision , this rate is known as the rate distortion, denoted as . A deep theorem in information theory, originally proved by [Sha59], establishes that this rate can be expressed equivalently in purely probabilistic terms as

| (3.3.3) |

where the quantity is known as the mutual information, defined by

| (3.3.4) |

Note that the minimization in (3.3.3) is over all conditional distributions that satisfy the distortion constraint . Each such conditional distribution induces a joint distribution , which determines the mutual information (3.3.4). Many convenient properties of the mutual information (and hence the rate distortion) are implied by corresponding properties of the KL divergence (recall Theorem 3.1). From the definition, we know that is a non-increasing function in .

Remark 3.5.

As it turns out, the rate distortion is an implementable approximation to the entropy of in the following sense. Assume that and are continuous random vectors. Then the mutual information can be written as

| (3.3.5) |

where is the conditional entropy of given . Hence, the minimal coding rate is achieved when the difference between the entropy of and the conditional entropy of given is minimized among all distributions that satisfy the constraint: .

In fact, it is not necessary to assume that and are continuous to obtain the above type of conclusion. For example, if both random vectors are instead discrete, we have after a suitable interpretation of the KL divergence for discrete-valued random vectors that

| (3.3.6) |

More generally, advanced mathematical notions from measure theory can be used to define the mutual information (and hence the rate distortion) for arbitrary random variables and , including those with rather exotic low-dimensional distributions; see [CT91, §8.5].

Remark 3.6.

Given a set of data points in , one can always interpret them as samples from a uniform discrete distribution with equal probability on these vectors. The entropy for such a distribution is .131313Note again, even if we can encode these vectors with this coding rate, we cannot decode them with an arbitrary precision. However, even if is a uniform distribution on its samples, the coding rate achievable with a lossy coding scheme could be significantly lower than if these samples are not so evenly distributed and many are clustered closely to each other. Therefore, for the second distribution shown in Figure 3.8, for a properly chosen quantization error , the achievable lossy coding rate can be significantly lower than coding it as a uniform distribution.141414Nevertheless, for this discrete uniform distribution, when is small enough, we always have . Also notice that, with the notion of rate distortion, the difficulty discussed in Example 3.6 also disappears: We can choose two rational numbers that are close enough to each of the two irrational numbers. The resulting coding scheme will have a finite complexity.

Example 3.7.

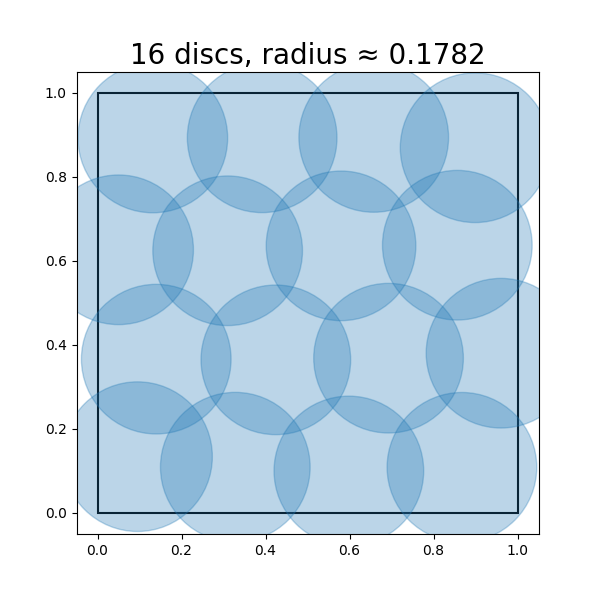

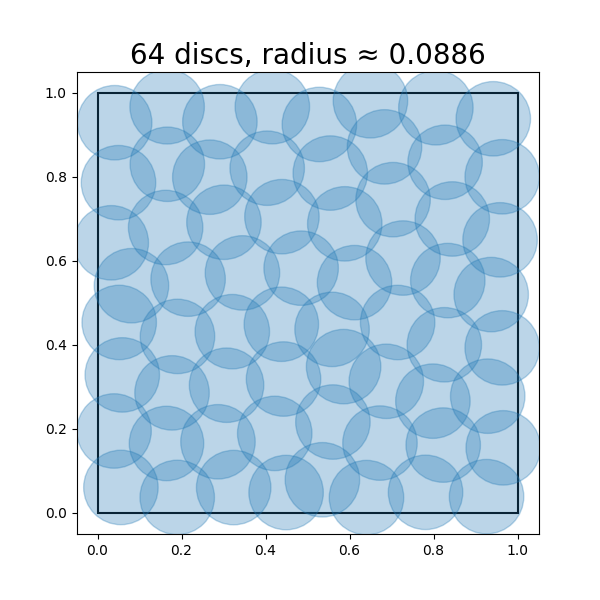

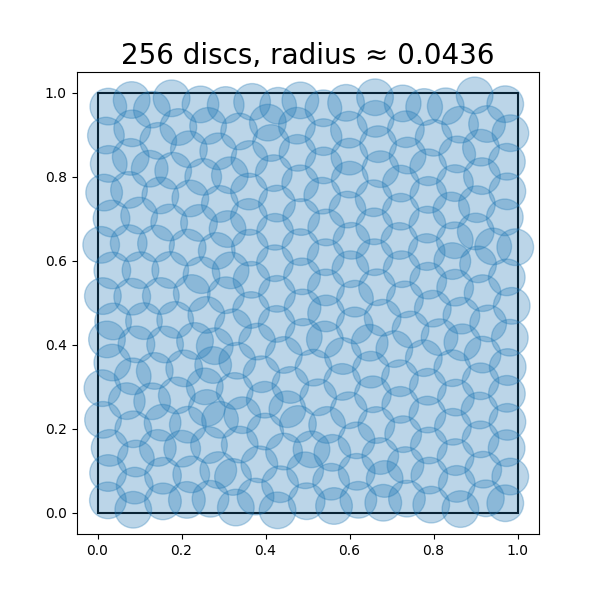

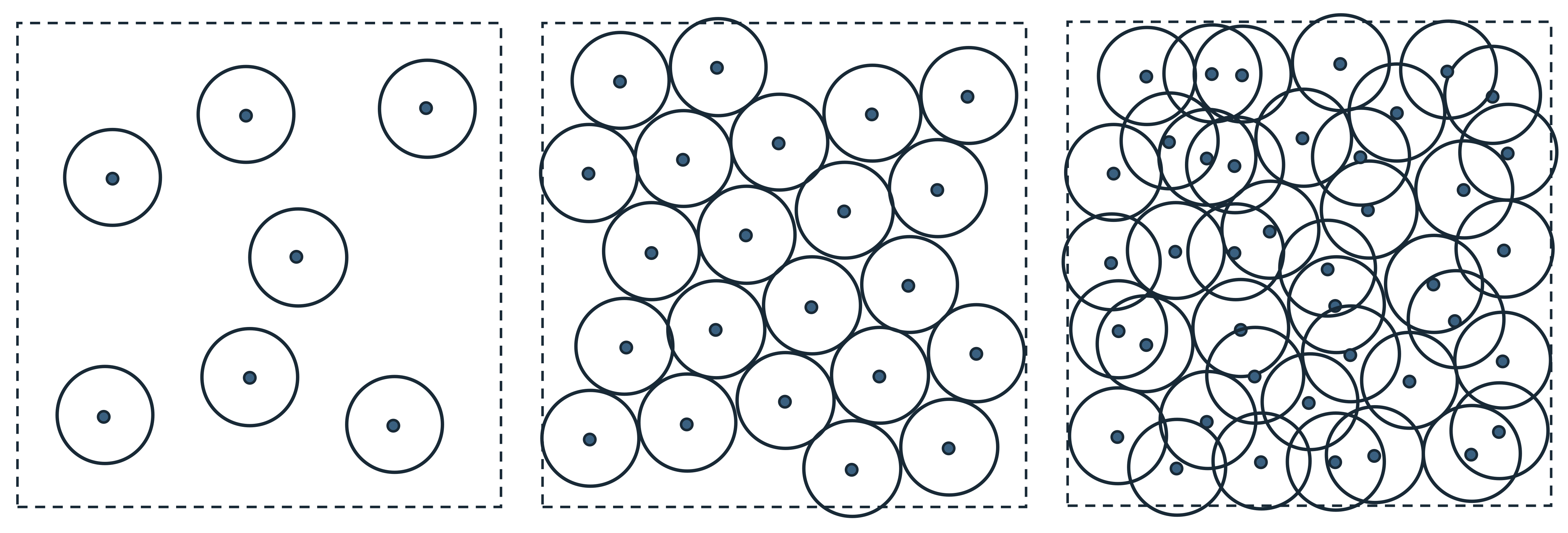

Sometimes, one may face an opposite situation when we want to fix the coding rate first and try to find a coding scheme that minimizes the distortion. For example, suppose that we only want to use a fixed number of codes for points sampled from a distribution, and we want to know how to design the codes such that the average or maximum distortion is minimized during the encoding/decoding scheme. For example, given a uniform distribution on a unit square, we wonder how precisely we can encode points drawn from this distribution, with say bits. This problem is equivalent to asking what is the minimum radius (i.e., distortion) such that we can cover the unit square with discs of this radius. Figure 3.9 shows approximately optimal coverings of a square with , so that discs, respectively. Notice that the optimal radii of the discs decreases as the number of discs increases.

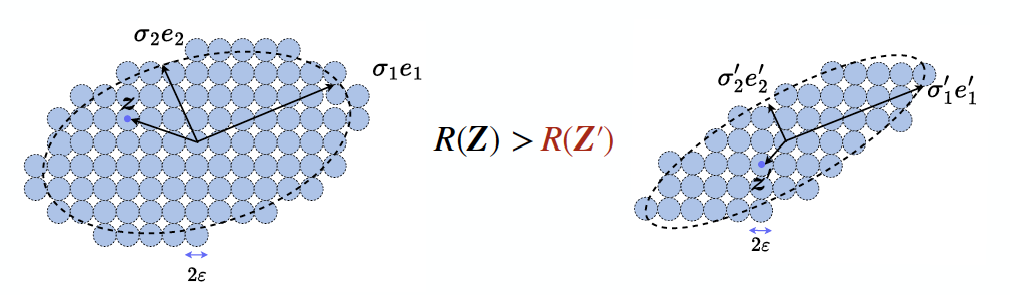

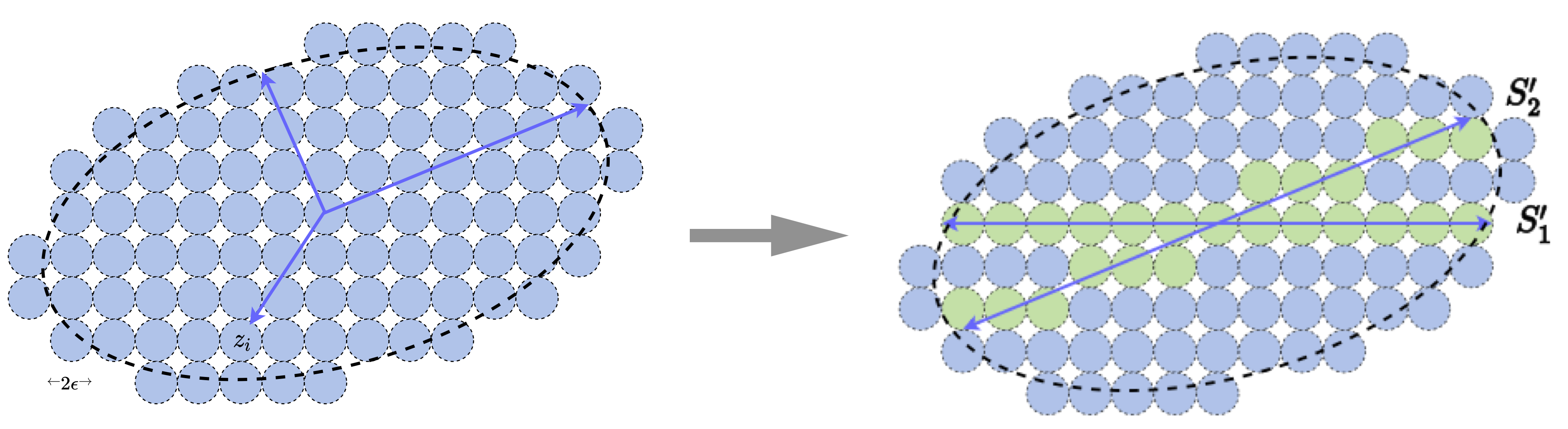

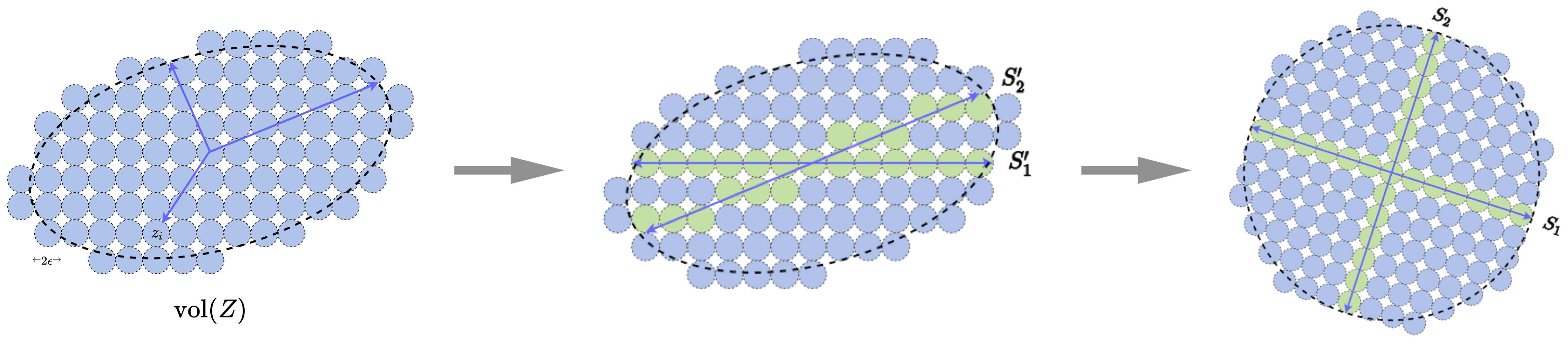

It turns out to be a notoriously hard problem to obtain closed-form expressions for the rate distortion function (3.3.3) for general distributions . However, as Example 3.7 suggests, there are important special cases where the geometry of the support of the distribution can be linked to the rate distortion function and hence to the optimal coding rate at distortion level . In fact, this example can be generalized to any setting where the support of is a sufficiently regular compact set—including low-dimensional distributions—and is uniformly distributed on its support. This covers a vast number of cases of practical interest. We formalize this notion in the following result, which establishes this property for a special case.

Theorem 3.6.

Suppose that is a random variable such that its support is a compact set. Define the covering number as the minimum number of balls of radius that can cover , i.e.,

| (3.3.7) |

where is the Euclidean ball of radius centered at . Then it holds

| (3.3.8) |

If, in addition, is uniformly distributed on and is a mixture of mutually orthogonal low-rank subspaces,151515In fact, it is possible to treat highly irregular , such as fractals, with a parallel result, but its statement becomes far more technical: c.f. Riegler et al. [RBK18, RKB23]. We give a simple proof in Section B.3 which shows the result for mixtures of subspaces. then a matching lower bound holds:

| (3.3.9) |

Proof.

A proof of this theorem is beyond the scope of this book and we defer it to Section B.3. ∎

The implication of Theorem 3.6 can be summarized as follows: for sufficiently accurate coding of the distribution of , the minimum rate distortion coding framework is completely characterized by the sphere packing problem on the support of . The core of the proof of Theorem 3.6 can indeed be generalized to more complex distributions such as sufficiently incoherent mixtures of manifolds, but we leave this for a future study. So the rate distortion can be thought of as a “probability-aware” way to approximate the support of the distribution of by a mixture of many small balls.

We now discuss another connection between this and the denoising-diffusion-entropy complexity hierarchy we discussed earlier in this chapter.

Remark 3.7.

The key ingredient in the proof of the lower bound in Theorem 3.6 is an important result from information theory known as the Shannon lower bound for the rate distortion, named after Claude Shannon, who first derived it in a special case [Sha59]. It asserts the following estimate for the rate distortion function, for any random variable with a density and finite expected squared norm [LZ94]:

| (3.3.10) |

where is a constant depending only on . Moreover, this lower bound is actually sharp as : that is,

| (3.3.11) |

So when the distortion is small, we can think solely in terms of the Shannon lower bound, rather than the (generally intractable) optimization problem defining the rate distortion (3.3.3).

The Shannon lower bound is the bridge between the coding rate, entropy minimization/denoising, and geometric sphere packing approaches for learning low-dimensional distributions. Notice that in the special case of a uniform density , (3.3.10) becomes

| (3.3.12) | ||||

| (3.3.13) |

The ratio approximates the number of -balls needed to cover by a worst-case argument, which is accurate for sufficiently regular sets when is small (see Section B.3 for details). Meanwhile, recall the Gaussian denoising model from earlier in the Chapter, where is independent of . Interestingly, the differential entropy of the joint distribution can be calculated as

| (3.3.14) | ||||

| (3.3.15) |

We have seen the Gaussian entropy calculated in Equation 3.1.4: when is small, it is equal, up to additive constants, to the volumetric quantity we have seen in the Shannon lower bound. In certain special cases (e.g., data supported on incoherent low-rank subspaces), when is small and the support of is sufficiently regular, the distribution of can even be well-approximated locally by the product of the distributions and , justifying the above computation. Hence the Gaussian denoising process yields yet another interpretation of the Shannon lower bound, as arising from the entropy of a noisy version of , with noise level proportional to the distortion level .

Thus, this finite rate distortion approach via sphere covering re-enables or generalizes all previous measures of complexity of the distribution, allowing us to differentiate between and rank different distributions in a unified way. These interrelated viewpoints are visualized in Figure 3.10.

For a general distribution at finite distortion levels, it is typically impossible to find its rate distortion function in an analytical form. One must often resort to numerical computation161616Interested readers may refer to [Bla72] for a classic algorithm that computes the rate distortion function numerically for a discrete distribution.. Nevertheless, as we will see, in our context we often need to know the rate distortion as an explicit function of a set of data points or their representations. This is because we want to use the coding rate as a measure of the goodness of the representations. An explicit analytical form makes it easy to determine how to transform the data distribution to improve the representation. So, we should work with distributions whose rate distortion functions take explicit analytical forms. To this end, we start with the simplest, and also the most important, family of distributions.

3.3.3 Lossy Coding Rate for a Low-Dimensional Gaussian

Now suppose we are given a set of data samples in from any distribution.171717Or these data points could be viewed as an (empirical) distribution themselves. We would like to come up with a constructive scheme that can encode the data up to certain precision, say

| (3.3.16) |

Notice that this is a sufficient, explicit, and interpretable condition which ensures that the data are encoded such that . This latter inequality is exactly the rate distortion constraint for the provided empirical distribution and its encoding. For example, in Example 3.7, we used this simplified criterion to explicitly find the minimum distortion and explicit coding scheme for a given coding rate.

Without loss of generality, let us assume the mean of is zero, i.e., . Without any prior knowledge about the nature of the distribution behind , we may view as sampled from a Gaussian distribution with the covariance181818It is known that given a fixed variance, the Gaussian achieves the maximal entropy. That is, it gives an upper bound for what the worst case could be in terms of possible coding rate.

| (3.3.17) |

Notice that geometrically characterizes an ellipsoidal region where most of the samples reside.

We may view as a noisy version of :

| (3.3.18) |

where is a Gaussian noise independent of . Then the covariance of is given by

| (3.3.19) |

Note that the volume of the region spanned by the vectors is proportional to the square root of the determinant of the covariance matrix

| (3.3.20) |

The volume spanned by each random vector is proportional to

| (3.3.21) |

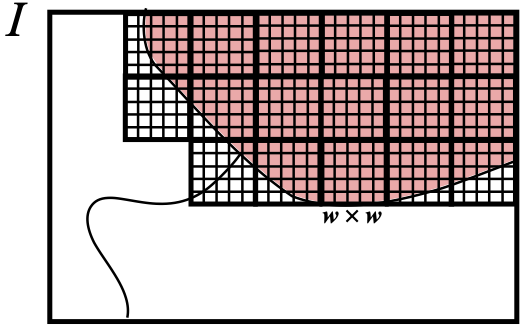

To encode vectors that fall into the region spanned by , we can cover the region with non-overlapping balls of radius , as illustrated in Figure 3.11. When the volume of the region spanned by is significantly larger than the volume of the -ball, the total number of balls that we need to cover the region is approximately equal to the ratio of the two volumes:

| (3.3.22) |

If we use binary numbers to label all the -balls in the region of interest, the total number of binary bits needed is thus

| (3.3.23) |

Example 3.8.

Figure 3.11 shows an example of a 2D distribution with an ellipsoidal support – approximating the support of a 2D Gaussian distribution. The region is covered by small balls of size . All the balls are numbered from to say . Then given any vector in this region, we only need to determine to which -ball center it is the closest, denoted as . To remember , we only need to remember the number of this ball, which takes bits to store. If we need to decode from this number, we simply take as the center of the ball. This leads to an explicit encoding and decoding scheme:

| (3.3.24) |

One may refer to these ball centers as “codes” of a code book or a dictionary for the encoding scheme. It is easy to see that the accuracy of this (lossy) encoding-decoding scheme is about the radius of the ball . Clearly is the average number of bits required to encode the ball number of each vector with this coding scheme, and hence can be called the coding rate associated with this scheme.

From the above derivation, we know that the coding rate is (approximately) achievable with an explicit encoding (and decoding) scheme. It has two interesting properties:

- •

-

•

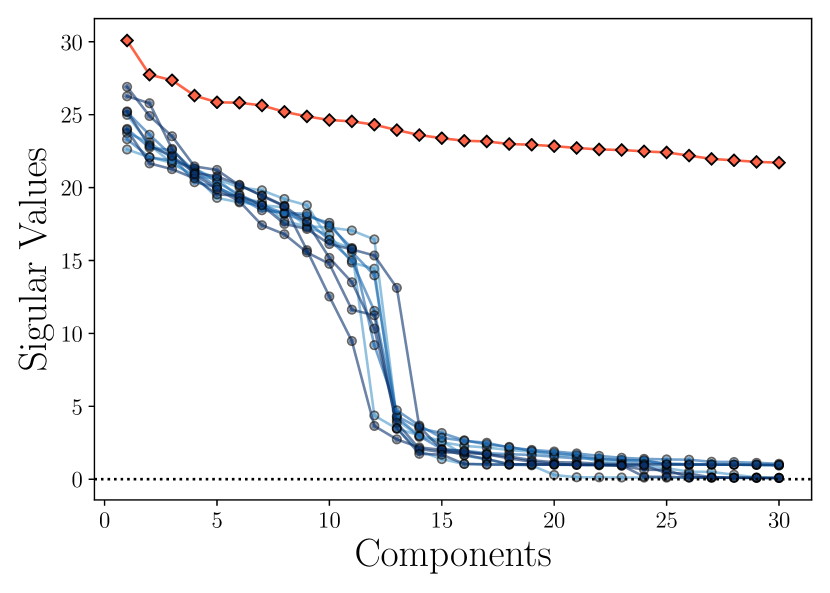

Second, the same closed-form coding rate can be derived as an approximation of if the data are assumed to be from a linear subspace. This can be shown by properly quantifying the singular value decomposition (SVD) of and constructing a lossy coding scheme for vectors in the subspace spanned by [MDH+07].

In our context, the closed-form expression is rather fundamental: it is the coding rate associated with an explicit and natural lossy coding scheme for data drawn from either a Gaussian distribution or a linear subspace. As we will see in the next chapter, this formula plays an important role in understanding the architecture of deep neural networks.

3.3.4 Clustering a Mixture of Low-Dimensional Gaussians

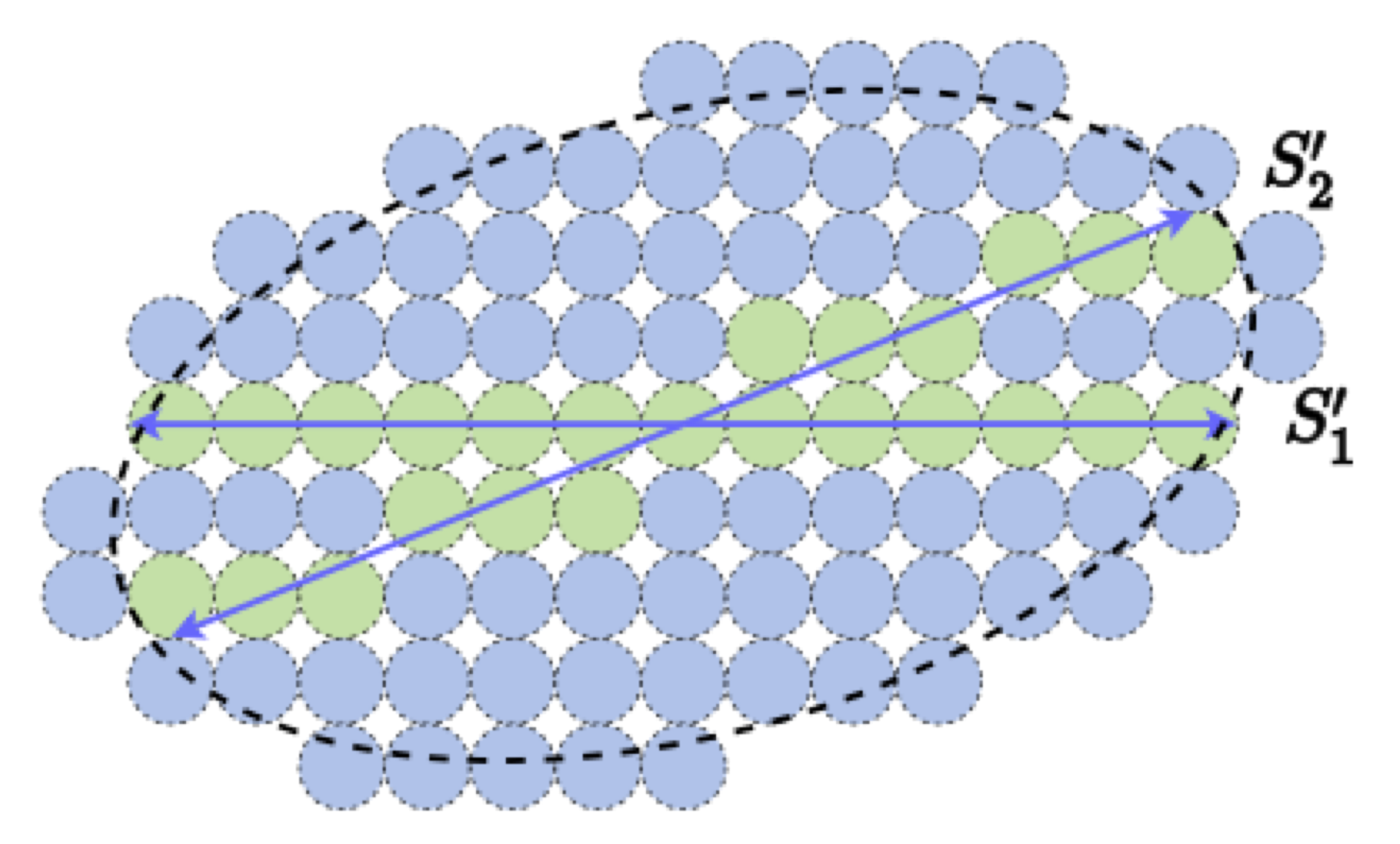

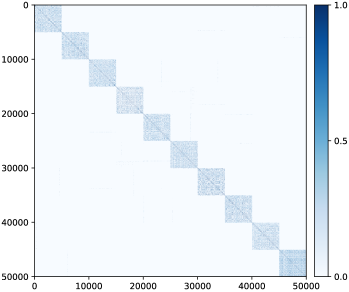

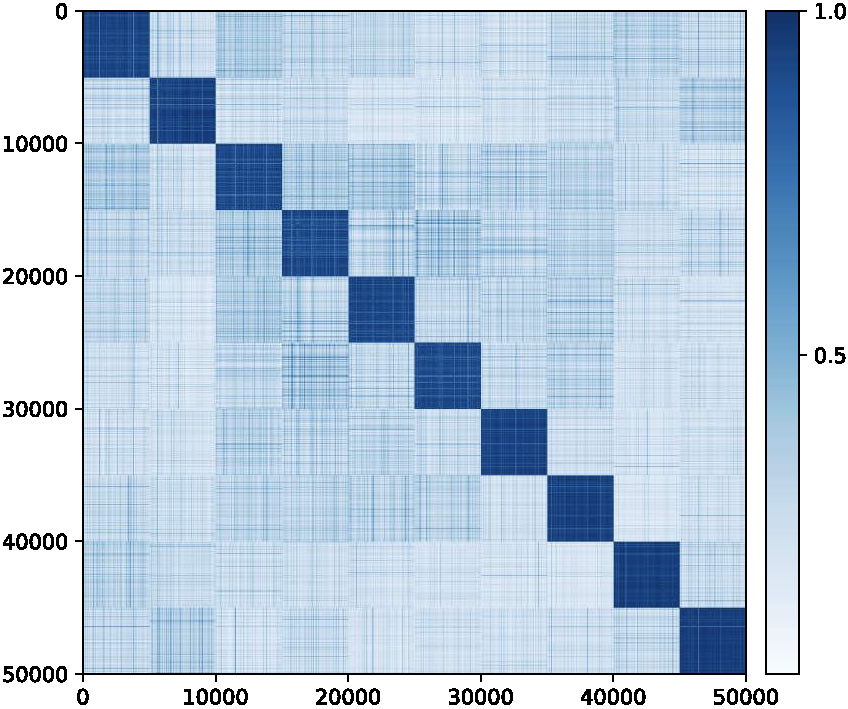

As we have discussed before, the given dataset often has low-dimensional intrinsic structures. Hence, encoding it as a general Gaussian would be very redundant. If we can identify those intrinsic structures in , we could design much better coding schemes that give much lower coding rates. Or equivalently, the codes used to encode such can be compressed. We will see that compression gives a unifying computable way to identify such structures. In this section, we demonstrate this important idea with the most basic family of low-dimensional structures: a mixture of (low-dimensional) Gaussians or subspaces.

Example 3.9.

Figure 3.12 shows an example in which the data are distributed around two subspaces (or low-dimensional Gaussians). If they are viewed and coded together as one single Gaussian, the associated discrete (lossy) code book, represented by all the blue balls, is obviously very redundant. We can try to identify the locations of the two subspaces, denoted by and , and design a code book that only covers the two subspaces, i.e., the green balls. If we can correctly partition samples in the data into the two subspaces: with and , where denotes a permutation matrix, then the resulting coding rate for the data will be much lower. This gives a more parsimonious, hence more desirable, representation of the data.

So, more generally speaking, if the data are drawn from any mixture of subspaces or low-dimensional Gaussians, it would be desirable to identify those components and encode the data based on the intrinsic dimensions of those components. It turns out that we do not lose much generality by assuming that the data are drawn from a mixture of low-dimensional Gaussians. This is because a mixture of Gaussians can closely approximate most general distributions [BDS16].

The clustering problem.

Now for this specific family of distributions, how can we effectively and efficiently identify those low-dimensional components from a set of samples

| (3.3.25) |

drawn from them? In other words, given the whole data set , we want to partition, or cluster, it into multiple, say , subsets:

| (3.3.26) |

where each subset consists of samples drawn from only one low-dimensional Gaussian or subspace and is a permutation matrix to indicate membership of the partition. Note that, depending the situation, the partition could be either deterministic or probabilistic. As shown in [MDH+07a], for mixture of Gaussians, probabilistic partition does not lead to a lower coding rate. So for simplicity, we here consider a deterministic partition only.

Clustering via lossy compression.

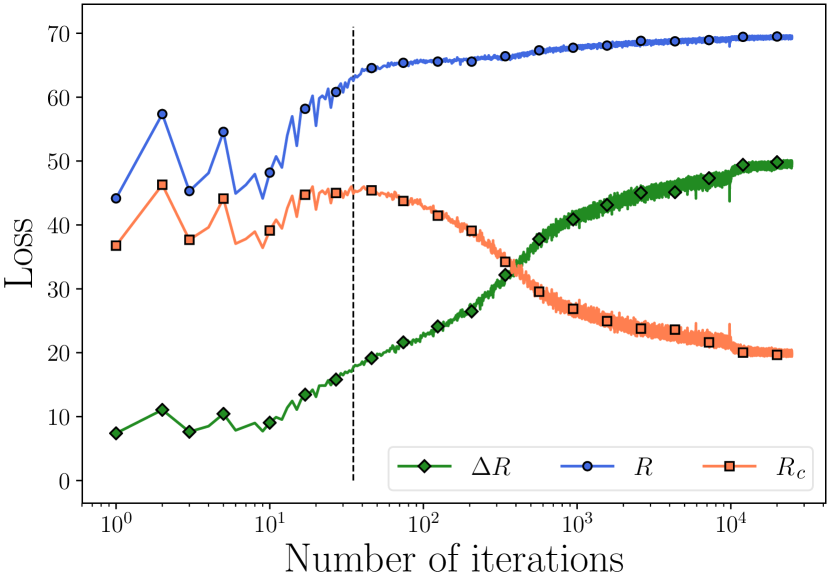

The main difficulty in solving the above clustering problem is that we normally do not know the number of clusters , nor do we know the dimension of each component. There has been a long history for the study of this clustering problem. The textbook [VMS16] gives a systematic and comprehensive coverage of different approaches to this problem. To find an effective approach to this problem, we first need to understand and clarify why we want to cluster. In other words, what exactly do we gain from clustering the data, compared with not to? How do we measure the gain? From the perspective of data compression, a correct clustering should lead to a more efficient encoding (and decoding) scheme.

For any given data set , there are already two obvious encoding schemes as the baseline. They represent two extreme ways to encode the data:

-

•

Simply view all the samples together drawn as from one single Gaussian. The associated coding rate is, as derived before, given by:

(3.3.27) -

•

Simply memorize all the samples separately by assigning a different number to each sample. The coding rate would be:

(3.3.28)

Note that either coding scheme can become the “optimal” solution for certain (extreme) choice of the quantization error :

-

1.

Lazy Regime: If we choose to be extremely large, all samples in can be covered by a single ball. The rate is .

-

2.

Memorization Regime: If is extremely small, every sample in is covered by a different -ball, hence the total is . The rate is .

Note that the first scheme corresponds to the scenario when one does not care about anything interesting about the distribution at all. One does not want to spare any bit for anything informative. We call this the “lazy regime.” The second scheme corresponds to the scenario when one wants to decode every sample with an extremely high precision. So one would better “memorize” every sample. We call this the “memorization regime.”

Example 3.10.

To see when the memorization regime is preferred or not, let us consider a number, say , of samples randomly distributed in a unit area on a 2D plane.191919Say the points are drawn by a Poisson process with density points per unit area. Imagine we try to design a lossy coding scheme with a fixed quantization error . This is equivalent to putting an -disc around each sample, as shown in Figure 3.13. When is small, the chance that all the discs overlap with each other is zero. A codebook of size is necessary and optimal in this case. When or the density reaches a certain critical value , with high probability all the discs start to overlap and connect into one cluster that covers the whole plane—this phenomenon is known as continuum “percolation” [Gil61, MM12]. When becomes larger than this value, the discs overlap heavily. The number of discs becomes very redundant because we only want to encode points on the plane up to the given precision . The number of discs needed to cover all the samples is much less than .202020In fact, there are efficient algorithms to find such a covering [BBF+01].

Both the lazy and memorization regimes are somewhat trivial and perhaps are of little theoretical or practical interest. Either scheme would be far from optimal when used to encode a large number of samples drawn from a distribution that has a compact and low-dimensional support. The interesting regime exists in between these two.

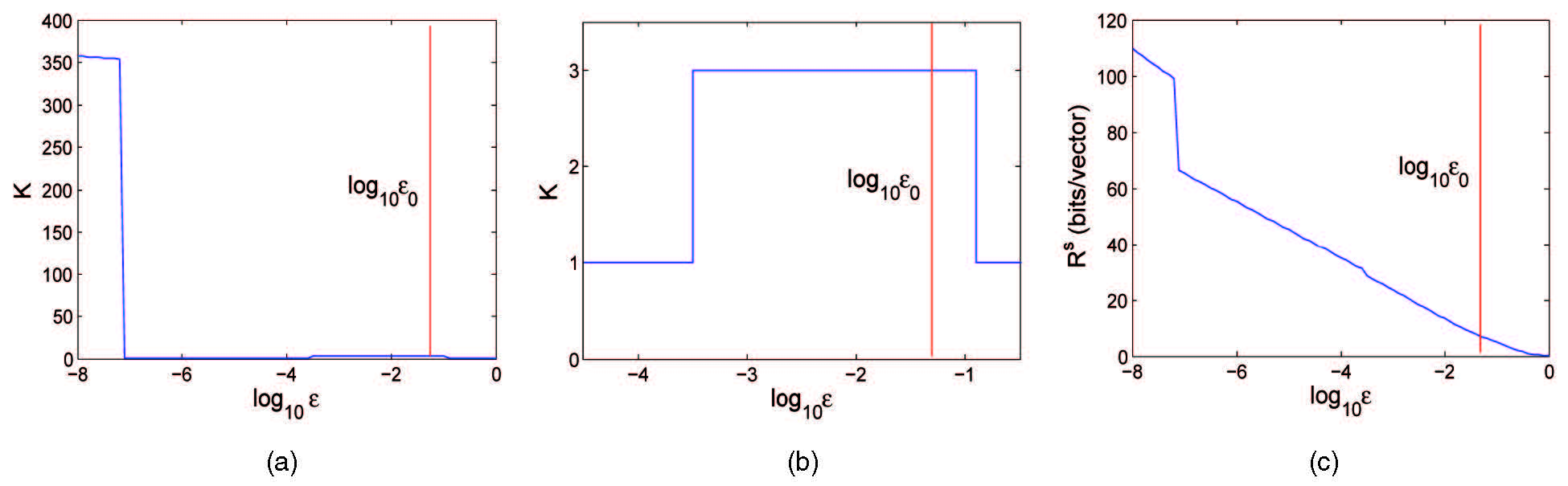

Example 3.11.

Figure 3.14 shows an example with noisy samples drawn from two lines and one plane in . As we notice from the plot (c) on the right, the optimal coding rate decreases monotonically as we increase , as anticipated from the property of the rate distortion function. The plots (a) and (b) show, when varying from very small (near zero) to very large (towards infinite), the optimal number of clusters when the coding rate is minimal. We can clearly see the lazy regime and the memorization regime on the two ends of the plots. But one can also notice in plot (b), when the quantization error is chosen to be around the level of the true noise variance , the optimal number of clusters is the “correct” number three that represents two planes and one subspace. We informally refer to this middle regime as the “generalization regime”. Notice that a sharp phase transition takes place between these regimes.212121So far, to our best knowledge, there is no rigorous theoretical justification for these phase transition behaviors.

From the above discussion and examples, we see that, when the quantization error relative to the sample density222222or the sample density relative to the quantization error is in a proper range, minimizing the lossy coding rate would allow us to uncover the underlying (low-dimensional) distribution of the sampled data. Hence, quantization, started as a choice of practicality, seems to be becoming necessary for learning a continuous distribution from its empirical distribution with finite samples. Although a rigorous theory for explaining this phenomenon remains elusive, here, for learning purposes, we care about how to exploit the phenomenon to design algorithms that can find the correct distribution.

Let us use the simple example shown in Figure 3.12 to illustrate the basic ideas. If one can partition all samples in into two clusters in and , with and samples respectively, then the associated coding rate would be232323We here ignore some overhead bits needed to encode the membership for each sample, say via the Huffman coding.

| (3.3.29) |

where we use to indicate membership of the partition. If the partition respects the low-dimensional structures of the distribution, in this case and belonging to the two subspaces respectively, then the resulting coding rate should be significantly smaller than the above two basic schemes:

| (3.3.30) |

In general, we can cast the clustering problem into an optimization problem that minimizes the coding rate:

| (3.3.31) |

Optimization strategies to cluster.

The remaining question is how we optimize the above coding rate objective to find the optimal clusters. There are three natural approaches to this objective:

-

1.

We may start with the whole set as a single cluster (i.e. the lazy regime) and then search (say randomly) to partition it so that it would lead to a smaller coding rate.

-

2.

Inversely, we may start with each sample as its own cluster (i.e. the memorization regime) and search to merge clusters that would result in a smaller coding rate.

-

3.

Alternatively, if we could represent (or approximate) the membership as some continuous parameters, we may use optimization methods such as gradient descent (GD).

The first approach is not so appealing computationally as the number of possible partitions that one needs to try is exponential in the number of samples. For example, the number of partitions of into two subsets of equal size is which explodes as becomes large. We will explore the third approach in the next Chapter 4. There, we will see how the role of deep neural networks, transformers in particular, is connected with the coding rate objective.

The second approach was originally suggested in the work of [MDH+07a]. It demonstrates the benefit of being able to evaluate the coding rate efficiently (say with an analytical form). With it, the (low-dimensional) clusters of the data can be found rather efficiently and effectively via the principle of minimizing coding length (MCL). Note that for a cluster with samples, the length of binary bits needed to encode all the samples in is given by:242424In fact, a more accurate estimate of the coding length is where the extra bits are used to encode the basis of the subspace [MDH+07a]. Here we omit this overhead for simplicity.

| (3.3.32) |

If we have two clusters and , if we want to code the samples as two separate clusters, the length of binary bits needed is

The last two terms are the number of bits needed to encode the memberships of samples according to the Huffman code.

Then, given any two separate clusters and , we can decide whether to merge them or not based on the difference between the two coding lengths:

| (3.3.33) |

is positive or negative and denotes the union of the sets of samples in and . If it is negative, it means the coding length would become smaller if we merge the two clusters into one. This simple fact leads to the following clustering algorithm proposed by [MDH+07a]:

Note that this algorithm is tractable as the total number of (pairwise) comparisons and merges is about . However, due to its greedy nature, there is no theoretical guarantee that the process will converge to the globally optimal clustering solution. Nevertheless, as reported in [MDH+07a], in practice, this seemingly simple algorithm works extremely well. The clustering results plotted in Figure 3.14 were actually computed by this algorithm.

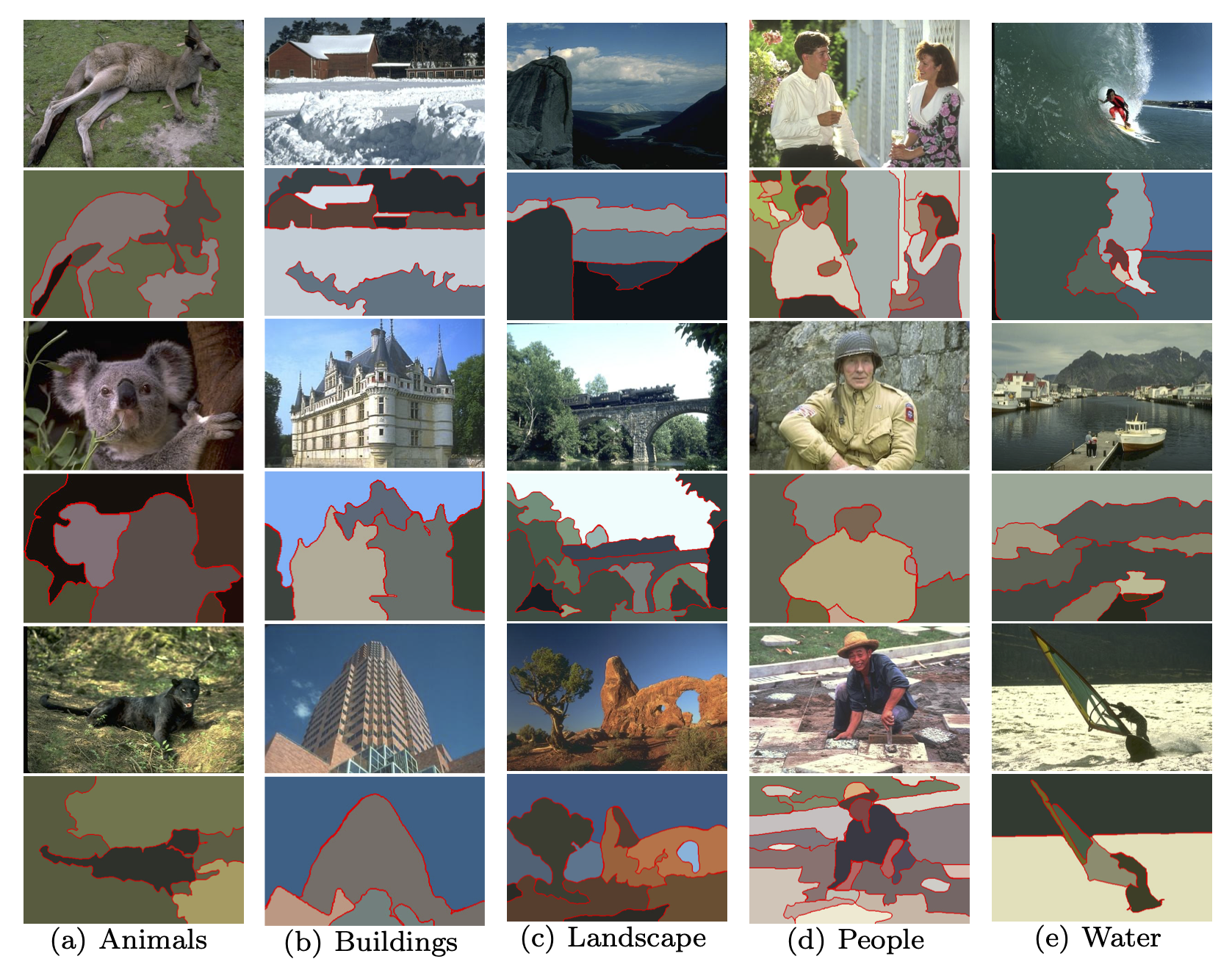

Example 3.12 (Image Segmentation).

The above measure of coding length and the associated clustering algorithm assume the data distribution is a mixture of (low-dimensional) Gaussians. Although this seems somewhat idealistic, the measure and algorithm can already be very useful and even powerful in scenarios when the model is (approximately) valid.

For example, a natural image typically consists of multiple regions with nearly homogeneous textures. If we take many small windows from each region, they should resemble samples drawn from a (low-dimensional) Gaussian, as illustrated in Figure 3.15. Figure 3.16 shows the results of image segmentation based on applying the above clustering algorithm to the image patches directly. More technical details regarding customizing the algorithm to the image segmentation problem can be found in [MRY+11].

3.4 Maximizing Information Gain

So far in this chapter, we have discussed how to identify a distribution with low-dimensional structures through the principle of compression. As we have seen from the previous two sections, computational compression can be realized through either the denoising operation or clustering. Figure 3.17 illustrates this concept with our favorite example.

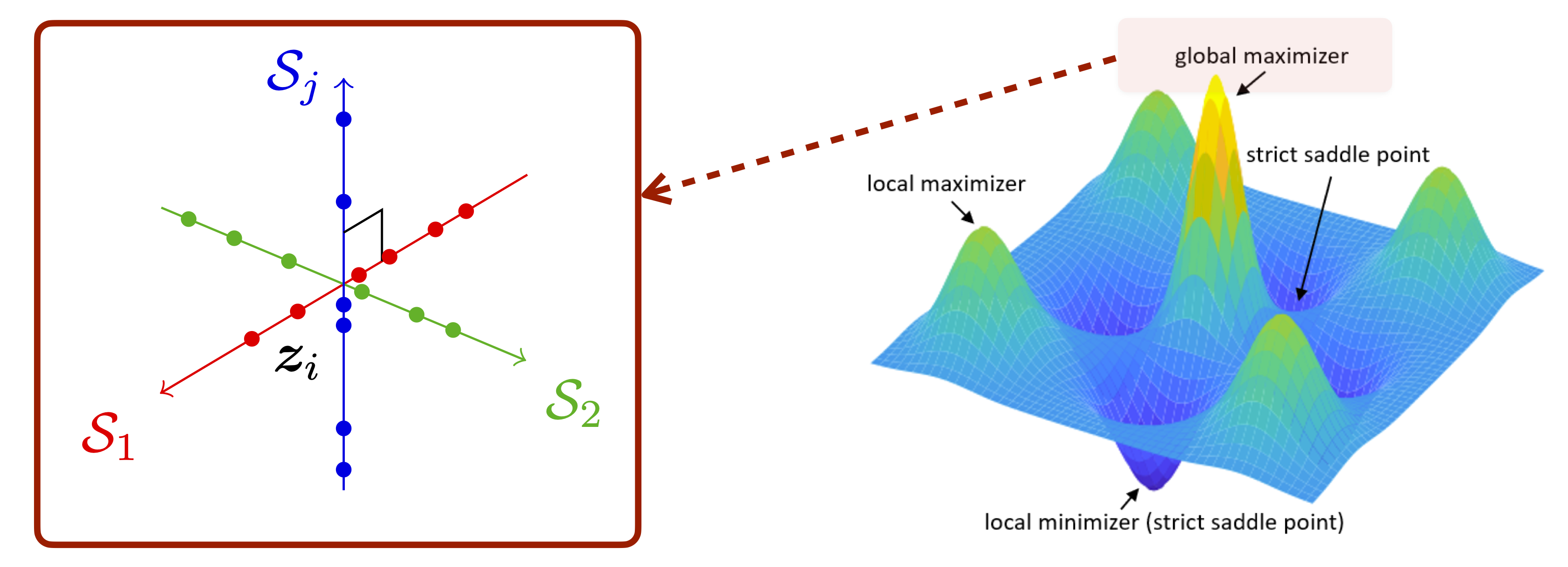

Of course, the ultimate goal for identifying a data distribution is to use it to facilitate certain subsequent tasks such as segmentation, classification, or generation (of images). Hence, how the resulting distribution is “represented” matters tremendously with respect to how information related to these subsequent tasks can be efficiently and effectively retrieved and utilized. This naturally raises a fundamental question: what makes a representation truly “good” for downstream use? In the following, we will explore the essential properties that a meaningful and useful representation should possess, and how these properties can be explicitly characterized and pursued via maximizing information gain.

How to measure the goodness of representations.

One may view a given dataset as samples of a random vector with a certain distribution in a high-dimensional space, say . Typically, the distribution of has a much lower intrinsic dimension than the ambient space. Generally speaking, learning a representation refers to learning a continuous mapping, say , that transforms to a so-called feature vector in another (typically lower-dimensional) space, say , where . It is hopeful that through such a mapping

| (3.4.1) |

the low-dimensional intrinsic structures of are identified and represented by in a more compact and structured way so as to facilitate subsequent tasks such as classification or generation. The feature can be viewed as a (learned) compact code for the original data , so the mapping is also called an encoder. The fundamental question of representation learning is

What is a principled and effective measure for the goodness of representations?

Conceptually, the quality of a representation depends on how well it identifies the most relevant and sufficient information of for subsequent tasks and how efficiently it represents this information. For a long time, it was believed and argued that the “sufficiency” or “goodness” of a learned feature representation should be defined in terms of a specific task. For example, just needs to be sufficient for predicting the class label in a classification problem. Below, let us start with the classic problem of image classification and argue why such a notion of a task-specific “representation” is limited and needs to be generalized.

3.4.1 Linear Discriminative Representations