Chapter 6 Inference with Low-Dimensional Distributions

- Bayesian Inference and Constrained Optimization

- Conditional Inference with a Known Data Distribution

- Conditional Inference with a Learned Data Representation

- Conditional Inference with Paired Data and Measurements

- Conditional Inference with Measurement Self-Consistency

- Summary and Notes

- Exercises and Extensions

“Mathematics is the art of giving the same name to different things.”

— Henri Poincaré

In the previous chapters of this book, we have studied how to effectively and efficiently learn a representation for a variable in the world with a distribution that has a low-dimensional support in a high-dimensional space. So far, we have mainly developed the methodology for learning representation and autoencoding in a general, distribution or task-agnostic fashion. With such a learned representation, one can already use it to perform some generic and basic tasks such as classification (if the encoding is supervised with the class) and generation of random samples that have the same distribution as the given data (say natural images or natural languages).

More generally, however, the universality and scalability of the theoretical and computational framework presented in this book has enabled us to learn the distribution of a variety of important real-world high-dimensional data such as natural languages, human poses, natural images, videos, and even 3D scenes. Once the intrinsically rich and low-dimensional structures of these real data can be learned and represented correctly, they start to enable a broad family of powerful, often seemingly miraculous, tasks. Hence, from here onwards, we will start to show how to connect and tailor the general methods presented in previous chapters to learn useful representations for specific structured data distributions and for many popular tasks in modern practice of machine intelligence.

6.1 Bayesian Inference and Constrained Optimization

Leveraging Low-dimensionality for Stable and Robust Inference.

Generally speaking, a good representation or autoencoding should enable us to utilize the learned low-dimensional distribution of the data and its representation for various subsequent classification, estimation, and generation tasks under different conditions. As we have alluded to earlier in Chapter 1 Section 1.2.2, the importance of the low-dimensionality of the distribution is the key for us to conduct stable and robust inference related to the data , as illustrated by the few simple examples in Figure 1.9, from incomplete, noisy, and even corrupted observations. As it turns out, the very same concept carries over to real-world high-dimensional data whose distributions have a low-dimensional support, such as natural images and languages.

Despite a dazzling variety of applications in the practice of machine learning with data such as languages, images, videos and many other modalities, almost all practical applications can be viewed as a special case of the following inference problem: given an observation that depends on , say

| (6.1.1) |

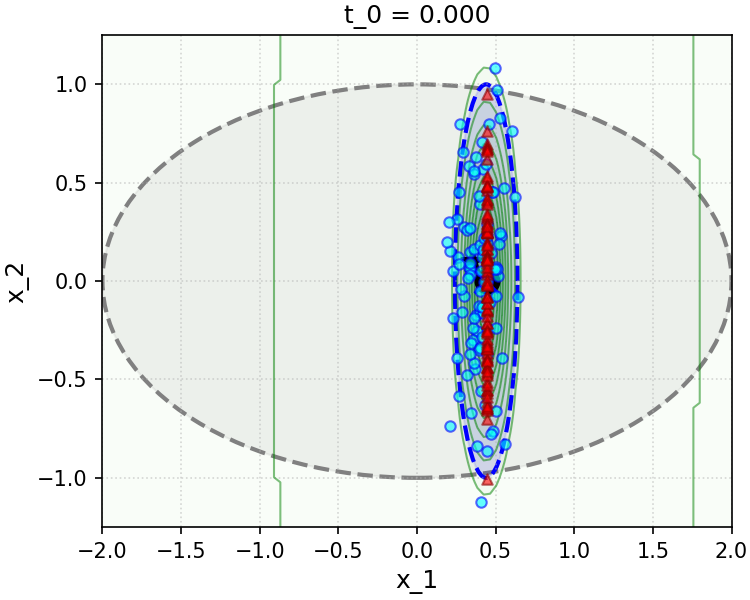

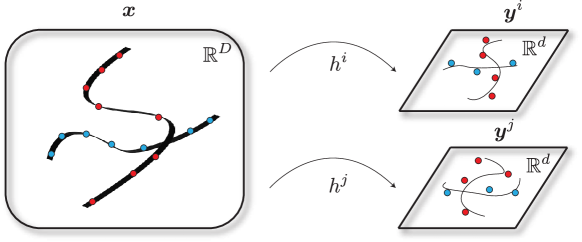

where represents measurements of a part of or certain observed attributes and represents some measurement noise and even (sparse) corruptions, solve the “inverse problem” of obtaining a most likely estimate of or generating a sample that is at least consistent with the observation . Figure 6.1 illustrates the general relationship between and .

![Figure 6.1 : Inference with low-dimensional distributions. This is the generic picture for this chapter: we have a low-dimensional distribution for 𝒙 ∈ ℝ D \bm{x}\in\mathbb{R}^{D} bold_italic_x ∈ blackboard_R start_POSTSUPERSCRIPT italic_D end_POSTSUPERSCRIPT (here depicted as a union of two 2 2 2 -dimensional manifolds in ℝ 3 \mathbb{R}^{3} blackboard_R start_POSTSUPERSCRIPT 3 end_POSTSUPERSCRIPT ) and a measurement model 𝒚 = h ( 𝒙 ) + 𝒘 ∈ ℝ d \bm{y}=h(\bm{x})+\bm{w}\in\mathbb{R}^{d} bold_italic_y = italic_h ( bold_italic_x ) + bold_italic_w ∈ blackboard_R start_POSTSUPERSCRIPT italic_d end_POSTSUPERSCRIPT . We want to infer various things about this model, including the conditional distribution of 𝒙 \bm{x} bold_italic_x given 𝒚 \bm{y} bold_italic_y , or the conditional expectation 𝔼 [ 𝒙 ∣ 𝒚 ] \mathbb{E}[\bm{x}\mid\bm{y}] blackboard_E [ bold_italic_x ∣ bold_italic_y ] , given various information about the model and (potentially finite) samples of either 𝒙 \bm{x} bold_italic_x or 𝒚 \bm{y} bold_italic_y .](x27.png)

Example 6.1 (Image Completion and Text Prediction).

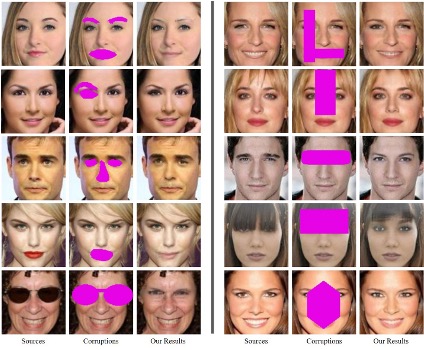

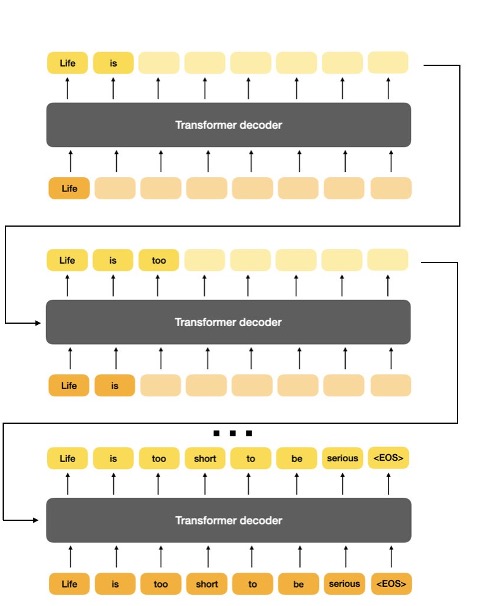

The popular natural image completion and natural language prediction are two typical tasks that require us to recover a full data from its partial observations , with parts of masked out and to be completed based on the rest. Figure 6.2 shows some examples of such tasks. In fact, it is precisely these tasks which have inspired how to train modern large models for text generation (such as GPT) and image completion (such as the masked autoencoder) that we will study in greater details later.

Statistical interpretation via Bayes’ rule.

Generally speaking, to accomplish such tasks well, we need to get ahold of the conditional distribution . If we had this, then we would be able to find the maximal likelihood estimate (prediction):

| (6.1.2) |

compute the conditional expectation estimate:

| (6.1.3) |

and sample from the conditional distribution:

| (6.1.4) |

Notice that from Bayes’ rule, we have

| (6.1.5) |

For instance, the maximal likelihood estimate can be computed by solving the following (maximal log likelihood) program:

| (6.1.6) |

say via gradient ascent:

| (6.1.7) |

Efficiently computing the conditional distribution naturally depends on how we learn and exploit the low-dimensional distribution of the data and the observation model that determines the conditional distribution .

Remark 6.1 (End-to-End versus Bayesian).

In the modern practice of data-driven machine learning, for certain popular tasks people often directly learn the conditional distribution or a (probabilistic) mapping or a regressor. Such a mapping is often modeled by some deep networks and trained end-to-end with sufficient paired samples . Such an approach is very different from the above Bayesian approach in which both the distribution of and the (observation) mapping are needed. The benefit of the Bayesian approach is that the learned distribution can facilitate many different tasks with varied observation models and conditions.

Geometric interpretation as constrained optimization.

As the support of the distribution of is low-dimensional, we may assume that there exists a function such that

| (6.1.8) |

such that is the low-dimensional support of the distribution . Geometrically, one natural choice of is the “distance function” to the support :111Notice that, in reality, we only have discrete samples on the support of the distribution. In the same spirit of continuation, through diffusion or lossy coding studied in Chapter 3, we may approximate the distance function as where is replaced by a covering of the samples with -balls.

| (6.1.9) |

Now given , to solve for , we can solve the following constrained optimization problem:

| (6.1.10) |

Using the method of augmented Lagrange multipliers, we can solve the following unconstrained program:

| (6.1.11) |

for some constant Lagrange multipliers . This is equivalent to the following program:

| (6.1.12) |

where can be viewed as a “mean” for the constraint function. As becomes large when enforcing the constraint via continuation222In the same spirit of continuation in Chapter 3 where we obtained better approximations of our distribution by sending , here we send . Larger values of will constrain to take smaller and smaller values at the optimum, meaning that the optimum lies within a smaller and smaller neighborhood of the support . Interestingly, the theory of Lagrange multipliers hints that, under certain benign conditions on and other terms in the objective, we only need to make large enough in order to ensure at the optimum, meaning that at finite penalty we get perfect approximation of the support. In general, we should have the intuition that plays the same role as ., becomes increasingly small.

The above program may be interpreted in two different ways. Firstly, one may view the first term as the conditional probability of given , and the second term as a probability density for :

| (6.1.13) |

Hence, solving the constrained optimization for the inverse problem is equivalent to conducting Bayes inference with the above probability densities. Hence solving the above program (6.1.12) via gradient ascent is equivalent to the above maximal likelihood estimate (6.1.7), in which the gradient takes the form:

| (6.1.14) |

where and are the Jacobians of and , respectively.

Secondly, notice that the above program (6.1.12) is equivalent to:

| (6.1.15) |

Due to the conspicuous quadratic form of the two terms, they can also be interpreted as certain “energy” functions. Such a formulation is often referred to as “Energy Minimization” in the machine learning literature.

Several representative practical settings for inference.

In practice, however, initial information about the distributions of and the relationship between and can be given in many different ways and forms. In general, they can mostly be categorized into four cases, which are, conceptually, increasingly more challenging:

-

•

Case 1: Both a model for the distribution of and the observation model are known, even with an analytical form. This is typically the case for many classic signal processing problems, such as signal denoising, the sparse vector recovery problem we saw in Chapter 2 and the low-rank matrix recovery problem to be introduced below.

-

•

Case 2: We do not have a model for the distribution but only samples of , and the observation model is known.333In the literature, this setting is sometimes referred to as the empirical Bayesian inference. A model for the distribution of needs to be learned, and subsequently the conditional distribution . Natural image completion or natural language completion (e.g., BERT and GPT) are typical examples of this class of problems.

-

•

Case 3: We only have the paired samples: of the two variables . The distributions of and and their relationship need to be learned from these paired sample data. For example, given many images and their captions, learning to conduct text-conditioned image generation is one such problem.

-

•

Case 4: We only have the samples of the observations , and the observation model needs to be known, at least in some parametric family . The distribution and need to be learned from , estimated from . For example, learning to render a new view from a sequence of calibrated or uncalibrated views is one such problem.

In this chapter, we will discuss general approaches to learn the desired distributions and solve the associated conditional estimation or generation for these cases, typically with a representative problem. Throughout the chapter, you should keep Figure 6.1 in mind.

6.2 Conditional Inference with a Known Data Distribution

Notice that in the setting we have discussed in previous Chapters, the autoencoding network is trained to reconstruct a set of samples of the random vector . This would allow us to regenerate samples from the learned (low-dimensional) distribution. In practice, the low-dimensionality of the distribution, once given or learned, can be exploited for stable and robust recovery, completion, or prediction tasks. That is, under rather mild conditions, one can recover from highly compressive, partial, noisy or even corrupted measures of of the kind:

| (6.2.1) |

where is typically an observation of that is of much lower dimension than and can be random noise or even sparse gross corruptions. This is a class of problems that have been extensively studied in the classical signal processing literature, for low-dimensional structures such as sparse vectors, low-rank matrices, and beyond. Interested readers may see [WM22] for a complete exposition of this topic.

Here to put the classic work in a more general modern setting, we illustrate the basic idea and facts through the arguably simplest task of data (and particularly image) completion. That is, we consider the problem of recovering a sample when parts of it are missing (or even corrupted). We want to recover or predict the rest of from observing only a fraction of it:

| (6.2.2) |

where represents a masking operation (see Figure 6.3 for an example).

In this section and the next, we will study the completion task under two different scenarios: One is when the distribution of the data of interest is already given apriori, even in a certain analytical form. This is the case that prevails in classic signal processing where the structures of the signals are assumed to be known, for example, band-limited, sparse or low-rank. The other is when only raw samples of are available and we need to learn the low-dimensional distribution from the samples in order to solve the completion task well. This is the case for the tasks of natural image completion or video frame prediction. As a precursor to the rest of the chapter, we start with the simplest case of image completion: when the image to be completed can be well modeled as a low-rank matrix. We will move on to increasingly more general cases and more challenging settings later.

Low-rank matrix completion.

The low-rank matrix completion problem is a classical problem for data completion when its distribution is low-dimensional and known. Consider a random sample of a matrix from the space of all matrices of rank . In general, we assume the rank of the matrix is

| (6.2.3) |

So it is clear that locally the intrinsic dimension of the space of all matrices of rank is much lower than the ambient space .

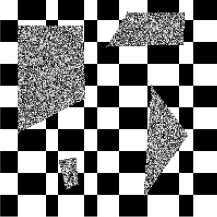

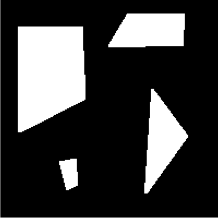

Now, let indicate a set of indices of observed entries of the matrix . Let the observed entries be:

| (6.2.4) |

The remaining entries supported on are unobserved or missing. The problem is whether we can recover from the missing entries of correctly and efficiently. Figure 6.3 shows one example of completing such a matrix.

Notice that the fundamental reason why such a matrix can be completed is that columns and rows of the matrix are highly correlated and they all lie on a low-dimensional subspace. For the example shown in Figure 6.3, the dimension or the rank of the matrix completed is only two. Hence the fundamental idea to recover such a matrix is to seek a matrix that has the lowest rank among all matrices that have entries agreeing with the observed ones:

| (6.2.5) |

This is known as the low-rank matrix completion problem. See [WM22] for a full characterization of the space of all low-rank matrices. As the rank function is discontinuous and rank minimization is in general an NP-hard problem, we would like to relax it with something easier to optimize.

Based on our knowledge about compression from Chapter 3, we could promote the low-rankness of the recovered matrix by enforcing the lossy coding rate (or the volume spanned by ) of the data in to be small:

| (6.2.6) |

The problem can viewed as a continuous relaxation of the above low-rank matrix completion problem (6.2.5) and it can be solved via gradient descent. One can show that the gradient descent operator for the objective is precisely minimizing a close surrogate of the rank of the matrix .

The rate distortion function is a nonconvex function, and its gradient descent does not always guarantee finding the globally optimal solution. Nevertheless, since the underlying structure sought for is piecewise linear, the rank function admits a rather effective convex relaxation: the nuclear norm—the sum of all singular values of the matrix . As shown in the compressive sensing literature, under fairly broad conditions,444Typically, such conditions specify the necessary and sufficient amount of entries needed for the completion to be computationally feasible. These conditions have been systematically characterized in [WM22]. the matrix completion problem (6.2.5) can be effectively solved by the following convex program:

| (6.2.7) |

where the nuclear norm is the sum of singular values of . In practice, we often convert the above constrained convex optimization program to an unconstrained one:

| (6.2.8) |

for some properly chosen . Interested readers may refer to [WM22] for how to develop algorithms that can solve the above programs efficiently and effectively. Figure 6.3 shows a real example in which the matrix is actually recovered by solving the above program.

Further extensions.

It has been shown that images (or more accurately textures) and 3D scenes with low-rank structures can be very effectively completed via solving optimization programs of the above kind, even if there is additional corruption and distortion [ZLG+10, LRZ+12, YZB+23]:

| (6.2.9) |

where is some unknown nonlinear distortion of the image and is an unknown matrix that models some (sparse) occlusion and corruption. Again, interested readers may refer to [WM22] for a more detailed account.

6.3 Conditional Inference with a Learned Data Representation

In the previous subsection, the reason we can infer from the partial observation is because (support of) the distribution of is known or specified apriori, say as the set of all low-rank matrices. For many practical datasets, we do not have their distribution in an analytical form like the low-rank matrices, say the set of all natural images. Nevertheless, if we have sufficient samples of the data , we should be able to learn its low-dimensional distribution first and leverage it for future inference tasks based on an observation . In this section, we assume the observation model is given and known. We will study the case when is not explicitly given in the next section.

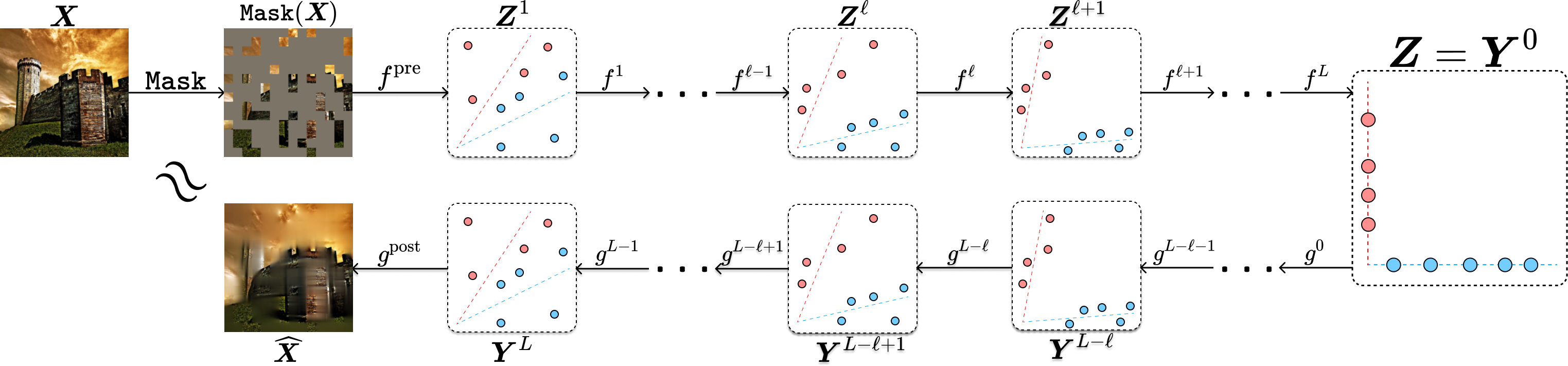

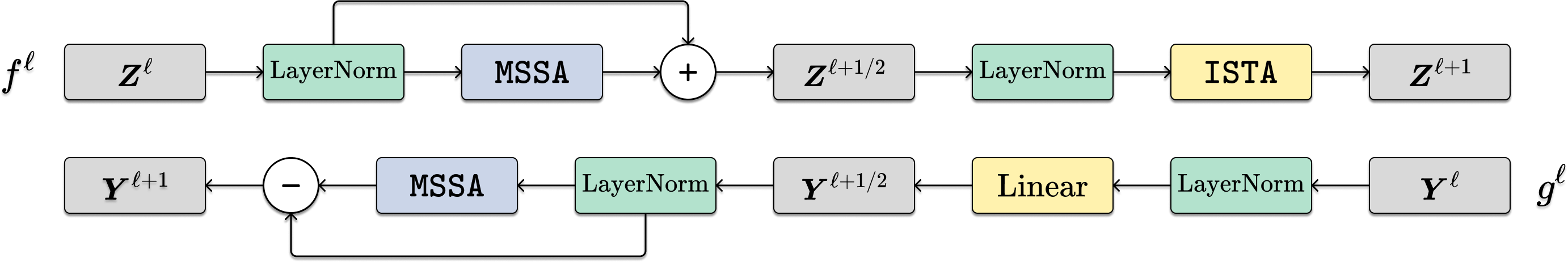

6.3.1 Image Completion with Masked Auto-Encoding

For a general image such as the one shown on the left of Figure 6.4, we can no longer view it as a low-rank matrix. However, humans still demonstrate remarkable ability to complete a scene and recognize familiar objects despite severe occlusion. This suggests that our brain has learned the low-dimensional distribution of natural images and can use it for completion, and hence recognition. However, the distribution of all natural images is not as simple as a low-dimensional linear subspace. Hence a natural question is whether we can learn the more sophisticated distribution of natural images and use it to perform image completion?

One empirical approach to the image completion task is to find an encoding and decoding scheme by solving the following masked autoencoding (MAE) program that minimizes the reconstruction loss:

| (6.3.1) |

Unlike the matrix completion problem which has a simple underlying structure, we should no longer expect that the encoding and decoding mappings admit simple closed forms or the program can be solved by explicit algorithms.

For a general natural image, we can no longer assume that its columns or rows are sampled from a low-dimensional subspace or a low-rank Gaussian. However, it is reasonable to assume that the image consists of multiple regions. Image patches in each region are similar and can be modeled as one (low-rank) Gaussian or subspace. Hence, to exploit the low-dimensionality of the distribution, the objective of the encoder is to transform to a representation :

| (6.3.2) |

such that the distribution of can be well modeled as a mixture of subspaces, say , such that the rate reduction is maximized while the sparsity is minimized:

| (6.3.3) |

where the functions and are defined in (4.2.2) and (4.2.3), respectively.

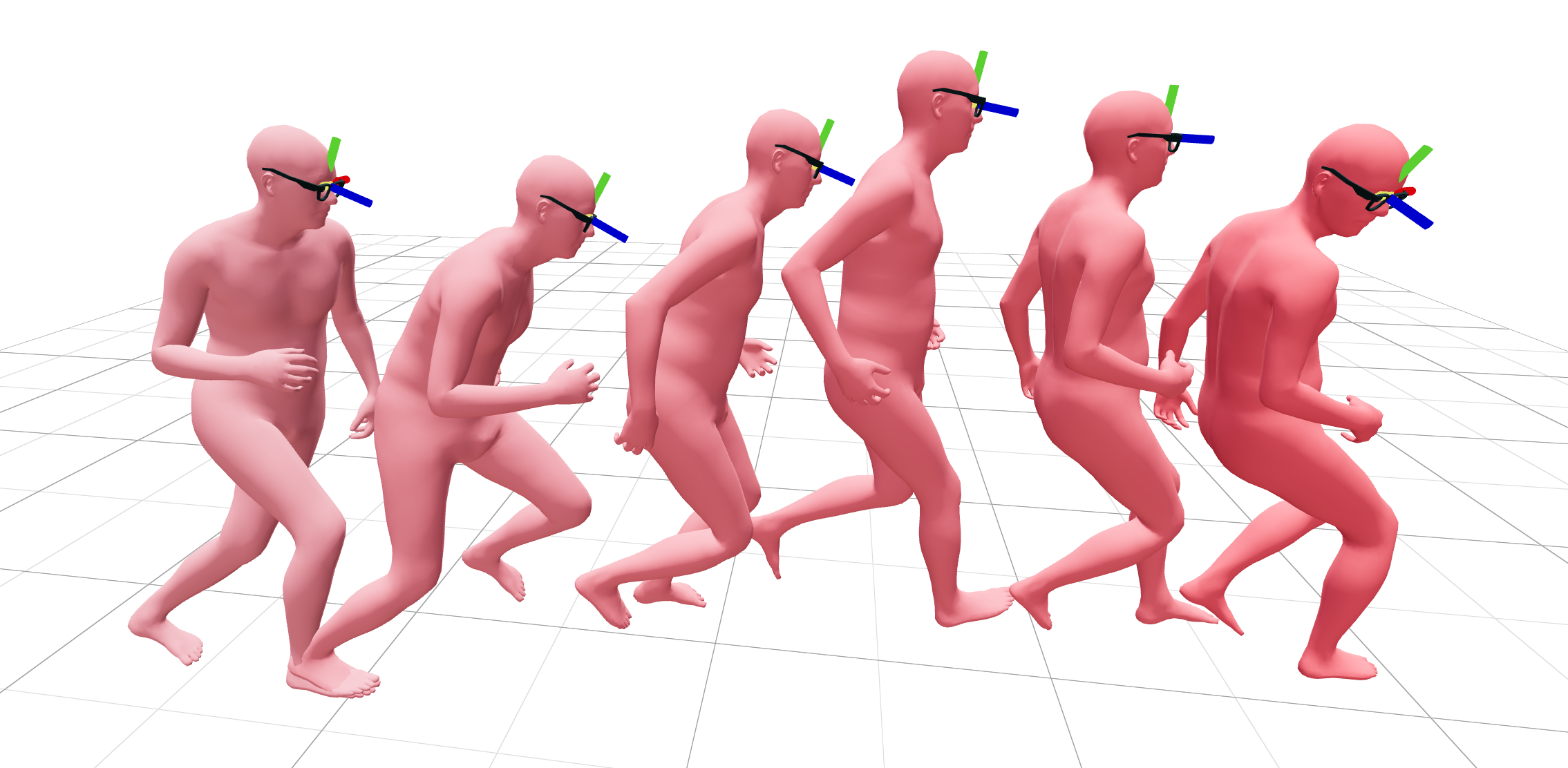

As we have shown in the previous Chapter 4, the encoder that minimizes the above objective can be constructed as a sequence of transformer-like operators. As shown in the work of [PBW+24], the decoder can be viewed and hence constructed explicitly as the inverse process of the encoder . Figure 6.5 illustrates the overall architectures of both the encoder and the corresponding decoder at each layer. The parameters of the encoder and decoder can be learned by optimizing the reconstruction loss (6.3.1) via gradient descent.

Figure 6.6 shows some representative results of the thus-designed masked auto-encoder. More implementation details and results of the masked autoencoder for natural image completion can be found in Chapter 7.

![Figure 6.6 : Autoencoding visualizations of CRATE-Base and ViT-MAE-Base [ HCX+22 ] with 75% patches masked. We observe that the reconstructions from CRATE-Base are on par with the reconstructions from ViT-MAE-Base, despite using < 1 / 3 <1/3 < 1 / 3 of the parameters.](x28.png)

6.3.2 Conditional Sampling with Measurement Matching

The above (masked) autoencoding problem aims to generate a sample image that is consistent with certain observations or conditions. But let us examine the approach more closely: given the visual part of an image , we try to estimate the masked part . For realizations of the random variable , let

be the conditional distribution of given . It is easy to show that the optimal solution to the MAE formulation (6.3.1) is given by the conditional expectation:

| (6.3.4) |

In general, however, this expectation may not even lie on the low-dimensional distribution of natural images! This partially explains why some of the recovered patches in Figure 6.6 are a little blurry.

For many practical purposes, we would like to learn (a representation of) the conditional distribution , or equivalently , and then get a clear (most likely) sample from this distribution directly. Notice that, when the distribution of is low-dimensional, it is possible that if a sufficient part of , , is observed, it fully determines and hence the missing part . In other words, the distribution is a generalized function—if is fully determined by it is the delta function, and more generally one of its exotic cousins.

Hence, instead of solving the completion task as a conditional estimation problem, we should address it as a conditional sampling problem. To that end, we should first learn the (low-dimensional) distribution of all natural images . If we have sufficient samples of natural images, we can learn the distribution via a denoising process described in Chapter 3. Then the problem of recovering from its partial observation becomes a conditional generation problem – to sample the distribution conditioned on the observation.

![Figure 6.7 : Sampling visualizations from models trained via ambient diffusion [ DSD+23a ] with 80% of the pixels masked. Using a similar ratio of masked pixels as in Figure 6.6 , the ambient diffusion sampling algorithm recovers a much sharper image than the blurry image recovered by the MAE-based method. The former method samples from the distribution of natural images, while the latter approximates the conditional expectation (i.e., average) of this distribution given the observation; this averaging causes the blurriness.](chapters/chapter6/figs/ambient_diffusion.png)

General linear measurements.

In fact, we may even consider recovering from a more general linear observation model:

| (6.3.5) |

where is a linear operator on matrix space555i.e., if we imagine unrolling into a long vector then takes the role of a matrix on -space and . The masking operator in the image completion task is one example of such a linear model. Then it has been shown by [DSD+23] that

| (6.3.6) |

satisfies the condition that:

| (6.3.7) |

Notice that in the special case when is of full column rank, we have . Hence, in the more general case, it has been suggested by [DSD+23] that one could still use the so obtained to replace the in the normal denoising process for :

| (6.3.8) |

This usually works very well in practice, say for many image restoration tasks, as shown in [DSD+23]. Compared to the blurry images recovered from MAE, the images recovered by the above method are much sharper as it leverages a learned distribution of natural images and samples a (sharp) image from the distribution that is consistent with the measurement, as shown in Figure 6.7 (cf Figure 6.6).

General nonlinear measurements.

To generalize the above (image) completion problems and make things more rigorous, we may consider that a random vector is partially observed through a more general observation function:

| (6.3.9) |

where usually stands for some random measurement noise, say of a Gaussian distribution . It is easy to see that, for and so related, their joint distribution is naturally nearly degenerate if the noise is small. To a large extent, we may view as a noisy version of a hypersurface defined by the function in the joint space . Practically speaking, we will consider a setting more akin to masked autoencoding than to pure matrix completion, where we always have access to a corresponding clean sample for every observation we receive.666In some more specialized applications, in particular in scientific imaging, it is of interest to be able to learn to generate samples from the posterior without access to any clean/ground-truth samples of . We give a brief overview of methods for this setting in the end-of-chapter notes.

Like image/matrix completion, we are often faced with a setting where denotes a degraded or otherwise “lossy” observation of the input . This can manifest in quite different forms. For example, in various scientific or medical imaging problems, the measured data may be a compressed and corrupted observation of the underlying data ; whereas in 3D vision tasks, may represent an image captured by a camera of a physical object with an unknown (low-dimensional) pose . Generally, by virtue of mathematical modeling (and, in some cases, co-design of the measurement system), we know and can evaluate it on any input, and we can exploit this knowledge to help reconstruct and sample .

At a technical level, we want the learned representation of the data to facilitate us to sample the conditional distribution , also known as the posterior, effectively and efficiently. More precisely, write to denote a realization of the random variable . We want to generate samples such that:

| (6.3.10) |

Recall that in Section 3.2, we have developed a natural and effective way to produce unconditional samples of the data distribution . The ingredients are the denoisers , or their learned approximations , for different levels of noisy observations (and for their realizations) under Gaussian noise , and with a choice of times at which to perform the iterative denoising, starting from (recall Equation 3.2.66).777Recall from our discussion in Section 3.2.2 that a few small improvements to this basic iterative denoising scheme are sufficient to bring competitive practical performance. For clarity as we develop conditional sampling, we will focus here on the simplest instantiation. We could directly apply this scheme to generate samples from the posterior if we had access to a dataset of samples for each realization of , by generating noisy observations and training denoisers to approximate , the mean of the posterior under the noisy observation (see Figure 6.8(a)). However, performing this resampling given only paired samples from the joint distribution (say by binning the samples over values of ) requires prohibitively many samples for high-dimensional data, and alternate approaches explicitly or implicitly rely on density estimation, which similarly suffers from the curse of dimensionality.

Fortunately, it turns out that this is not necessary. Consider the alternate statistical dependency diagram in Figure 6.8(b), which corresponds to the random variables in the usual denoising-diffusion process, together with the measurement . Because our assumed observation model (6.3.9) implies that and are independent conditioned on , we have for any realization of

| (6.3.11) |

Above, the first line recognizes an equivalence between the distributions arising in Figure 6.8 (a,b); the second line applies this together with conditional independence of and given ; the third line uses the definition of conditional probability; and the final line marginalizes over . Thus, the denoisers from the conceptual posterior sampling process are equal to , which we can learn solely from paired samples , and by Tweedie’s formula (Theorem 3.3), we can express these denoisers in terms of the score function of , which, by Bayes’ rule, satisfies

| (6.3.12) |

Recall that the density of is given by , where denotes the standard Gaussian density with zero mean and covariance and denotes convolution. This is nothing but the unconditional score function obtained from the standard diffusion training that we developed in Section 3.2! The conditional score function then satisfies, for any realization of ,

| (6.3.13) |

giving (by Tweedie’s formula) our proposed denoisers as

| (6.3.14) |

The resulting operators are interpretable as a corrected version of the unconditional denoiser for the noisy observation, where the correction term (the so-called “measurement matching” term) enforces consistency with the observations . The reader should take care to note to which argument the gradient operators are applying in the above score functions in order to fully grasp the meaning of this operator.

The key remaining issue in making this procedure computational is to prescribe how to compute the measurement matching correction, since in general we do not have a closed-form expression for the likelihood except for when . Before taking up this problem, we discuss an illustrative concrete example of the entire process, continuing from those we have developed in Section 3.2.

Example 6.2.

Consider the case where the data distribution is Gaussian with mean and covariance , i.e., . Assume that is nonzero. Moreover, in the measurement model (6.3.9), suppose we obtain linear measurements of with independent Gaussian noise, where and with independent of . Then , where is independent of and is the unique positive square root of the covariance matrix , and after some algebra, we can then write

By independence, we have that is jointly Gaussian, which means that is also jointly Gaussian, as the affine image of a jointly Gaussian vector. Its covariance matrix is given by

Now, we apply the fact that conditioning a random vector with joint Gaussian distribution on a subset of coordinates is again a Gaussian distribution (Exercise 3.2). By this, we obtain that

| (6.3.15) |

By the equivalence we have derived above, we get by another application of Exercise 3.2

| (6.3.16) |

The functional form of this denoiser is quite simple, but it carries an unwieldy dependence on the problem data , , , and . We can gain further insight into its behavior by comparing it with Equation 6.3.14. We have as usual

| (6.3.17) |

which is rather simple—suggesting that the measurement matching term is rather complicated. To confirm this, we can calculate the likelihood directly using the following expression for the joint distribution of :

| (6.3.18) |

where independent of the other Gaussians. This is again a jointly Gaussian distribution; restricting to only the final two rows, we have the covariance

Another application of Exercise 3.2 then gives us

| (6.3.19) |

Now notice that . So, by the chain rule,

| (6.3.20) |

This gives us a more interpretable decomposition of the conditional posterior denoiser (6.3.16): following Equation 6.3.14, it is the sum of the unconditional posterior denoiser (6.3.17) and the measurement matching term (6.3.20). We can further analyze the measurement matching term. Notice that

| (6.3.21) |

If we let denote an eigenvalue decomposition of , where are the columns of , we can further write

| (6.3.22) | ||||

| (6.3.23) |

Then for any eigenvalue of equal to zero, the corresponding summand is zero; and writing for the smallest positive eigenvalue of (it has at least one positive eigenvalue, by assumption), we have (in a sense that can be made quantitatively precise) that whenever , it holds

| (6.3.24) |

So, when , we have the approximation

| (6.3.25) |

The righthand side of this approximation is equal to . So we have in turn

| (6.3.26) |

Equation 6.3.26 is, of course, a direct consequence of our calculations above. However, notice that if we directly interpret this approximation, it is ab initio tractable: the likelihood is a simple Gaussian distribution centered at the observation, and the approximation to the measurement matching term that we arrive at can be interpreted as simply evaluating the log-likelihood at the conditional expectation , then taking gradients with respect to (and backpropagating through the conditional expectation, which is given here by Equation 6.3.17). Nevertheless, note that the approximation in Equation 6.3.26 requires , and that it is never accurate in general when this condition does not hold, even in this Gaussian setting.

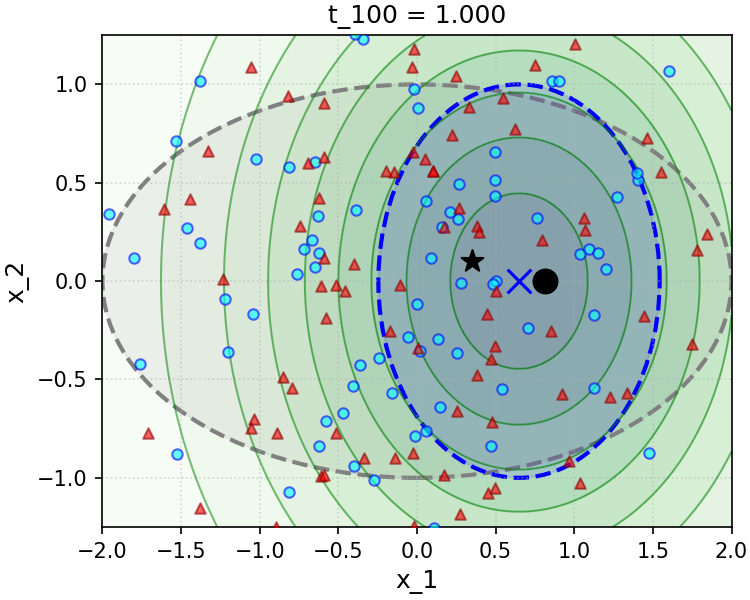

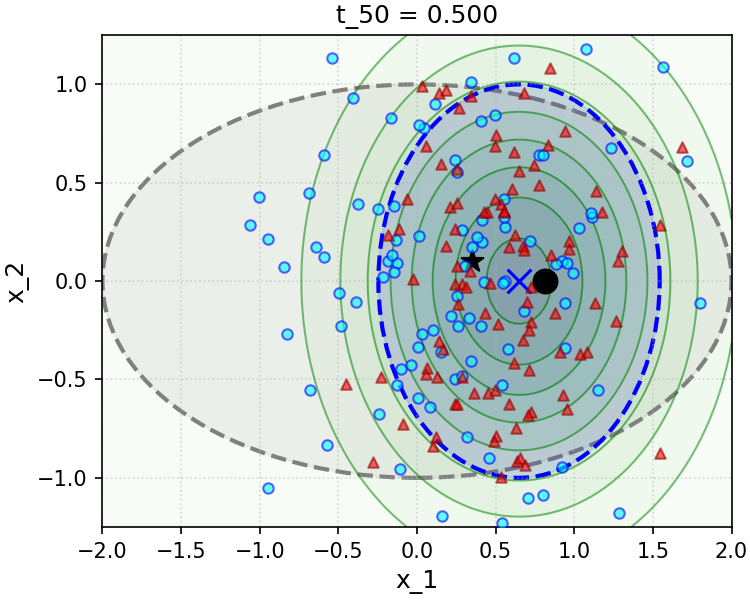

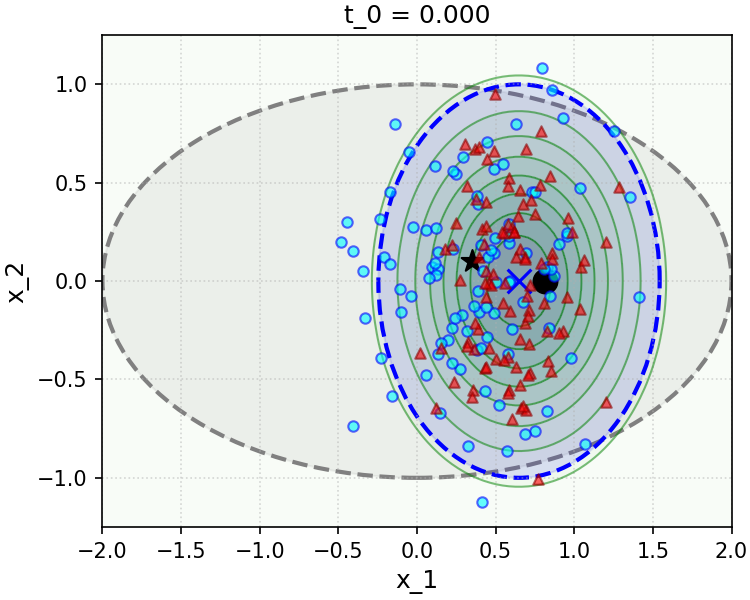

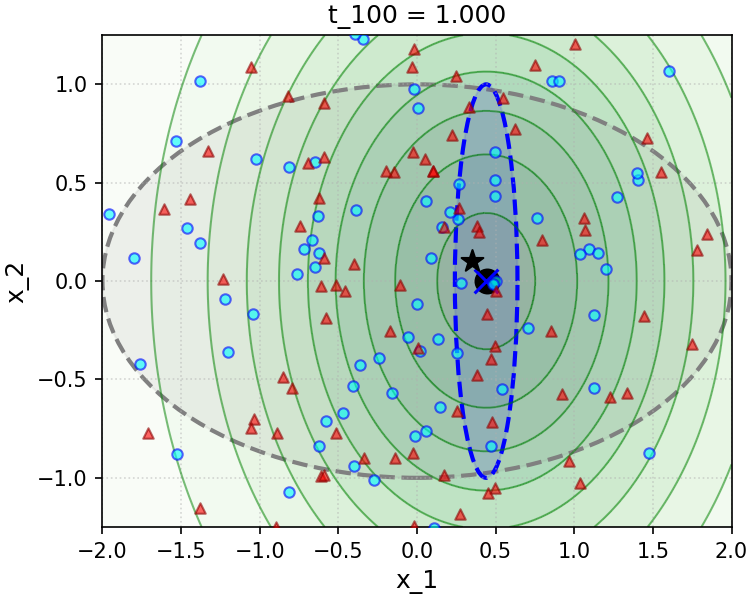

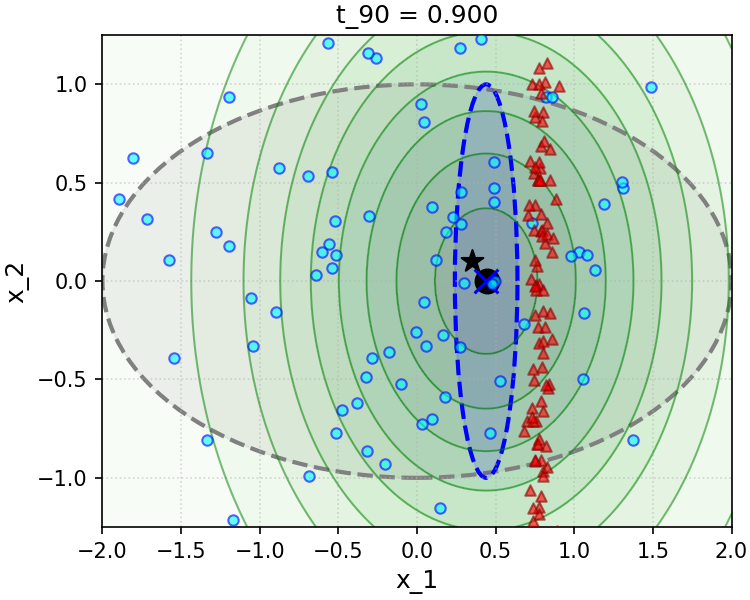

To gain insight into the effect of the convenient approximation (6.3.26), we implement and simulate a simple numerical experiment in the Gaussian setting in Figure 6.9. The sampler we implement is a direct implementation of the simple scheme (3.2.66) we have developed in Chapter 3 and recalled above, using the true conditional posterior denoiser, i.e. Equation 6.3.16 (top row of Figure 6.9), and the convenient approximation to this denoiser made with the decomposition (6.3.14), the posterior denoiser (6.3.17), and the measurement matching approximation (6.3.26) (bottom row of Figure 6.9). We see that even in the simple Gaussian setting, the approximation to the measurement matching term we have made is not without its drawbacks—specifically, at small noise levels , it leads to rapid collapse of the variance of the sampling distribution along directions that are parallel to the rows of the linear measurement operator , which cannot be corrected by later iterations of sampling. We can intuit this from the approximation (6.3.26) and the definition of the denoising iteration (3.2.66), given Equation 6.3.14: for , early steps of sampling effectively take gradient descent steps with a very large step size on the likelihood, via Equation 6.3.26, which leads the sampling distribution to get “stuck” in a collapsed state.

Example 6.2 suggests a convenient approximation for the measurement matching term (6.3.26), which can be made beyond the Gaussian setting of the example. To motivate this approximation in greater generality, notice that by conditional independence of and given , we can write

| (6.3.27) |

Formally, when the posterior is a delta function centered at its mean , the approximation (6.3.26) is exact. More generally, when the posterior is highly concentrated around its mean, the approximation (6.3.26) is accurate. This holds, for example, for sufficiently small , which we saw explicitly in the Gaussian setting of Example 6.2. Although the numerical simulation in Figure 6.9 suggests that this approximation is not without its caveats in certain regimes, it has proved to be a reliable baseline in practice, after being proposed by Chung et al. as “Diffusion Posterior Sampling” (DPS) [CKM+23]. In addition, there are even principled and generalizable approaches to improve it by incorporating better estimates of the posterior variance (which turn out to be exact in the Gaussian setting of Example 6.2), which we discuss further in the end-of-chapter summary.

Thus, with the DPS approximation, we arrive at the following approximation for the conditional posterior denoisers , via Equation 6.3.14:

| (6.3.28) |

And, for a neural network or other model trained as in Section 3.2 to approximate the denoisers for each , we arrive at the learned conditional posterior denoisers

| (6.3.29) |

Note that the approximation (6.3.28) is valid for arbitrary forward models in the observation model (6.3.9), including nonlinear , and even to arbitrary noise models for which a clean expression for the likelihood is known. Indeed, in the case of Gaussian noise, we have

| (6.3.30) |

Hence, evaluating the righthand side of (6.3.29) requires only

-

1.

A pretrained denoiser for the data distribution (of , learned as in Section 3.2 via Algorithm 3.2;

-

2.

Forward and backward pass access to the forward model for the measurements (6.3.9);

-

3.

A forward and backward pass through , which can be evaluated efficiently using (say) backpropagation.

Combining this scheme with the basic implementation of unconditional sampling we developed in Section 3.2, we obtain a practical algorithm for conditional sampling of the posterior given measurements following (6.3.9). Algorithm 6.1 records this scheme for the case of Gaussian observation noise with known standard deviation , with minor modifications to extend to a general noising process, as in Equation 3.2.69 and the surrounding discussion in Chapter 3 (our discussion above made the simplifying choices , , and , as for Equation 3.2.66 in Section 3.2).

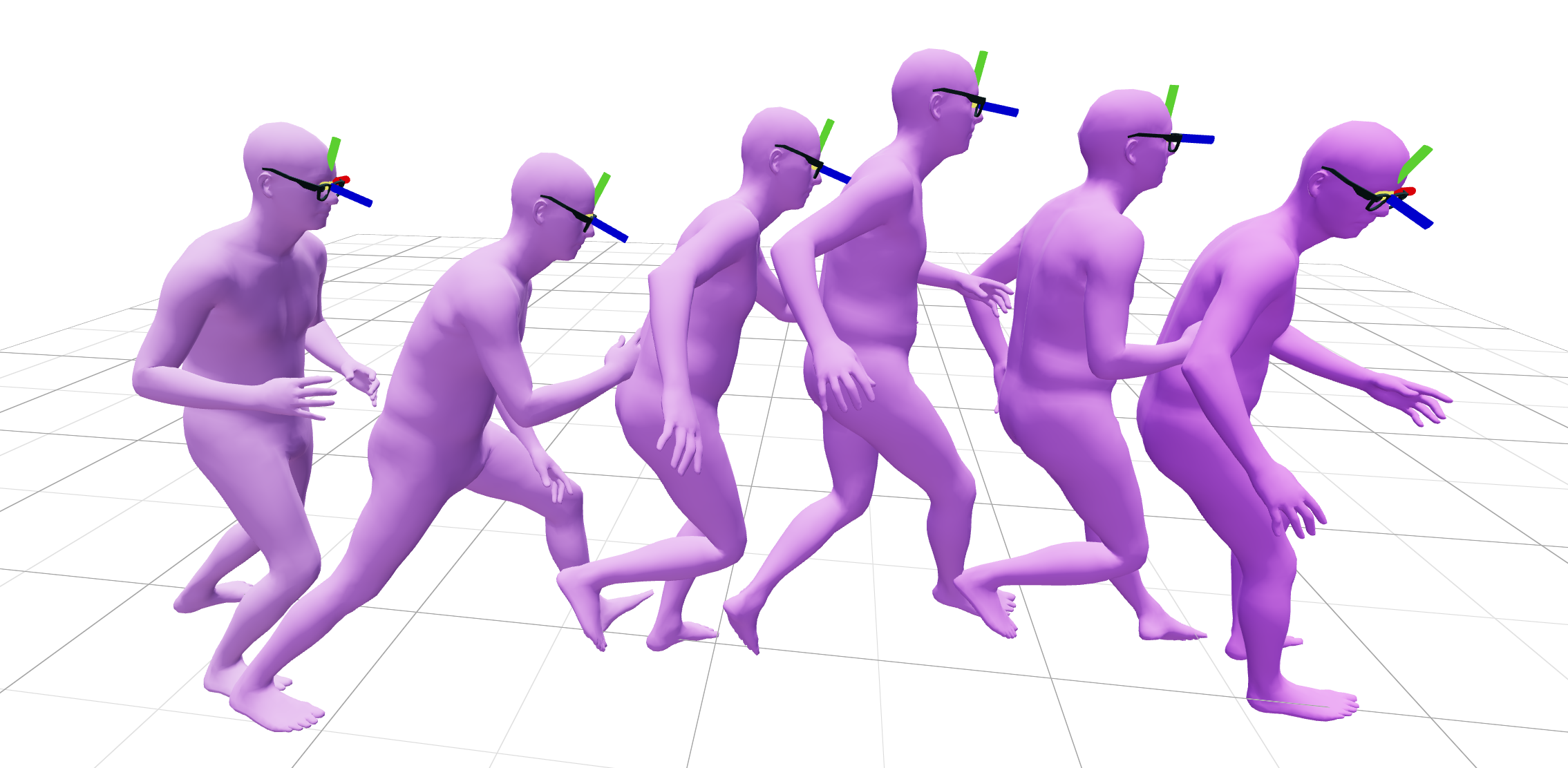

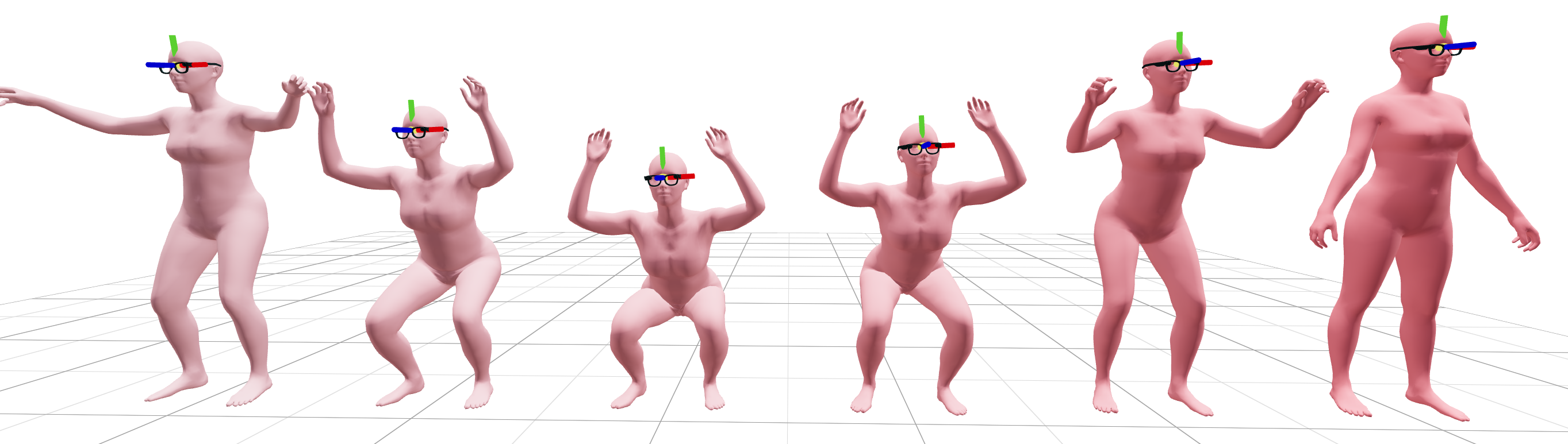

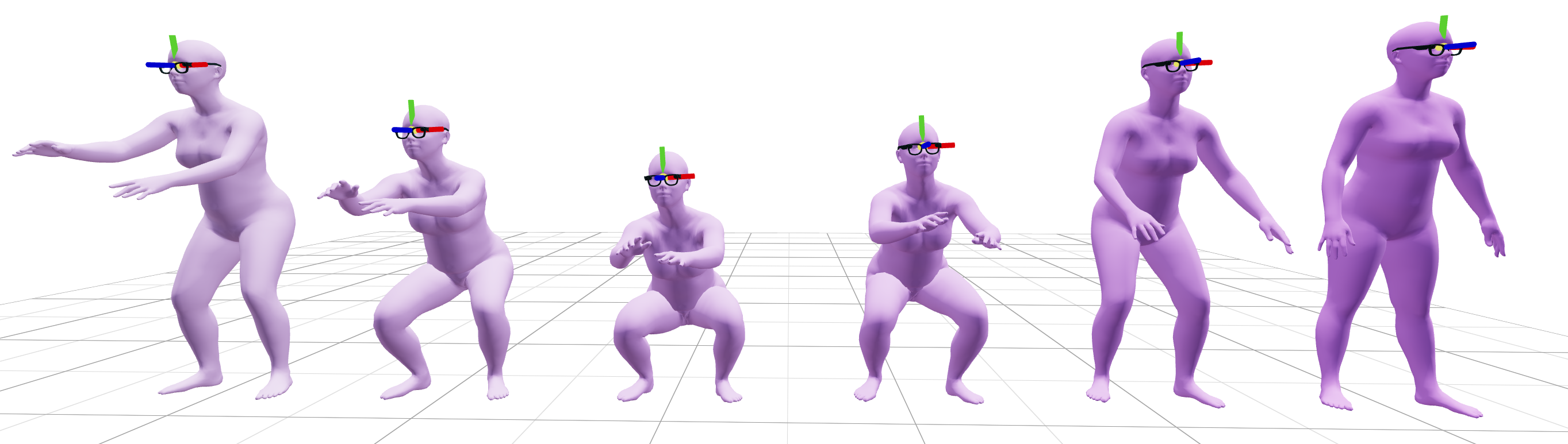

6.3.3 Body Pose Generation Conditioned on Head and Hands

This type of conditional estimation or generation problem arises rather naturally in many practical applications. A typical problem of this kind is how to estimate and generate body pose and hand gesture conditioned on a given head pose and egocentric images, as illustrated in Figure 6.10. This is often the problem we need to solve when one is wearing a head-mounted device such as the Vision Pro from Apple or the Project Aria from Meta. The pose of the whole body and the gesture of the hands need to be inferred so that we can use the information to control virtual objects that the person interacts with.

Notice that in this case, one only has the head pose provided by the device and a very limited field of view for part of one’s hands and upper limbs. The pose of the rest of the body needs to be “inferred” or “completed” based on such partial information. The only way one can estimate the body pose over time is by learning the joint distribution of the head and body pose sequences in advance and then sampling this prior distribution conditioned on the real-time partial inputs. Figure 6.11 outlines a system called EgoAllo [YYZ+24] to solve this problem based on a learned conditional diffusion-denoising model.

![Figure 6.11 : Overview of technical components of EgoAllo [ YYZ+24 ] . A diffusion model is pretrained that can generate body pose sequence based on local body parameters (middle). An invariant parameterization g ( ⋅ ) g(\cdot) italic_g ( ⋅ ) of SLAM poses (left) is used to condition the diffusion model. These can be placed into the global coordinate frame via global alignment to input poses. When available, egocentric video is used for hand detection (left) via HaMeR [ PSR+23 ] , which can be incorporated into samples via guidance by the generated gesture.](x30.png)

Figure 6.12 compares some ground truth motion sequences with sampled results generated by the EgoAllo. Although the figure shows one result for each input head pose sequence, different runs can generate different body pose sequences that are consistent with the given head pose, all drawing from the distribution of natural full-body motion sequences.

Strictly speaking, the solution proposed in EgoAllo [YYZ+24] does not enforce measurement matching using the techniques introduced above. Instead it heuristically enforces the condition by utilizing the cross-attention mechanism in a transformer architecture. As we will describe with more precision in the paired data setting in Section 6.4.2, there is reason to believe that the cross-attention mechanism is in a way approximately realizing the conditional sampling of the denoising a posteriori. We believe the more principled techniques introduced here, if properly implemented, can lead to better methods that further improve the body pose and hand gesture estimation.

6.4 Conditional Inference with Paired Data and Measurements

In many practical applications, we do not know either the distribution of the data of interest or the explicit relationship between the data and certain observed attributes of the data. We only have a (large) set of paired samples from which we need to infer the data distribution and a mapping that models their relationship:

| (6.4.1) |

The problem of image classification can be viewed as one such example. In a sense, the classification problem is to learn an (extremely lossy) compressive encoder for natural images. Say, given a random sample of an image , we would like to predict its class label that best correlates the content in . We know the distribution of natural images of objects is low-dimensional compared to the dimension of the pixel space. From the previous chapters, we have learned that given sufficient samples, in principle, we can learn a structured low-dimensional representation for through a learned compressive encoding:

| (6.4.2) |

The representation can also be viewed as a learned (lossy but structured) code for . It is rather reasonable to assume that if the class assignment truly depends on the low-dimensional structures of and the learned code truly reflects such structures, and can be made highly correlated and hence their joint distribution should be extremely low-dimensional. Therefore, we may combine the two desired codes and together and try to learn a combined encoder:

| (6.4.3) |

where the joint distribution of is highly low-dimensional.

From our study in previous chapters, the mapping is usually learned as a sequence of compression or denoising operators in the same space. Hence to leverage such a family of operations, we may introduce an auxiliary vector that can be viewed as an initial random guess of the class label . In this way, we can learn a compression or denoising mapping:

| (6.4.4) |

within a common space. In fact, the common practice of introducing an auxiliary “class token” in the training of a transformer for classification tasks, such as in ViT, can be viewed as learning such a representation by compressing (the coding rate of) given (noisy) samples of . If the distribution of the data is already a mixture of (low-dimensional) Gaussians, the work [WTL+08] has shown that classification can be done effectively by directly minimizing the (lossy) coding length associated with the given samples.

6.4.1 Class Conditioned Image Generation

While a learned classifier allows us to classify a given image to its corresponding class, we often would like to generate an image of a given class, by sampling the learned distribution of natural images. To some extent, this can be viewed as the “inverse” problem to image classification. Let denote the distribution of natural images, say modeled by a diffusion-denoising process. Given a class label random variable with realization , say an “Apple”, we would like to sample the conditional distribution to generate an image of an apple:

| (6.4.5) |

We call this class-conditioned image generation.

In Section 6.3.2, we have seen how to use the denoising-diffusion paradigm for conditional sampling from the posterior given model-based measurements (Equation 6.3.9), culminating in the DPS algorithm (Algorithm 6.1). This is a powerful framework, but it does not apply to the class (or text) conditioned image generation problem here, where an explicit generative model for the observations/attributes is not available due to the intractability of analytical modeling. In this section, we will present techniques for extending conditional sampling to this setting.

Thus, we now assume only that we have access to samples from the joint distribution of :

| (6.4.6) |

As in the previous section, we define with independent of , as in Equation 3.2.69 in Chapter 3, and we will repeatedly use the notation to denote realizations of and .

To proceed, we note that our development of conditional sampling under measurements only explicitly used the forward model in making the DPS approximation (6.3.26). In particular, the conditional posterior denoiser decomposition (6.3.14) still holds in the paired data setting, by virtue of Bayes’ rule and conditional independence of and given (recall Figure 6.8). Thus we can still write in the paired data setting

| (6.4.7) |

A natural ideal is then to directly implement the likelihood correction term in (6.4.7) using a deep network with parameters , as in Equation 6.4.4:

| (6.4.8) |

This expression combines the final representations (which also depend on ) of the noisy inputs with a classification head , which maps the representations to a probability distribution over the possible classes. As is common in practice, it also takes the time in the noising process as input. Thus, with appropriate training, it provides an approximation to the log-likelihood , and differentiating with respect to its input allows an approximation to the second term in Equation 6.4.7:

| (6.4.9) |

where, as usual, we approximate the first term in Equation 6.4.7 via a learned unconditional denoiser for with parameters , and where we write for to denote the -th canonical basis vector for (i.e., the vector with a one in the -th position, and zeros elsewhere). The reader should note that the conditional denoiser requires two separate training runs, with separate losses: one for the classifier parameters , on a classification loss,888In Chapter 7, we review the process of training such a classifier in full detail. and one for the denoiser parameters , on a denoising loss. Such an approach to conditional sampling was already recognized and exploited to perform conditional sampling in pioneering early works on diffusion models, notably those by [SWM+15] and by [SSK+21].

However, this straightforward methodology has two key drawbacks (which is why we label it as “naive”). The first is that, empirically, such a trained deep network classifier frequently does not provide a strong enough guidance signal (in Equation 6.4.7) to ensure that generated samples reflect the conditioning information . This was first emphasized by [DN21], who noted that in the setting of class-conditional ImageNet generation, the learned deep network classifier’s probability outputs for the class being conditioned on were frequently around —large enough to be the dominant class, but not large enough to provide a strong guidance signal—and that upon inspection, generations were not consistent with the conditioning class . [DN21] proposed to address this heuristically by incorporating an “inverse temperature” hyperparameter into the definition of the naive conditional denoiser (6.4.9), referring to the resulting conditional denoiser as having incorporated “classifier guidance” (CG):

| (6.4.10) |

with the case coinciding with (6.4.9). [DN21] found that a setting performed best empirically. One possible interpretation for this is as follows: note that, in the context of the true likelihood term Equation 6.4.7, scaling by gives equivalently

| (6.4.11) |

which suggests the natural interpretation of the parameter performing (inverse) temperature scaling on the likelihood , which is precise if we consider the renormalized distribution . However, note that this is not a rigorous interpretation in the context of Equation 6.4.7, because the gradients are taken with respect to , and the normalization constant in the temperature-scaled distribution is in general a function of . Instead, the parameter should simply be understood as amplifying large values of the deep network classifier’s output probabilities relative to smaller ones, which effectively amplifies the guidance signal provided in cases where the deep network assigns it the largest probability among the classes.

Nevertheless, classifier guidance does not address the second key drawback of the naive methodology: it is both cumbersome and wasteful to have to train an auxiliary classifier in addition to the unconditional denoiser , given that it is not possible to directly adapt a pretrained classifier due to the need for it to work well on noisy inputs and incorporate other empirically-motivated architecture modifications. In particular, [DN21] found that it was necessary to explicitly design the architecture of the deep network implementing the classifier to match that of the denoiser. Moreover, from a purely practical perspective—trying to obtain the best possible performance from the resulting sampler—the best-performing configuration of classifier guidance-based sampling departs even further from the idealized and conceptually sound framework we have presented above. To obtain the best performance, [DN21] found it necessary to provide the class label as an additional input to the denoiser . As a result, the idealized classifier-guided denoiser (6.4.10), derived by [DN21] as we have done above from the conditional posterior denoiser decomposition (6.4.7), is not exactly reflective of the best-performing denoiser in practice—such a denoiser actually combines a conditional denoiser for given with an additional guidance signal from an auxiliary classifier!

This state of affairs, empirically motivated as it is, led [HS22] in subsequent work to propose a more empirically pragmatic methodology, known as classifier-free guidance (CFG). Instead of representing the conditional denoiser (6.4.7) as a weighted sum of an unconditional denoiser for with a log-likelihood correction term (with possibly modified weights, as in classifier guidance), they accept the apparent necessity of training a conditional denoiser for given , as demonstrated by the experimental results of [DN21], and replace the log-likelihood gradient term with a correctly-weighted sum of this conditional denoiser with an unconditional denoiser for given .999That said, [HS22] actually proposed to use a different weighting than what we present here, based on the fact that [DN21] heuristically replaced the unconditional denoiser in (6.4.7) with a conditional denoiser. In fact, the weighting we derive and present here reflects modern practice, and in particular is used in state-of-the-art diffusion models such as Stable Diffusion 3.5 [EKB+24]. To see how this structure arises, we begin with an ‘idealized’ version of the classifier guidance denoiser defined in (6.4.10), for which the denoiser and the classifier perfectly approximate their targets, via (6.4.7):

| (6.4.12) |

We then use Bayes’ rule, in the form

| (6.4.13) |

together with Tweedie’s formula (Theorem 3.3, modified as in Equation 3.2.70) to convert between score functions and denoisers, to obtain

| (6.4.14) |

where in the last line, we apply Equation 6.3.11. Now, Equation 6.4.14 suggests a natural approximation strategy: we combine a learned unconditional denoiser for given , as previously, with a learned conditional denoiser for given and .

However, following [HS22] and the common practice of training deep network denoisers, it is standard to use the same deep network to represent both the conditional and unconditional denoisers by introducing an additional label, which we will denote by , to denote the “unconditional” case. This leads to the form of the CFG denoiser:

| (6.4.15) |

To train a denoiser for use with classifier-free guidance sampling, where , we proceed almost identically to the unconditional training procedure in Algorithm 3.2, but with two modifications:

-

1.

When we sample from the dataset, we sample a pair rather than just a sample .

-

2.

Every time we sample a pair from the dataset, we sample the augmented label via

(6.4.16) Here, is a new hyperparameter. This can be viewed as a form of dropout [SHK+14].

In this way, we train a conditional denoiser suitable for use in classifier-free guidance sampling. We summarize the overall sampling process for class-conditioned sampling with classifier-free guidance in Algorithm 6.2.

[HS22] reports strong empirical performance for class-conditional image generation with classifier-free guidance, and it has become a mainstay of the largest-scale practical diffusion models, such as Stable Diffusion [RBL+22] and its derivatives. At the same time, its derivation is rather opaque and empirically motivated, giving little insight into the mechanisms behind its strong performance. A number of theoretical works have studied this, providing explanations for some parts of the overall CFG methodology [BN24a, LWQ25, WCL+24]—itself encompassing denoiser parameterization and training, as well as configuration of the guidance strength and performance at sampling time. Below, we will give an interpretation in the simplifying setting of a Gaussian mixture model data distribution and denoiser, which will demonstrate an insight into the parameterization of the denoiser in the presence of such low-dimensional structures.

Example 6.3.

Let us recall the low-rank mixture of Gaussians data generating process we studied in Example 3.2 (and specifically, the form in Equation 3.2.42). Given classes, we assume that

| (6.4.17) |

where each is a matrix with orthogonal columns, and . Moreover, we assume that the class label is a deterministic function of mapping an example to its corresponding mixture component. Applying the analysis in Example 3.2 (and the subsequent analysis of the low-rank case, culminating in Equation 3.2.56), we obtain for the class-conditional optimal denoisers

| (6.4.18) |

for each , and for the optimal unconditional denoiser, we obtain

| (6.4.19) |

As a result, we can express the CFG denoiser with guidance strength as

| (6.4.20) |

This denoiser has a simple, interpretable form. The first term, corresponding to the unconditional denoiser, performs denoising of the signal against an average of the denoisers associated with each subspace, weighted by how correlated is with each subspace. The second term, corresponding to the conditional denoiser, simply performs denoising with the conditioning class’s denoiser. The CFG scheme further averages these two denoisers: the effect can be gleaned from the refactoring

| (6.4.21) |

We have

| (6.4.22) |

and each summand is nonnegative, hence also bounded above by . So we can conclude two regimes for the terms in Equation 6.4.21:

-

1.

Well-correlated regime: If correlates well with , then the normalized weight corresponding to the summand in the unconditional denoiser is near to . Then

(6.4.23) all other weights are necessarily near to zero, and the CFG denoiser is approximately equal to the denoiser associated to the conditioning class .

-

2.

Poorly-correlated regime: In contrast, if does not correlate well with (say because is large), then the normalized weight corresponding to the summand in the unconditional denoiser is near to . As a result,

(6.4.24) and thus the guidance strength places a large positive weight on the denoiser associated to . Meanwhile, in the second term of Equation 6.4.21, any classes that are well-correlated with receive a large negative weight from the coefficient. This simultaneously has the effect of making the denoised signal vastly more correlated with the conditioning class , and making it negatively correlated with the previous iterate (i.e., the iterate before denoising). In other words, CFG steers the iterative denoising process towards the conditioning class and away from the previous iterate, a different dynamics from purely conditional sampling (i.e., the case ).

We now perform a further analysis of the form of this guided denoiser in order to make some inferences about the role of CFG. Many of these insights will be relevant to general data distributions with low-dimensional geometric structure, as well. First, notice that the CFG denoiser (6.4.20) takes a simple form in the setting where correlates significantly more strongly with a single subspace than any other . Indeed, because the ratio of weights in the class-conditional denoiser is given by

| (6.4.25) |

a large separation between the correlation of with and other subspaces implies that the sum over concentrates on the summand, giving that the CFG denoiser remains equal to the class-conditioned denoiser. Moreover, when , the magnitude of any such gap is amplified in the exponential, making this concentration on the summand even stronger. In particular, for small times (i.e., near to the support of the data distribution), CFG denoising is no different from standard class-conditional denoising—implying that it will converge stably once it has reached such a configuration. Thus, the empirical benefits of CFG should be due to its behavior in cases where is not unambiguously from a single class.

Next, we consider the problem of parameterizing a learnable denoiser to represent the optimal denoiser (6.4.20). Here, it may initially seem that the setting of classification of a mixture distribution is too much of a special case relative to learning practical data distributions, as the ideal denoiser (6.4.20) has in this setting the simple form of a hard assignment of the noisy signal to the (denoiser associated to) the subspace corresponding to the true class label of , averaged with the soft assignment denoiser associated to all subspaces , with weights given by the correlations of with these different subspaces. However, we can extract a more general form for the class-conditional denoiser in this example which is relevant for practical parameterization using the geometric structure of the mixture of Gaussians distribution, which actually parallels the kinds of geometric structure common in real-world data. More precisely, we add an additional assumption associated to the subspaces being ‘distinguishable’ from one another, which is natural in practice: specifically, we assume that for any pair of indices with , we can find a set of directions such that

| (6.4.26) |

This is a slightly stronger assumption than simple distinguishability, but it should be noted that it is not overly restrictive: for example, it still allows the subspaces to have significant correlations with one another.101010 More generally, this assumption is naturally formulated as an incoherence condition between the subspaces , a familiar notion from the theory of compressive sensing. These vectors can then be thought of as embeddings of the class label , and we can use them to define a more general operator that can represent both the unconditional and class-conditional denoisers. More precisely, consider the mapping

| (6.4.27) |

If we substitute for some , we get

| (6.4.28) | ||||

Now, because , if the subspace dimension is sufficiently large—for example, if we consider a large-scale, asymptotic regime where with their ratio converging to a fixed constant—we have for that is close to , by the concentration of measure phenomenon111111One of a handful of blessings of dimensionality—see [WM22].. Then by the argument in the previous paragraph, we have in this regime that for almost all realizations of , the following approximation holds:

| (6.4.29) |

This argument shows that the operator (6.4.27) is, with overwhelming probability, equal to the optimal class-conditional denoiser (6.4.18) for when ! In intuitive terms, at small noise levels —corresponding to the structure-enforcing portion of the denoising process—plugging in the embedding for a given class to the second argument of the operator (6.4.27) leads the resulting function of to well-approximate the optimal class-conditional denoiser (6.4.18) for . Moreover, it is evident that plugging in to the operator (6.4.27) yields (exactly) the optimal unconditional denoiser (6.4.19) for . Thus, this operator provides a unified way to parameterize the constituent operators in the optimal denoiser for within a single ‘network’: it is enough to add the output of an instantiation of (6.4.27) with input to an instantiation with input ). The resulting operator is a function of , and computationally, the subspaces and embeddings become its learnable parameters.

Example 6.3 shows that in the special case of a low-rank mixture of Gaussians data distribution for with incoherent components, operators of the form

| (6.4.30) |

provide a sufficiently rich class of operators to parameterize the MMSE-optimal denoiser for noisy observations of , in the setting of classifier-free guidance where one network is to be used to represent both the unconditional and class-conditional denoisers for . For such operators, the auxiliary input can be taken as either or a suitable embedding of the class label in order to realize such a denoiser. Based on the framework in Chapter 4, which develops deep network architectures suitable for transforming more general data distributions to structured representations using the low-rank mixture of Gaussians model as a primitive, it is natural to imagine that operators of the type (6.4.30) may be leveraged in denoisers for general data distributions with low-dimensional geometrically-structured components that are sufficiently distinguishable (say, incoherent) from one another. The next section demonstrates that this is indeed the case.

6.4.2 Caption Conditioned Image Generation

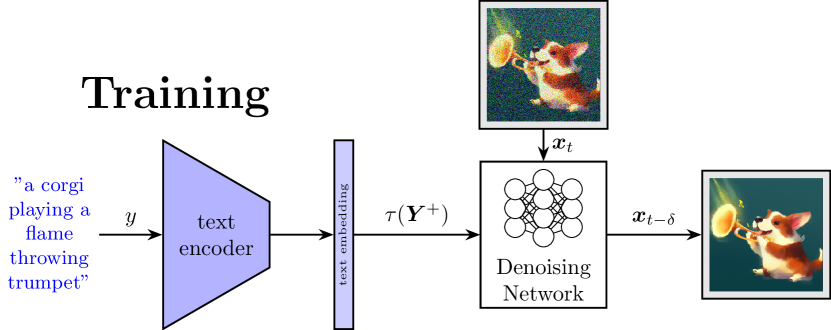

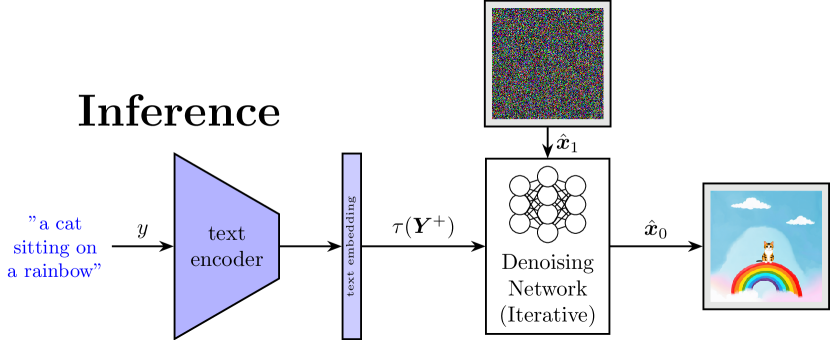

In the previous subsection, we formulated denoisers for class-conditional denoising with classifier-free guidance, a ubiquitous practical methodology used in the largest-scale diffusion models, and showed how to parameterize them (in Example 6.3) in the special case of a low-rank Gaussian mixture model data distribution. One interesting byproduct of this example is that it highlights the crucial role of embeddings of the class label into a common space with the image in order to provide a concise and unified scheme for parameterizing the optimal denoisers (conditional and unconditional). Below, we will describe an early such instantiation, which formed the basis for the original open-source Stable Diffusion implementation [RBL+22]. In this setting, the embedding and subsequent conditioning is performed not on a class label, but a text prompt, which describes the desired image content (Figure 6.13). We denote the raw tokenized text prompt as in this context, since it corresponds to a sequence of vectors—in Section 7.4, we describe the process of encoding a text sequence as a vector representation in detail.

Stable Diffusion follows the conditional generation methodology we outline in Section 6.4.1, with two key modifications: (i) The conditioning signal is a tokenized text prompt , rather than a class label; (ii) Image denoising is performed in “latent” space rather than on raw pixels, using a specialized, pretrained variational autoencoder pair , (see Section 5.1.4), where is the encoder and is the decoder. Subsequent model development has shown that point (ii) is an efficiency issue, rather than a core conceptual one, so we will not focus on it, other than to mention that it simply leads to the following straightforward modifications to the text-to-image pipeline sketched in Figure 6.13:

-

1.

At training time, the encoder is used to generate the denoising targets, and all denoising is performed on the encoded representations ;

-

2.

At generation time, sampling is performed on the representations , and the final image is generated by applying the decoder .

In contrast, issue (i) is essential, and the approach proposed to address it represents one of the lasting methodological innovations of [RBL+22]. In the context of the iterative conditional denoising framework we have developed in Section 6.4.1, this concerns the parameterization of the denoisers .121212In the setting of text conditioning, the ‘augmented’ label , which is either the encoded text prompt or , denoting unconditional denoising, is often implemented by mapping to the empty string “”, then encoding this text prompt with the tokenizer as usual. This gives a simple, unified way to treat conditional and unconditional denoising with text conditioning. [RBL+22] implement text conditioning in the denoiser using a layer known as cross attention, inspired by the original encoder-decoder transformer architecture of [VSP+17]. Cross attention is implemented as follows. We let denote an encoding network for the text embeddings (often a causal transformer—see Section 7.4), and let denote the mapping corresponding to one of the intermediate representations in the denoiser.131313In practice, text-conditioned denoisers add cross attention layers at regular intervals within the forward pass of the denoiser, so should be seen as layer-dependent, in contrast to . See [RBL+22] for details. Here, is the maximum tokenized text prompt length, and roughly corresponds to the number of image channels (layer-dependent) in the representation, which is fixed if the input image resolution is fixed. Cross attention (with heads, and no bias) is defined as

| (6.4.31) |

where denotes the ubiquitous self attention operation in the transformer (which we recall in detail in Chapter 7: see Equations 7.2.15 and 7.2.16), and for (as well as the output projection ) are the learnable parameters of the layer.

Notice that, by the definition of the self-attention operation, cross attention outputs linear combinations of the value-projected text embeddings weighted by correlations between the image features and the text embeddings. In the denoiser architecture used by [RBL+22], self-attention residual blocks in the denoiser architecture, applied to the image representation at the current layer and defined analogously to those in Equation 7.2.13 for the vision transformer, are followed by cross attention residual blocks of the form (6.4.31). Such a structure requires the text encoder to, in a certain sense, share some structure in its output with the image feature embedding : this can be enforced either by appropriate joint text-image pretraining (such as with CLIP [RKH+21]) or by joint training with the denoiser itself (which was proposed and demonstrated by [RBL+22], but has fallen out of favor due to high data and training costs for strong performance). Conceptually, this joint text-image embedding space and the cross attention layer itself bear a strong resemblance to the conditional mixture of Gaussians denoiser that we derived in the previous section (recall (6.4.27)), in the special case of a single token sequence. Deeper connections can be drawn in the multi-token setting following the rate reduction framework for deriving deep network architectures discussed in Chapter 4, and manifested in the derivation of the CRATE transformer-like architecture.

This same basic design has been further scaled to even larger model and dataset sizes, in particular in modern instantiations of Stable Diffusion [EKB+24], as well as in competing models such as FLUX.1 [LBB+25], Imagen [SCS+22], and DALL-E [RDN+22]. The conditioning mechanism of cross attention has also become ubiquitous in other applications, as in EgoAllo (Section 6.3.3) for conditioned pose generation and in Michelangelo [ZLC+23] for conditional 3D shape generation based on images or texts.

6.5 Conditional Inference with Measurement Self-Consistency

In this last section, we consider the more extreme, but actually ubiquitous, case for distribution learning in which we only have a set of observed samples of the data , but no samples of directly! In general, the observation is of lower dimension than . To make the problem well-defined, we do assume that the observation model between and is known to belong to a certain family of analytical models, denoted as , with either known or not known.

Let us first try to understand the problem conceptually with the simple case when the measurement function is known and the observed is informative about . That is, we assume that is surjective from the space of to that of and the support of the distribution is low-dimensional. This typically requires that the extrinsic dimension of is higher than the intrinsic dimension of the support of the distribution of . Without loss of generality, we may assume that there exist functions:

| (6.5.1) |

Notice that here we may assume that we know but not . Let be the support of . In general, is a superset of . That is, we have .

6.5.1 Linear Measurement Models

First, for simplicity, let us consider that the measurement is a linear function of the data of interest:

| (6.5.2) |

Here the matrix is of full row rank and is typically smaller than . We assume is known for now. We are interested in how to learn the distribution of from such measurements. Since we no longer have direct samples of , we wonder whether we can still develop a denoiser for with observations . Let us consider the following diffusion process:

| (6.5.3) |

where .

Without loss of generality, we assume is of full row rank, i.e., under-determined. Let us define the corresponding process as one that satisfies:

| (6.5.4) |

From the denoising process of , we have

| (6.5.5) |

Then we have:

| (6.5.6) |

for a small . So and need to satisfy:

| (6.5.7) |

Among all that satisfy the above constraint, we arbitrarily choose the one that minimizes the distance . Therefore, we obtain a “denoising” process for :

| (6.5.8) |

Notice that this process does not sample from the distribution of . In particular, there are components of in the null space/kernel of that can never be recovered from observations. Thus more information is needed to recover the full distribution of , strictly speaking. But this recovers the component of that is orthogonal to the null space of .

6.5.2 3D Visual Model from Calibrated Images

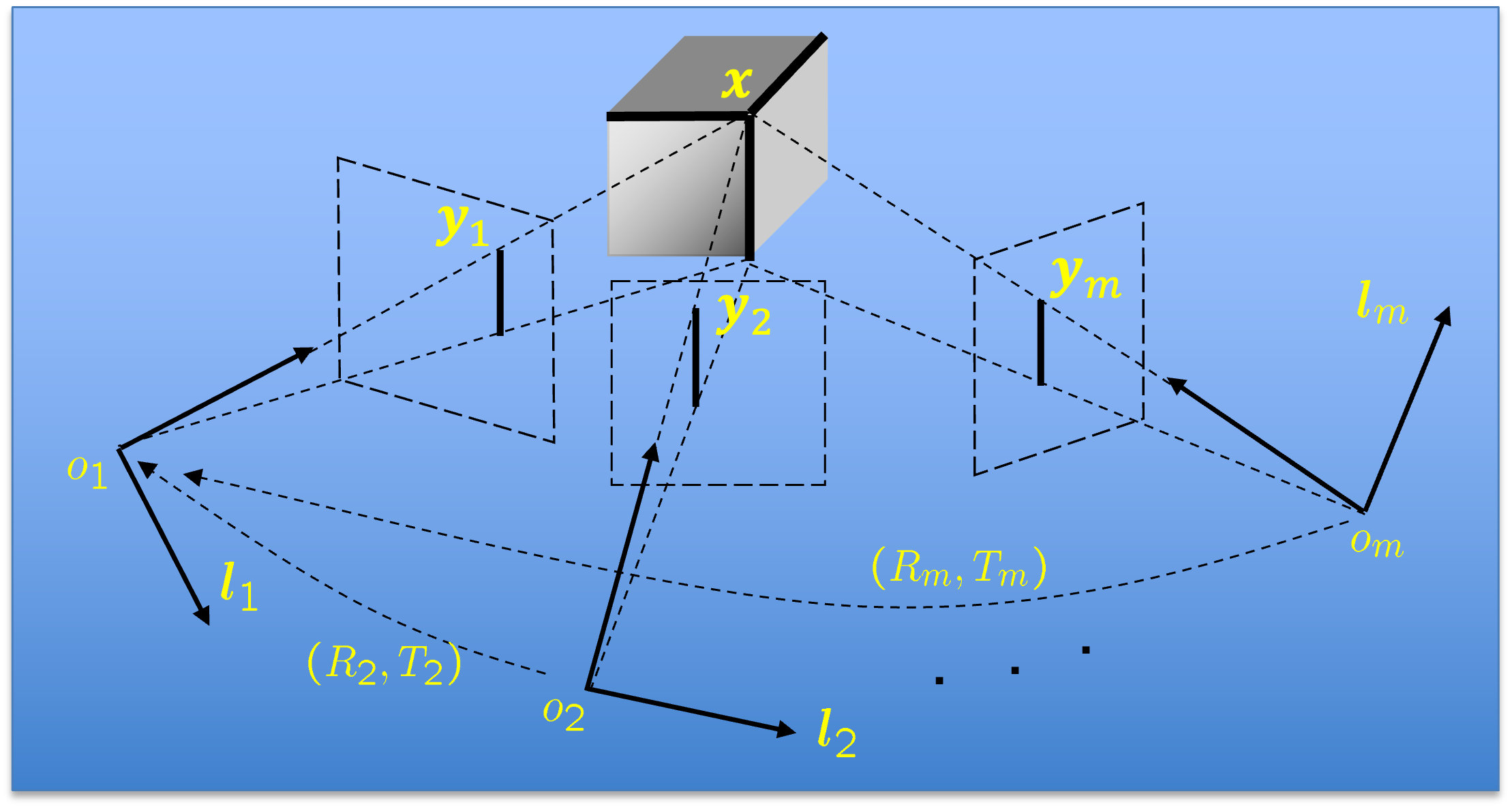

In practice, the measurement model is often nonlinear or only partially known. A typical problem of this kind is actually behind how we can learn a working model of the external world from the images perceived, say through our eyes, telescopes or microscopes. In particular, humans and animals are able to build a model of the 3D world (or 4D for a dynamical world) through a sequence of its 2D projections—a sequence of 2D images (or stereo image pairs). The mathematical or geometric model of the projection is generally known:

| (6.5.9) |

where represents a (perspective) projection of the 3D (or 4D) scene from a certain camera view at time to a 2D image (or a stereo pair) and is some possibly additive small measurement noise. Figure 6.14 illustrates this relationship concretely, while Figure 6.15 illustrates the model problem in the abstract. A full exposition of geometry related to multiple 2D views of a 3D scene is beyond the scope of this book. Interested readers may refer to the book [MKS+04]. For now, all we need to proceed is that such projections are well understood and multiple images of a scene contain sufficient information about the scene.

In general, we would like to learn the distribution of the 3D (or 4D) world scene 141414Here by abuse of notation, we use to represent either a point in 3D or a sample of an entire 3D object or a scene that consists of many points. from the perceived 2D images of the world so far. The primary function of such a (visual) world model is to allow us to recognize places where we had been before or predict what the current scene would look like in a future time at a new viewpoint.

Let us first examine the special but important case of stereo vision. In this case, we have two calibrated views of the 3D scene :

| (6.5.10) |

where parameters and for the view poses can be assumed to be known. and are two 2D-projections of the 3D scene . We may also assume that they have the same marginal distribution and we have learned a diffusion and denoising model for it. That is, we know the denoiser:

| (6.5.11) |

Or, furthermore, we may assume that we have a sufficient number of samples of stereo pairs and have also learned the joint distribution of the pairs. By a little abuse of notation, we also use to indicate the pair and as the learned probability distribution of the pair (say via a denoiser as above).

The main question now is: How to learn (a representation for) the distribution of the 3D scene from its two projections with known relationships? People might question the rationale for doing this: why is this necessary if the function is largely invertible? That is, the observation can largely determine the unknown , which is kind of the case for stereo—in general, two (calibrated) images contain sufficient information about the scene depth, from the given vantage point. However, 2D images are far from the most compact representation of the 3D scene as the same scene can produce infinitely many (highly correlated) 2D images or image pairs. In fact, a good representation of a 3D scene should be invariant to the viewpoint. Hence, a correct representation of the distribution of 3D scenes should be much more compact and structured than the distribution of 2D images, stereo pairs, or image-depth pairs.

Consider the (inverse) denoising process for the diffusion: in (6.5.11), where is standard Gaussian. From the denoising process of (6.5.11), we have

| (6.5.12) |

We try to find a corresponding “denoising” process of such that is related to as:

| (6.5.13) |

Then we have:

| (6.5.14) |

for a small . Suppose for some vector and small increment . We have

| (6.5.15) |

Hence, we have

| (6.5.16) |

Geometrically the vector in the domain of can be viewed as the pullback of the vector field under the map . In general, as before, we may (arbitrarily) choose to be the minimum 2-norm vector that satisfies the pullback relationship. Hence, we can express approximately as:

| (6.5.17) |

Remark 6.2 (Parallel Sensing and Distributed Denoising.).

There is something very interesting about the above equation (6.5.17). It seems to suggest we could try to learn the distribution of through a process that is coupled with (many of) its (partial) observations:

| (6.5.18) |

In this case, we obtain a set of equations that the vector field in the domain of should satisfy:

| (6.5.19) |

where . The final can be chosen as a “centralized” solution that satisfies all the above equations, or it could be chosen as a certain (stochastically) “aggregated” version of all :

| (6.5.20) |

that are computed in a parallel and distributed fashion? An open question here is exactly what the so-defined “denoising” process for converges to, even in the linear measurement model case. When would it converge to a distribution that has the same low-dimensional support as the original , as converges to ?

Visual World Model from Uncalibrated Image Sequences

In the above derivation, we have assumed that the measurement model is fully known. In the case of stereo vision, this is rather reasonable as the relative pose (and calibration) of the two camera views (or two eyes151515The relative pose of our two eyes is well known to our brain.) is usually known in advance. Hence, through the stereo image pairs, in principle we should be able to learn the distribution of 3D scenes, at least the ego-centric distribution of 3D scenes. However, the low-dimensional structures of the so-called learned distribution contain variation caused by changing the viewpoints. That is, the appearance of the stereo images varies when we change our viewpoints with respect to the same 3D scene. For many practical vision tasks (such as localization and navigation), it is important that we can decouple this variation of viewpoints from an invariant representation of (the distribution of) 3D scenes.

Remark 6.3.

Note that the above goal aligns well with Klein’s Erlangen Program for modern geometry, which is to study invariants of a manifold under a group of transformations. Here, we may view the manifold of interest as the distribution of ego-centric representations of 3D scenes. We have learned that it admits a group of three-dimensional rigid-body motion acting on it. It is remarkable that our brain has learned to effectively decouple such transformations from the observed 3D world.

Notice that we have studied learning representations that are invariant to translation and rotation in a limited setting in Chapter 4. We know that the associated compression operators take the necessary form of (multi-channel) convolutions, hence leading to the (deep) convolutional neural networks. Nevertheless, operators that are associated with compression or denoising that are invariant to more general transformation groups remain elusive to characterize [CW16a]. For the 3D Vision problem in its most general setting, we know the change of our viewpoints can be well modeled as a rigid-body motion. However, the exact relative motion of our eyes between different viewpoints is usually not known. More generally, there could also be objects (e.g., cars, humans, hands) moving in the scene and we normally do not know their motion either. How can we generalize the problem of learning the distribution of 3D scenes with calibrated stereo pairs to such more general settings? More precisely, we want to learn a compact representation of the 3D scenes that is invariant to the camera/eye motions. Once such a representation is learned, we could sample and generate a 3D scene and render images or stereo pairs from arbitrary poses.

To this end, note that we can model a sequence of stereo pairs as:

| (6.5.21) |

where represents the projection map from 3D to 2D. denotes the rigid-body motion parameters of the th view, with respect to some canonical frame in the world. represents the 3D scene at time . If the scene is static, should all be the same . To simplify the notation, we may denote the set of equations as one:

| (6.5.22) |

We may assume that we are given many samples of such stereo image sequences . The problem is how to recover the associated motion sequence and learn the distribution of the scene (that is invariant to the motion). To the best of our knowledge, this remains an open challenging problem, probably as the final frontier for the 3D Vision problem.

6.6 Summary and Notes

Measurement matching without clean samples.