Chapter 2 Learning Linear and Independent Structures

“The art of doing mathematics consists in finding that special case which contains all the germs of generality.”

– David Hilbert

Real data has low-dimensional structure. To see why this is true, let us consider the unassuming case of static on a TV when the satellite isn’t working. At each frame (approximately every seconds), the RGB static on a screen of size is, roughly, sampled independently from a uniform distribution on . In theory, the static could resolve to a natural image on any given frame, but even if you spend a thousand years looking at the TV screen, it will not. This discrepancy is explained by the fact that the set of natural images takes up a vanishingly small fraction of the hypercube . In particular, it is extremely low-dimensional, compared to the ambient space dimension. Similar phenomena occur for all other types of natural data, such as text, audio, and video. Thus, when we design systems and methods to process natural data and learn its structure or distribution, this is a central property of natural data which we need to take into account.

Therefore, our central task is to learn a distribution that has low intrinsic dimension in a high-dimensional space. In the remainder of this section, we discuss several classical methods to perform this task for several somewhat idealistic models for the distribution, namely models that are geometrically linear or statistically independent. While these models and methods are important and useful in their own right, we discuss them here as they motivate, inspire, and serve as a predecessor or analogue to more modern methods for more general distributions that involve deep (representation) learning.

Our main approach (and general problem formulation) can be summarized as:

Problem: Given one or several (noisy or incomplete) observations of a ground truth sample from the data distribution, obtain an estimate of this sample.

This approach underpins several classical methods for data processing, which we discuss in this chapter.

-

•

Section 2.1 — Principal Components Analysis (PCA): Given noisy samples from a distribution supported on one low-dimensional subspace, obtain an estimate of the true sample that lies on this subspace.

-

•

Section 2.2 — Complete Dictionary Learning and Independent Components Analysis (ICA): Given noisy samples from a distribution supported on a union (not the span) of a few low-dimensional subspaces, obtain an estimate of the true samples.

-

•

Section 2.3 — Sparse Coding and Overcomplete Dictionary Learning: Given noisy samples from a distribution supported on combinations of a few incoherent vectors, such as the coordinate axes, obtain an estimate of the true sample, which also has this property.

As we will soon reveal in later chapters, in the deep learning era, modern methods essentially adopt the same approach to learn.

In this chapter, as described above, we make simplifying modeling assumptions that essentially assume the data have geometrically (nearly, piece-wise) linear structures and statistically independent components. In Chapter 1, we have referred to such models of the data as “analytical models”. These modeling assumptions allow us to derive efficient algorithms with provable efficiency guarantees111Both in terms of data and computational complexity. for processing data at scale. However, they are imperfect models for often-complex real-world data distributions, and so their underlying assumptions only approximately hold. This means that the guarantees afforded by detailed analysis of these algorithms also only approximately hold in the case of real data. Nonetheless, the techniques discussed in this chapter are useful in their own right, and beyond that, serve as the “special case with the germ of generality”, so to speak, in that they present a guiding motivation and intuition for the more general paradigms within (deep) learning of more general distributions that we later address.

2.1 A Low-Dimensional Subspace

2.1.1 Principal Components Analysis (PCA)

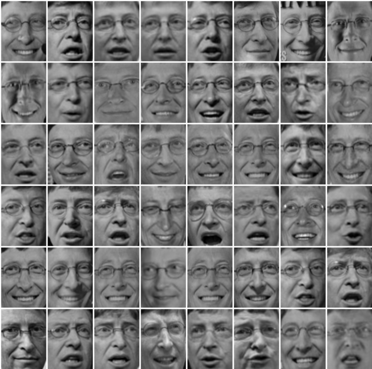

Perhaps the simplest modeling assumption possible for low-dimensional structure is the so-called low-rank assumption. Letting be the dimension of our data space, we assume that our data belong to a low-dimensional subspace of dimension , possibly plus some small disturbances. This ends up being a nearly valid assumption for some surprisingly complex data, such as images of handwritten digits and face data [RD03] as shown in Figure 2.1, yet as we will see, it will lend itself extremely well to comprehensive analysis.

Problem formulation.

To write this in mathematical notation, we represent a subspace of dimension by an orthonormal matrix such that the columns of span . Then, we say that our data have (approximate) low-rank structure if there exists an orthonormal matrix , vectors , and small vectors such that

| (2.1.1) |

Here are disturbances that prevent the data from being perfectly low rank; their presence in our model allows us to quantify the degree to which our analysis remains relevant in the presence of deviations from our model. The true supporting subspace is , the span of the columns of . To process all we can from these data, we need to recover ; to do this it is sufficient to recover , also called the principal components. Fortunately, this is a computationally tractable task named Principal Component Analysis, and we discuss now how to solve it.

Given data distributed as in (2.1.1), we aim to recover the model . A natural approach is to find the subspace and latent vectors which yield the best approximation . Namely, we aim to solve the problem

| (2.1.2) |

where is constrained to be an orthonormal matrix, as above. We will omit this constraint in similar statements below for the sake of concision.

Subspace encoding-decoding via denoising.

To simplify this problem, for a fixed , we have (proof as exercise):

| (2.1.3) | ||||

| (2.1.4) |

That is, the optimal solution to the above optimization problem has .

Now, we can write the original optimization problem in and as an optimization problem just over , i.e., to obtain the basis and compact codes it suffices to solve either of the two following equivalent problems:

| (2.1.5) |

Note that the problem on the right-hand side of (2.1.5) is a denoising problem: given noisy observations of low-rank data, we aim to find the noise-free copy of , which under the model (2.1.1) is . That is, the denoised input . Notice that this is the point on the subspace that is closest to , as visualized in Figure 2.2. Here by solving the equivalent problem of finding the best subspace, parameterized by the learned basis , we learn an approximation to the denoiser, i.e., the projection matrix that projects the noisy data point onto the subspace .

Putting the above process together, we essentially obtain a simple encoding-decoding scheme that maps a data point in to a lower-dimensional (latent) space and then back to :

| (2.1.6) |

Here, can be viewed as the low-dimensional compact code (or a latent representation) of a data point and the learned subspace basis as the associated codebook whose columns are the (learned) optimal code words. The process achieves the function of denoising by projecting it onto the subspace spanned by .

Computing the subspace basis.

For now, we continue our calculation. Let be the matrix whose columns are the observations . We have (proof as exercise)

| (2.1.7) | ||||

| (2.1.8) |

Thus, to compute the principal components, we find the orthogonal matrix which maximizes the term . We can prove via induction that this matrix has columns which are the top unit eigenvectors of . We leave the whole proof to the reader in Exercise 2.1, but we handle the base case of the induction here. Suppose that . Then we only have a single unit vector to recover, so the above problem reduces to

| (2.1.9) |

This is the so-called Rayleigh quotient of . By invoking the spectral theorem we diagonalize , where is orthogonal and is diagonal with non-negative entries. Hence

| (2.1.10) |

Since is an invertible orthogonal transformation, is a unit vector, and optimizing over is equivalent to optimizing over . Hence, we can write

| (2.1.11) |

whose optimal solutions among unit vectors are one-hot vectors whose only nonzero (hence unit) entry is in one of the indices corresponding to the largest eigenvalue of . This means that , the optimal solution to the original problem, corresponds to a unit eigenvector of (i.e., column of ) which corresponds to the largest eigenvalue. Suitably generalizing this to the case , and summarizing all the previous discussion, we have the following informal Theorem.

Theorem 2.1.

Suppose that our dataset can be written as

| (2.1.12) |

where captures the low-rank structure, are the compact codes of the data, and are small vectors indicating the deviation of our data from the low-rank model. Then the principal components of our dataset are given by the top eigenvectors of , where , and approximately correspond to the optimal linear denoiser: .

We do not give explicit rates of approximation here as they can become rather technical. In the special case that for all , the learned spans the support of the samples . If in addition the are sufficiently diverse (say, spanning all of ) then we would have perfect recovery: .

Remark 2.1.

In some data analysis tasks, the data matrix is formatted such that each data point is a row rather than a column as is presented here. In this case the principal components are the top eigenvectors of .

Remark 2.2 (Basis Selection via Denoising Eigenvalues).

In many cases, either our data will not truly be distributed according to a subspace-plus-noise model, or we will not know the true underlying dimension of the subspace. In this case, we have to choose ; this problem is called model selection. In the restricted case of PCA, one way to perform model selection is to compute and look for instances where adjacent eigenvalues sharply decrease; this is one indicator that the index of the larger eigenvalue is the “true dimension ”, and the rest of the eigenvalues of are contributed by the noise or disturbances . Model selection is a difficult problem and, nowadays in the era of deep learning where it is called “hyperparameter optimization”, is usually done via brute force or Bayesian optimization.

Remark 2.3 (Denoising Samples).

The expression on the right-hand side of (2.1.5), that is,

| (2.1.13) |

is what’s known as a denoising problem, thusly named because it is an optimization problem whose solution removes the noise from the samples so it fits on the subspace. Denoising—learning a map which removes noise from noisy samples so that it fits on the data structure (such as in (2.1.1), but maybe more complicated)—is a common method for learning distributions that will be discussed in the sequel and throughout the manuscript. Note that we have already discussed this notion, but it bears repeating due to its central importance in later chapters.

Remark 2.4 (Neural Network Interpretation).

If we do a PCA, we approximately recover the support of the distribution encoded by the parameter . The learned denoising map then takes the form . On top of being a denoiser, this can be viewed as a simple two-layer weight-tied linear neural network: the first layer multiplies by , and the second layer multiplies by , namely

| (2.1.14) |

Contrasting this to a standard two-layer neural network, we see a structural similarity:

| (2.1.15) |

In particular, PCA can be interpreted as learning a simple two-layer denoising autoencoder,222In fact, as we have mentioned in the previous chapter, PCA was one of the first problems that neural networks were used to solve [Oja82, BH89]. one of the simplest examples of a non-trivial neural network. In this framework, the learned representations would just be . In this way, PCA serves as a model problem for (deep) representation learning, which we will draw upon further in the monograph. Notice that in this analogy, the representations reflect, or are projections of, the input data towards a learned low-dimensional structure. This property will be particularly relevant in the future.

2.1.2 Pursuing Low-rank Structure via Power Iteration

There is a computationally efficient way to estimate the top eigenvectors of or any symmetric positive semidefinite matrix , called power iteration. This method is the building block of several algorithmic approaches to high-dimensional data analysis that we discuss later in the chapter, so we discuss it here.

Let be a symmetric positive semidefinite matrix. There exists an orthonormal basis for consisting of eigenvectors of , with corresponding eigenvalues . By definition, any eigenvector satisfies . Therefore, for any , is a “fixed point” to the following equation:

| (2.1.16) |

Theorem 2.2 (Power Iteration).

Assume that for all . If we compute the fixed point of (2.1.16) using the following iteration:

| (2.1.17) |

then, in the limit, will converge to a top unit-norm eigenvector of .

Proof.

First, note that for all , we have

| (2.1.18) |

Thus, has the same direction as and is unit norm, so we can write

| (2.1.19) |

Because all the eigenvectors of form an orthonormal basis for , we can write

| (2.1.20) |

where because is Gaussian the are all nonzero with probability . Thus, we can use our earlier expression for to write

| (2.1.21) |

Now, let us consider the case where . (The case with repeated top eigenvalues goes similarly.) Then we can write

| (2.1.22) |

Because for all , the terms inside the summation go to exponentially fast, and the remainder is the limit

| (2.1.23) |

which is a top unit eigenvector of . The top eigenvalue of can be estimated by , which converges similarly rapidly to . ∎

To find the second top eigenvector, we apply the power iteration algorithm to , which has eigenvectors and corresponding eigenvalues . By repeating this procedure times in sequence, we can very efficiently estimate the top eigenvectors of for any symmetric positive semidefinite matrix . Thus we can also apply it to to recover the top principal components, which is what we were after in the first place. Notice that this approach recovers one principal component at a time; we will contrast this to other algorithmic approaches, such as gradient descent on global objective functions, in future sections.

2.1.3 Probabilistic PCA

Notice that the above formulation makes no statistical assumptions on the data-generating process. However, it is common to include statistical elements within a given data model, as it may add further enlightening interpretations about the result of the analysis. As such, we ask the natural question: what is the statistical analogue to low-dimensional structure? Our answer is that a low-dimensional distribution is one whose support is concentrated around a low-dimensional geometric structure.

To illustrate this point, we discuss probabilistic principal component analysis (PPCA). This formulation can be viewed as a statistical variant of regular PCA. Mathematically, we now consider our data as samples from a random variable taking values in (also sometimes called a random vector). We say that has (approximate) low-rank statistical structure if and only if there exists an orthonormal matrix , a random variable taking values in , and a small random variable taking values in such that and are independent, and

| (2.1.24) |

Our goal is again to recover . Towards this end, we set up the analogous problem as in Section 2.1.1, i.e., optimizing over subspace supports and random variables to solve the following problem:

| (2.1.25) |

Since we are finding the best such random variable we can find its realization separately for each value of . Performing the same calculations as in Section 2.1.1, we obtain

| (2.1.26) |

again re-emphasizing the fact that the estimated subspace with principal components corresponds to a denoiser which projects onto that subspace. As before, we obtain

| (2.1.27) | ||||

| (2.1.28) |

and the solution to the latter problem is just the top principal components of the second moment matrix . Actually, the above problems are visually very similar to the equations for computing the principal components in the previous subsection, except with replacing . In fact, the latter quantity is an estimate for the former. Both formulations effectively do the same thing, and have the same practical solution—compute the left singular vectors of the data matrix , or equivalently the top eigenvectors of the estimated covariance matrix . The statistical formulation, however, has an additional interpretation. Suppose that and . We have

| (2.1.29) |

so that . Now working out we have

| (2.1.30) |

In particular, if is small, it holds that is approximately a low-rank matrix, in particular rank . Thus the top eigenvectors of essentially summarize the whole covariance matrix. But they are also the principal components, so we can interpret principal component analysis as performing a low-rank decomposition of .

Remark 2.5.

By using the probabilistic viewpoint of PCA, we achieve a clearer and more quantitative understanding of how it relates to denoising. First, consider the denoising problem in (2.1.26), namely

| (2.1.31) |

It is not too hard to prove that if has columns and if is an isotropic Gaussian random variable, i.e., with distribution ,333Other distributions work so long as they support all of , but the Gaussian is the easiest to work with here. then for any optimal solution to this problem, we have

| (2.1.32) |

and so the true supporting subspace, say , is recovered as the span of columns of , since

| (2.1.33) |

In particular, the learned denoising map is an orthogonal projection onto , pushing noisy points onto the ground truth supporting subspace. We can establish a similar technical result in the case where we only have finite samples, as in Theorem 2.1, but this takes more effort and technicality. Summarizing this discussion, we have the following informal Theorem.

Theorem 2.3.

Suppose that the random variable can be written as

| (2.1.34) |

where captures the low-rank structure, is a random vector taking values in , and is a random vector taking values in such that and are independent, and is small. Then the principal components of our dataset are given by the top eigenvectors of , and approximately correspond to the optimal linear denoiser: .

2.1.4 Matrix Completion

In the previous subsections, we discussed the problem of learning a low-rank geometric or statistical distribution, where the data were sampled from a subspace with additive noise. But this is not the only type of disturbance from a low-dimensional distribution that is worthwhile to study. In this subsection, we introduce one more class of non-additive errors which become increasingly important in deep learning. Let us consider the case where we have some data generated according to (2.1.1). Now we arrange them into a matrix . Unlike before, we do not observe directly; we instead imagine that our observation was corrupted en route and we obtained

| (2.1.35) |

where is a mask that is known by us, and is element-wise multiplication. In this case, our goal is to recover (from which point we can use PCA to recover , etc), given only the corrupted observation , the mask , and the knowledge that is (approximately) low-rank. This is called low-rank matrix completion.

There are many excellent resources discussing algorithms and approaches to solve this problem [WM22]. Indeed, this and similar generalizations of this low-rank structure recovery problem are resolved by “robust PCA”. We will not go into the solution method here. Instead, we will discuss under what conditions this problem is plausible to solve. On one hand, in the most absurd case, suppose that each entry of the matrix were chosen independently from all the others. Then there would be no hope of recovering exactly even if only one entry were missing and we had entries. On the other hand, suppose that we knew that has rank 1 exactly, which is an extremely strong condition on the low-dimensional structure of the data, and we were dealing with the mask

| (2.1.36) |

Then we know that the data are distributed on a line, and we know a vector on that line—it is just the first column of the matrix . From the last coordinate of each column, also revealed to us by the mask, we can solve for each column since for each final coordinate there is only one vector on the line with this coordinate. Thus we can reconstruct with perfect accuracy, and we only need a linear number of observations .

In the real world, actual problems are somewhere in between the two limiting cases discussed above. Yet the differences between these two extremes, as well as the earlier discussion of PCA, reveal a general kernel of truth:

The lower-dimensional and more structured the data distribution is, the easier it is to process, and the fewer observations are needed—provided that the algorithm effectively utilizes this low-dimensional structure.

As is perhaps predictable, we will encounter this motif repeatedly in the remainder of the manuscript, starting in the very next section.

2.2 A Mixture of Complete Low-Dimensional Subspaces

As we have seen, low-rank signal models are rich enough to provide a full picture of the interplay between low-dimensionality in data and efficient and scalable computational algorithms for representation and recovery under errors. These models imply a linear and symmetric representation learning pipeline (2.1.6):

which can be provably learned from finite samples of with principal component analysis (solved efficiently, say, with the power method) whenever the distribution of truly is linear. This is a restrictive assumption—for as Harold Hotelling, the distinguished 20th century statistician,444By coincidence, also famous for his contributions to the development and naming of Principal Component Analysis [Hot33]. objected following George Dantzig’s presentation of his theory of linear programming for the first time [Dan02],

“…we all know the world is nonlinear.”

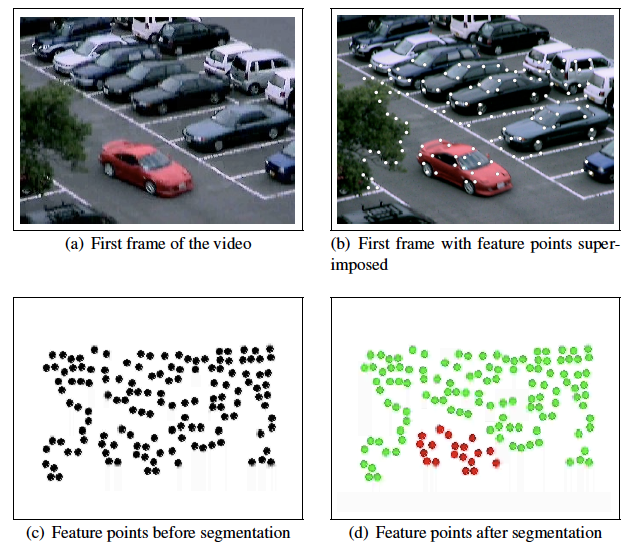

Even accounting for its elegance and simplicity, the low-rank assumption is too restrictive to be broadly applicable to modeling real-world data. A key limitation is the assumption of a single linear subspace that is responsible for generating the structured observations. In many practical applications, structure generated by a mixture of distinct low-dimensional subspaces provides a more realistic model. For example, consider a video sequence that captures the motion of several distinct objects, each subject to its own independent displacement (Figure 2.3 left). Under suitable assumptions on the individual motions, each object becomes responsible for an independent low-dimensional subspace in the concatenated sequence of video frames [VM04]. As another example, consider modeling natural images via learning a model for the distribution of patches, spatially-contiguous collections of pixels, within an image (Figure 2.3 right). Unlike in the Eigenface example we saw previously, where images of faces with matched poses can be well-approximated by a single low-dimensional subspace, the patch at a specific location in a natural image can correspond to objects with very different properties—for example, distinct color or shape due to occlusion boundaries. Therefore, modeling the distribution of patches with a single subspace is futile, but a mixture of subspaces, one for each region, performs surprisingly well in practice, say for segmenting or compressing purposes [MRY+11].555We will return to this observation in Chapter 4, where we will show it can be significantly generalized to learn representations for large-scale modern datasets. We will see a concrete example in the next chapter (Example 3.12).

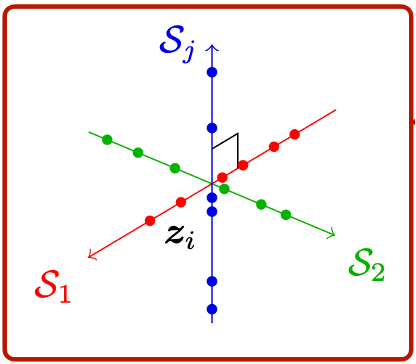

In this section, we will begin by discussing the conceptual and algorithmic foundations for structured representation learning when the data distribution can be modeled by a mixture of low-dimensional subspaces, as illustrated in Figure 2.4. In this setting, the decoder mapping will be almost as simple as the case of a single subspace: we simply represent via

| (2.2.1) |

where are a set of sparse weighting random variables, such that only a single subspace is selected in the sum. However, the task of encoding such data to suitable representations , and learning such an encoder-decoder pair from data, will prove to be more involved.

We will see how ideas from the rich literature on sparse representation and independent component analysis lead to a natural reformulation of the above decoder architecture through the lens of sparsity, the corresponding encoder architecture (obtained through a power-method-like algorithm analogous to that of principal component analysis), and strong guarantees of correctness and efficiency for learning such encoder-decoder pairs from data. In this sense, the case of mixed linear low-dimensional structure already leads to many of the key conceptual components of structured representation learning that we will develop in far greater generality in this book.

2.2.1 Mixtures of Subspaces and Sparsely-Used Dictionaries

Let , each of size , denote a collection of orthonormal bases for subspaces of dimension in . To say that follows a mixture-of-subspaces distribution parameterized by means, geometrically speaking, that

| (2.2.2) |

The statistical analogue of this geometric model, as we saw for the case of PCA and linear structure, is that follows a mixture of Gaussians distribution: that is,

| (2.2.3) |

In other words, for each , is Gaussian on the low-dimensional subspace with probability .

Remark 2.6 (A Mixture of Gaussians v.s. A Superposition of Gaussians).

One should be aware that the above model (2.2.3) is a mixture of Gaussian distributions, not to be confused with a mixture of Gaussian variables by superposition, say

| (2.2.4) |

where are independent random Gaussian vectors and are a set of fixed weights. As we know from the properties of Gaussian vectors, such a superposition will remain a Gaussian distribution.

For now, we focus on the geometric perspective offered by (2.2.2). There is an algebraically convenient alternative to this conditional representation. Consider a lifted representation vector , such that is -sparse with support on one of the consecutive non-overlapping blocks of coordinates out of . Then (2.2.2) can be written equivalently as

| (2.2.5) |

Here, the “norm” measures sparsity by counting the number of nonzero entries:

| (2.2.6) |

and the matrix is called a dictionary with all the as code words. In general, if the number of subspaces in the mixture is large enough, there is no bound on the number of columns contained in the dictionary . In the case where , is called undercomplete; when , it is called complete; and when , it is called overcomplete.

Now, (2.2.5) suggests a convenient relaxation for tractability of analysis: rather than modeling as coming from a mixture of specific subspaces , we may instead start with a dictionary , where may be smaller or larger than , and simply seek to represent with the sparsity sufficiently small. This leads to the sparse dictionary model for :

| (2.2.7) |

where represents an unknown noise vector. Geometrically, this implies that lies close to the span of a subset of columns of , making this an instantiation of the mixture-of-subspaces model (2.2.2) with a very large value of , and specific correlations between the subspaces .

Orthogonal dictionary for sparse coding.

Now we can formulate the structured representation learning problem for mixtures of low-dimensional subspaces that we will study in this section. We assume that we have samples from an unknown sparse dictionary model (2.2.7), possibly with added noises . Let us begin from the assumption that the dictionary in the sparse dictionary model (2.2.7) is complete and orthogonal,666It can be shown that for the complete case, we do not lose any generality by making the orthogonal assumption (Exercise 2.4). and that each coefficient vector is -sparse, with . In this setting, representation learning amounts to correctly learning the orthogonal dictionary via optimization: we can then take as the encoder and as the decoder, and . In diagram form:

| (2.2.8) |

We see that the autoencoding pair for complete dictionary learning is symmetric, as in the case of a single linear subspace, making the computational task of encoding and decoding no more difficult than in the linear case. On the other hand, the task of learning the dictionary is strictly more difficult than learning a single linear subspace by PCA. To see why we cannot simply use PCA to learn the orthogonal dictionary correctly, note that the loss function that gave rise to PCA, namely (2.1.5), is completely invariant to rotations of the rows of the matrix : that is, if is any orthogonal matrix, then and are both feasible and have an identical loss for (2.1.5). The sparse dictionary model is decidedly not invariant to such transformations: if we replaced by and made a corresponding rotation of the representation coefficients , we would in general destroy the sparsity structure of , violating the modeling assumption. Thus, we need new algorithms for learning orthogonal dictionaries.

2.2.2 Complete Dictionary Learning

In this section, we will derive algorithms for solving the orthogonal dictionary learning problem. To be more precise, we assume that the observed vector follows a statistical model

| (2.2.9) |

where is an unknown orthogonal dictionary, is a random vector with statistically independent components , each with zero mean, and is an independent random vector of small (Gaussian) noises. The goal is to recover (and hence ) from samples of .

Here we assume that each independent component is distributed as

That is, it is the product of a Bernoulli random variable with probability of being and of being , and an independent Gaussian random variable with variance . This distribution is formally known as the Bernoulli-Gaussian distribution. The normalization is chosen so that and hence . This modeling assumption implies that the vector of independent components is typically very sparse: we calculate , which is small when is inversely proportional to .

Remark 2.7 (The Orthogonal Assumption).

At first sight, the assumption that the dictionary is orthogonal might seem to be somewhat restrictive. But there is actually no loss of generality. One may consider a complete dictionary to be any square invertible matrix . With samples generated from this dictionary: , it is easy to show that with some preconditioning of the data matrix :

| (2.2.10) |

then there exists an orthogonal matrix such that

| (2.2.11) |

See Exercise 2.4 or [SQW17a] for more details.

Dictionary learning via the MSP algorithm.

Now suppose that we are given a set of observations:

| (2.2.12) |

Let and . The goal is to recover from the data . Therefore, given any orthogonal matrix ,

| (2.2.13) |

would be nearly sparse if (as by assumption the noise is of small magnitude).

Also, given is orthogonal and the fact is small, the vector has a predictable expected norm, i.e., . It is a known fact that for vectors on a sphere, maximizing the norm is equivalent to minimizing the norm (for promoting sparsity),

| (2.2.14) |

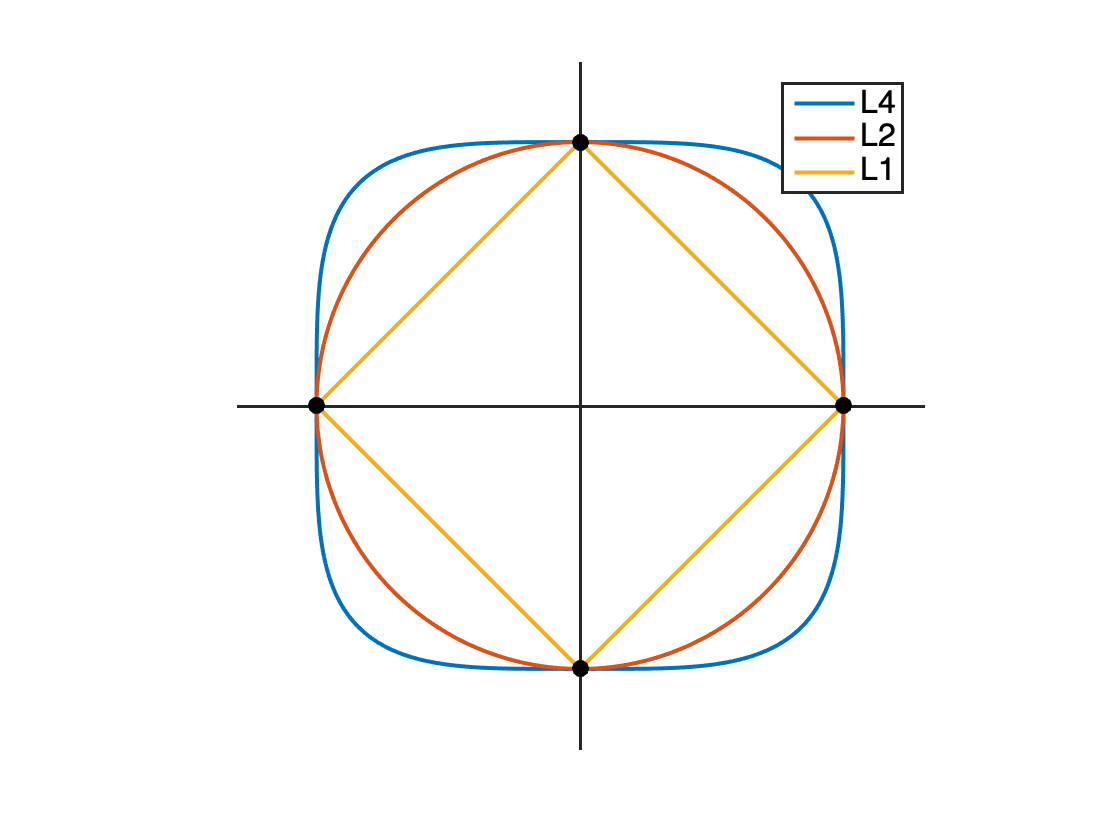

This is illustrated in Figure 2.5.

An orthogonal matrix preserves the Euclidean () norm: . Therefore, to find the correct orthogonal dictionary from , we may try to solve the following optimization program:

| (2.2.15) |

This is known as the maximization problem [ZMZ+20]. After we find the solution , we can take the transpose .

Remark 2.8.

It is also known that for vectors on a sphere, minimizing the norm is equivalent to minimizing the norm (for promoting sparsity),

which is also illustrated in Figure 2.5. This fact can also be exploited to learn the dictionary effectively and efficiently. This was actually explored earlier than the norm used here. Interested readers may refer to the work of [QZL+20a].

Note that the above problem is equivalent to the following constrained optimization problem:

| (2.2.16) |

As shown in [WM22], using the Lagrange multiplier method, one can derive that the optimal solution to the problem should satisfy the following “fixed point” condition:

| (2.2.17) |

where is a projection onto the space of orthogonal matrices .777For any matrix with SVD , . We leave this as an exercise for the reader.

To compute the fixed point for the above equation, similar to how we computed eigenvectors for PCA (2.1.16), we may take the following power iteration:

| (2.2.18) |

This is known as the matching, stretching, and projection (MSP) algorithm proposed by [ZMZ+20]. It was shown that under broad conditions such a greedy algorithm indeed converges to the correct solution at a superlinear rate.

Remark 2.9 (Global Optimality of Maximization).

The constrained maximization problem is a nonconvex program. In general one should not expect that any greedy (say gradient-descent type) algorithms would converge to the globally optimal solution. Surprisingly, one can show that, unlike general nonconvex programs, the landscape of maximization over a sphere

| (2.2.19) |

is very benign: All local minima are close to the global optima and all critical points are saddle points with a direction of negative curvature. Hence, any descent method with the ability of escaping strict saddle points provably finds global optimal solutions. For more precise statements, interested readers may refer to [QZL+20].

Remark 2.10 (Stable Deep Linear Network).

The above iterative process of computing the dictionary has a natural incremental “deep learning” interpretation. Let us define and , then it is easy to show that

If converges to the correct dictionary , then the above iterative encoding process is essentially equivalent to a “deep linear network”:

Note that the computation of the increment transforms at each layer depends only on the feature output from the previous layer . The network is naturally stable as each layer is a norm-preserving orthogonal transform. Despite its resemblance to a linear deep network, backpropagation is unnecessary to learn each layer. All layers are learned in one forward pass!

2.2.3 Connection to ICA and Kurtosis

With the Bernoulli-Gaussian model, the variables are independent and non-Gaussian. Then, there is a clear correspondence between the dictionary learning and the classic independent component analysis (ICA), to the extent that algorithms to solve one problem can be used to solve the other.888We explore this issue in more depth in Exercise 2.3, where a connection between non-Gaussianity of the independent components and the purely geometric notion of symmetry is made. This issue is related to our observation above that PCA does not work for recovering sparsely-used orthogonal dictionaries: in the statistical setting, it can be related to rotational invariance of the Gaussian distribution (Exercise 2.2).

Towards deriving an algorithm based on ICA, we focus on an objective function known as kurtosis, which is used in ICA as a direct consequence of the non-Gaussianity of the components. The kurtosis, or fourth-order cumulant, of a zero-mean random variable is defined as

| (2.2.20) |

If we have only finite samples from the random variable arranged into a vector , we define kurtosis through their empirical average, which yields

| (2.2.21) |

Finally, for random vectors, we define their kurtosis as the sum of each component’s scalar kurtosis. Kurtosis is a natural loss function for ICA because for Gaussian , kurtosis is zero; the reader can verify further that the Bernoulli-Gaussian distribution has positive kurtosis. Thus a natural procedure for seeking non-Gaussian independent components is to search for a set of mutually-orthogonal directions such that has maximal kurtosis, where is the Bernoulli-Gaussian ICA data matrix. Formally, we seek to solve the problem

| (2.2.22) |

At one extreme, we may set and seek to recover the entire dictionary in a single shot. It can be shown that this problem can be solved with the MSP algorithm we have seen previously. At the other extreme, we may set and seek to recover a single direction (column of ) at a time, performing deflation, i.e., replacing the data matrix by , after each step before finding another direction. There is a natural tradeoff between the scalability of the incremental approach and the efficiency and robustness of the approach.

Incremental ICA: correctness and FastICA algorithm.

The FastICA algorithm, advanced by Hyvärinen and Oja [HO97], is a fast fixed-point algorithm for solving the kurtosis maximization scheme for ICA. The problem at hand is

| (2.2.23) |

First, we will perform some very basic analysis of this objective to verify its correctness. Notice by the change of variables that this problem is equivalent to

This objective is simple enough that we can make strong statements about its correctness as a formulation for recovering the dictionary . For example, in the population setting where , we may use additivity properties of the kurtosis (Exercise 2.5) and our assumed normalization on the independent components to write the previous problem equivalently as

| (2.2.24) |

It can be shown that under the Bernoulli-Gaussian assumption, the optimization landscape of this problem is “benign” (Exercise 2.7)—meaning that all local maxima of the objective function correspond to the recovery of one of the independent components. One efficient and scalable way to compute one of these maxima is via first-order optimization algorithms, which iteratively follow the gradient of the objective function and project onto the constraint set . Since we have assumed that each satisfies , we have for large

| (2.2.25) |

We can then derive a corresponding approximation to the gradient:

The FastICA algorithm uses a fixed-point method to compute directions of maximum kurtosis. It starts from the first-order optimality conditions for the kurtosis maximization problem, given the preceding gradient approximation and the constraint set, which read

| (2.2.26) |

where the specific value of is determined using the unit norm constraint on . Exercise 2.6 describes the mathematical background necessary to derive these optimality conditions from first principles. Equation (2.2.26) is satisfied by any critical point of the kurtosis maximization problem; we want to derive an equation satisfied by only the maximizers. After noticing that , we equivalently re-express (2.2.26) as the modified equation

| (2.2.27) |

and realize that any maximizer of (2.2.23) must satisfy , assuming that is sufficiently large. Hence, we may normalize both sides of (2.2.27), giving the following fixed-point equation satisfied by every maximizer of (2.2.23):

| (2.2.28) |

Iterating the mapping defined by the lefthand side of this fixed point expression then yields the FastICA algorithm of Hyvärinen and Oja [HO97]:

| (2.2.29) |

It turns out that the FastICA algorithm converges extremely rapidly (actually at a cubic rate) to columns of the dictionary ; interested readers may consult [HO97] for details. Comparing the FastICA algorithm (2.2.29) to the power method studied in Section 2.1.1 for the PCA problem and the MSP algorithm (2.2.18), we notice a striking similarity. Indeed, FastICA is essentially a modified power method, involving the gradient of the empirical kurtosis rather than the simpler linear gradient of the PCA objective.

2.3 A Mixture of Overcomplete Low-Dimensional Subspaces

As we have seen, complete dictionary learning enjoys an elegant computational theory in which we maintain a symmetric autoencoding structure , , with a scalable power-method-like algorithm (the MSP algorithm) for learning an orthogonal dictionary/codebook from data. Nevertheless, for learning representations of general high-dimensional data distributions, one must expand the size of the codebook beyond the orthogonality requirement—meaning that we must have , with , corresponding to the case of an overcomplete dictionary/codebook,999We change the notation here from to in order to emphasize the non-orthogonality and non-square-shape of the overcomplete dictionary . and the signal model

| (2.3.1) |

There are both geometric and physical/modeling motivations for passing to the overcomplete case. Geometrically, recall that in our original reduction from the mixture of subspace data model to the sparse dictionary model, a mixture of subspaces in , each of dimension , led to a dictionary of shape . In other words, overcomplete dictionaries correspond to richer mixtures of subspaces, with more distinct modes of variability for modeling the high-dimensional data distribution. On the modeling side, we may run a computational experiment on real-world data that reveals the additional modeling power conferred by an overcomplete representation.

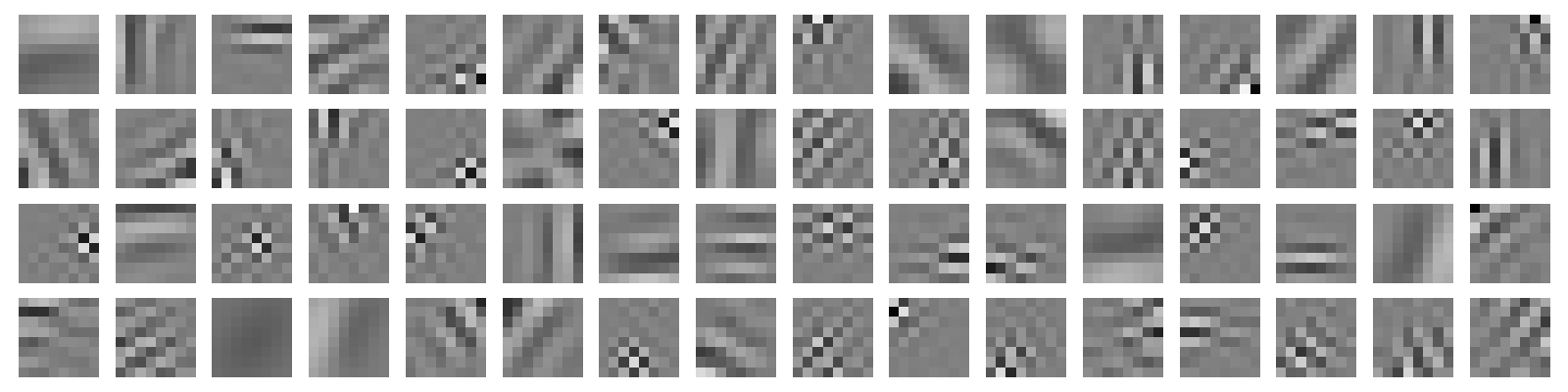

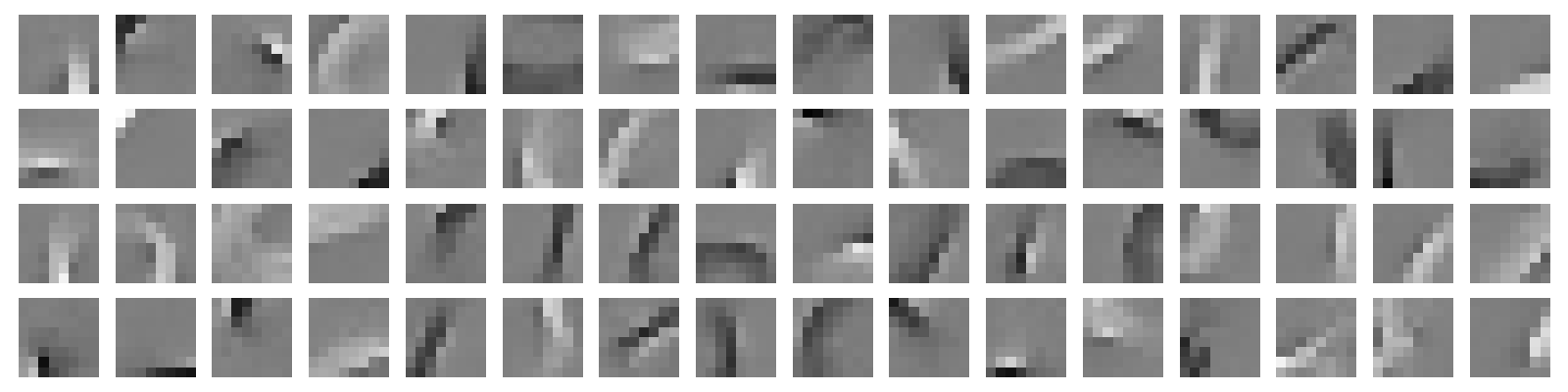

Example 2.1.

Given sampled images of hand-written digits, Figure 2.6(a) shows the result of fitting an orthogonal dictionary to the dataset. In contrast, Figure 2.6(b) shows the result of running an optimization algorithm for learning overcomplete dictionaries (which we will present in detail later in the Chapter) on these samples. Notice that the representations become far sparser and the codebooks far more interpretable—they consist of fundamental primitives for the strokes composing the digits, i.e. oriented edges.

In fact, overcomplete dictionary learning was originally proposed as a biologically plausible algorithm for image representation based on empirical evidence of how early stages of the visual cortex represent visual stimuli [OF96, OF97].

In the remainder of this section, we will overview the conceptual and computational foundations of overcomplete dictionary learning. Supposing that the model (2.3.1) is satisfied with sparse codes , overcomplete dictionary , and sparsity level , and given samples of , we want to learn an encoder mapping each to its sparse code , and a decoder reconstructing each from its sparse code. In diagram form:

| (2.3.2) |

We will start from the construction of the encoder . We will work incrementally: first, given the true dictionary , we will show how the problem of sparse coding gives an elegant, scalable, and provably-correct algorithm for recovering the sparse code of . Although this problem is NP-hard in the worst case, it can be solved efficiently and scalably for dictionaries which are incoherent, i.e. having columns that are not too correlated. The encoder architecture encompassed by this solution will no longer be symmetric: we will see it has the form of a primitive deep network, which depends on the dictionary .

Then we will proceed to the task of learning the decoder , or equivalently the overcomplete dictionary . We will derive an algorithm for overcomplete dictionary learning that allows us to simultaneously learn the codebook and the sparse codes , using ideas from sparse coding. Finally, we will discuss a more modern perspective on learnable sparse coding that leads us to a fully asymmetric encoder-decoder structure, as an alternative to (2.3.2). Here, the decoder will correspond to an incremental solution to the sparse dictionary learning problem, and yield a pair of deep network-like encoder decoders for sparse dictionary learning. This structure will foreshadow many developments to come in the remainder of the monograph, as we progress from analytical models to modern neural networks.

2.3.1 Sparse Coding with an Overcomplete Dictionary

In this section, we will consider the data model (2.3.1), which accommodates sparse linear combinations of many motifs, or atoms. Given data satisfying this model, i.e. expressible as

| (2.3.3) |

for some dictionary with atoms, sparse codes such that , and small errors , the sparse coding problem is to recover the codes as accurately as possible from the data , given the dictionary . Efficient algorithms to solve this problem succeed when the dictionary is incoherent in the sense that the inner products are uniformly small, hence the atoms are nearly orthogonal.101010As it turns out, in a high-dimensional space, it is rather easy to pack a number of nearly orthogonal vectors that is much larger than the ambient dimension [WM22].

Note that we can collect the into , collect the into , and collect the into , to rewrite (2.3.3) as

| (2.3.4) |

A natural approach to solving the sparse coding problem is to seek the sparsest signals that are consistent with our observations, and this naturally leads to the following optimization problem:

| (2.3.5) |

where the norm , taken elementwise, is known to promote sparsity of the solution [WM22]. This optimization problem is known as the LASSO problem.

However, unlike PCA or the complete dictionary learning problem, there is no clear power iteration-type algorithm to recover . A natural alternative is to consider solving the above optimization problem with gradient descent type algorithms. Let . Conceptually, we could try to find with the following iterations:

| (2.3.6) |

However, because the term associated with the norm is non-smooth, we cannot just run gradient descent. For this type of function, we need to replace the gradient with something that generalizes the notion of gradient, known as the subgradient :

| (2.3.7) |

However, it is known that the convergence of subgradient descent is usually very slow. Hence, for this type of optimization problems, it is conventional to adopt a so-called proximal gradient descent-type algorithm. We give a technical overview of this method in Section A.1.3.

Applying proximal gradient to the LASSO objective function (2.3.5) leads to the classic iterative shrinkage-thresholding algorithm (ISTA), which implements the iteration

| (2.3.8) | |||||

| (2.3.9) |

with step size , and the soft-thresholding operator defined on scalars by

| (2.3.10) | ||||

| (2.3.11) |

and applied element-wise to the input matrix. As a proximal gradient descent algorithm applied to a convex problem, convergence to a global optimum is assured, and a precise convergence rate can be derived straightforwardly [WM22].

We now take a moment to remark on the functional form of the update operator in (2.3.9). It takes the form

| (2.3.12) |

This functional form is very similar to that of a residual network layer, namely,

| (2.3.13) |

only decoupling the linear maps (i.e., matrix multiplications), adding a bias, and moving the nonlinearity. The nonlinearity in (2.3.9) is notably similar to the commonly-used ReLU activation function in deep learning—in particular, the soft-thresholding operator is like a “signed” ReLU activation, which is also shifted by a bias. The ISTA, then, can be viewed as a forward pass of a primitive (recurrent one-layer) neural network. We argue in Chapter 4 that such operations are essential to deep representation learning.

2.3.2 Overcomplete Dictionary Learning

Recall that we have the data model

| (2.3.14) |

where is sparse, and our goal previously was to estimate given knowledge of the data and the dictionary atoms . Now we turn to the more practical and more difficult case where we do not know either or and seek to learn them from a large dataset.

A direct generalization of Equation 2.3.5 suggests solving the problem

| (2.3.15) |

However, the bilinear term in Equation 2.3.15 introduces a scale ambiguity that hinders convergence: given any point and any constant , the substitution gives loss value

| (2.3.16) |

This loss is evidently minimized over by taking , which corresponds to making the rescaled dictionary go ‘to infinity’. In particular, the iterates of any optimization algorithm solving (2.3.15) will not converge.

This issue with (2.3.15) is dealt with by adding a constraint on the scale of the columns of the dictionary . For example, it is common to assume that each column of the dictionary in (2.3.14) has bounded norm—say, without loss of generality, by . We then enforce this as a constraint, giving the objective

| (2.3.17) |

Constrained optimization problems such as (2.3.17) can be solved by a host of sophisticated algorithms [NW06]. However, a simple and scalable one is actually furnished by the same proximal gradient descent algorithm that we used to solve the sparse coding problem in the previous section. We can encode each constraint as additional regularization term, via the characteristic function for the constraint set—details are given in Example A.1. Applying proximal gradient descent to the resulting regularized problem is equivalent to projected gradient descent, in which, at each iteration, the iterates after taking a gradient descent step are projected onto the constraint set.

Remark 2.11 ( maximization versus minimization).

Note that the above problem formulation follows naturally from the LASSO formulation (2.3.5) for sparse coding. We promote the sparsity of the solution via the norm. Nevertheless, if we are only interested in recovering the over-complete dictionary , the maximization scheme introduced in Section 2.2.2 also generalizes to the over-complete case, without any significant modification. Interested readers may refer to the work of [QZL+20].

The above problem (2.3.17), which we call overcomplete dictionary learning, is nonconvex as here both and are unknown. It cannot be solved easily by the standard convex optimization toolkit. Nevertheless, because it is interesting, simple to state, and practically important, there have been many important works dedicated to different algorithms and theoretical analysis for this problem. Here, for the interest of this manuscript, we present an idiomatic approach to solve this problem which is closer to the spirit of deep learning.

From our experience with the LASSO problem above, it is easy to see that, for the two unknowns and , if we fix one and optimize the other, each subproblem is in fact convex and easy to solve. This naturally suggests that we could attempt to solve the above program (2.3.17) by minimizing against or alternatively, say using gradient descent. Coupled with a natural choice of initialization, this leads to the following iterative scheme:

| (2.3.18) | |||

| (2.3.19) | |||

| (2.3.20) | |||

| (2.3.21) |

where the projection operation in the update for ensures each column has at most unit norm, via , and where is initialized with each column i.i.d. . The above consists of one ‘block’ of alternating minimization, and we repeatedly perform such blocks, each with independent initializations, until convergence. Above, we have used two separate indices and to indicate the iterations. As we will see later, this allows us to interpret the two updates separately in the context of deep learning.

Despite the dictionary learning problem being a nonconvex problem, it has been shown that alternating minimization type algorithms indeed converge to the correct solution, at least locally. See, for example, [AAJ+16]. As a practical demonstration, the above algorithm (with ) was used to generate the results for overcomplete dictionary learning in Figure 2.6.

2.3.3 Learned Deep Sparse Coding

The main insight from the alternating minimization algorithm for overcomplete dictionary learning in the previous section (Equations 2.3.18 and 2.3.20) is to notice that when we fix , the ISTA update for (2.3.18) looks like the forward pass of a deep neural network with weights given by (and ). But in general, we do not know the true , and the current estimate could be erroneous. Hence it needs to be further updated using (2.3.20) based on the residual of using the current estimate of the sparse codes to reconstruct . The alternating minimization algorithm iterates these two procedures until convergence. But we can instead extrapolate, and design other learning procedures by combining these insights with techniques from deep learning. This leads to more interpretable network architectures, which will be a recurring theme throughout this manuscript.

Learned ISTA.

The above deep-network interpretation of the alternating minimization is more conceptual than practical, as the process could be rather inefficient and take many layers or iterations to converge. But this is mainly because we try to infer both and from . The problem can be significantly simplified and the above iterations can be made much more efficient in the supervised setting, where we have a dataset of input and output pairs distributed according to (2.3.14) and we only seek to learn for the layerwise learnable sparse coding iterations (2.3.29):

| (2.3.22) |

If we denote the operator for each iteration as , the above iteration can be illustrated in terms of a diagram:

Thus, given the sequential architecture, to learn the operator at each layer, it is completely natural to learn it, say via back propagation (BP),111111See Section A.2 for a brief description of BP. by minimizing the error between the final code and the ground truth :

| (2.3.23) |

This is the basis of the Learned ISTA (LISTA) algorithm [GL10], which can be viewed as the learning algorithm for a deep neural network, which tries to emulate the sparse encoding process from to . In particular, it can be viewed as a simple representation learning algorithm. In fact, this same methodology can be used as a basis to understand the representations computed in more powerful network architectures, such as transformers. We develop these implications in detail in Chapter 4.

Sparse Autoencoders.

The original motivation for overcomplete dictionary learning was to provide a simple generative model for high-dimensional data. We have seen with LISTA that, in addition, iterative algorithms for learning sparsely-used overcomplete dictionaries provide an interpretation for ReLU-like deep networks, which we will generalize in later chapters to more complex data distributions than (2.3.14). But it is also worth noting that even in the modern era of large models, the data generating model (2.3.14) provides a useful practical basis for interpreting features in pretrained large-scale deep networks, such as transformers, following the hypothesis that the (non-interpretable, a priori) features in these networks consist of sparse “superpositions” of underlying features, which are themselves interpretable [EHO+22a]. These unsupervised learning paradigms are generally more data friendly than LISTA, as well, which requires large amounts of labeled pairs for supervised training.

We can use our development of the LISTA algorithm above to understand common practices in this field of research. In the most straightforward instantiation (see [HCS+24, GTT+25]), a large number of features from a pretrained deep network are collected from different inputs , which themselves are chosen based on a desired interpretation task.121212For example, the inputs could correspond to texts containing samples of computer code in different programming languages, with our task being to try to identify interpretable features in a transformer feature map corresponding to different salient aspects of the input, such as the specific programming language (distinct across input “classes”) or the need to insert a matching parenthesis at the current position (common across input “classes”). We discuss the use of deep networks, and in particular transformers, for text representation learning in greater detail in Chapter 7. For simplicity, we will use to denote the pre-selected feature map in question, with -dimensional features; given sample inputs, let denote the full matrix of features of . Then a so-called sparse autoencoder , with decoder , is trained via the LASSO loss (2.3.5):

| (2.3.24) |

where the sparse autoencoder takes the form of a one-layer neural network, i.e. , where is the ReLU activation function, and the decoder is linear, so that .

The parameterization and training procedure (2.3.24) may initially seem to be an arbitrary application of deep learning to the sparse coding problem, but it is actually highly aligned with the algorithms we have studied above for layerwise sparse coding with a learned dictionary. In particular, recall the LISTA architecture . In the special case , we have

| (2.3.25) |

Let us assume that the sparse codes in question are nonnegative, i.e., that .131313In the data generating model (2.3.14), an arbitrary dictionary-and-sparse-code pair can be replaced by one in which simply by doubling the number of columns in , so from a modeling perspective, this is a very mild assumption. Then (see Example A.3), we can consider an equivalent LISTA architecture obtained from the sparse coding objective with an additional nonnegativity constraint on as

| (2.3.26) |

and after some algebra, express this as

| (2.3.27) |

Given the ability to change the sparse code initialization as a learnable parameter (which, in the current framework, must have all columns equal to the same learnable vector), this has the form of a ReLU neural network with learnable bias—identical to the sparse autoencoder ! Moreover, to decode the learned sparse codes , it is natural to apply the learned dictionary . Then the only difference between this and the SAE decoder is the additional bias , which can technically be absorbed into and in the training objective (2.3.24).

Thus, the SAE parameterization and training procedure coincides with LISTA training with , and a modified training objective—using the LASSO objective (2.3.5), which remains unsupervised, instead of the supervised reconstruction loss (2.3.23) used in vanilla LISTA. In particular, we can understand the SAE architecture in terms of our interpretation of the LISTA architecture in terms of layerwise sparse coding in (2.3.29). This connection is suggestive of a host of new design strategies for improving practical interpretability methodology, many of which remain tantalizingly unexplored. We begin to lay out some connections to broader autoencoding methodology in Chapter 5.

Layerwise learned sparse coding?

In the supervised setting, LISTA provides a deep neural network analogue of the sparse coding iteration, with layerwise-learned dictionaries, inspired by alternating minimization; even in the unsupervised setting, the same methodology can be applied to learning, as with sparse autoencoders. But the connection between low-dimensional-structure-seeking optimization algorithms and deep network architectures goes much deeper than this, and suggests an array of scalable and natural neural learning architectures which may even be usable without backpropagation.

As a simple illustration, we return the alternating minimization iterations (2.3.18) and (2.3.20). This scheme randomly re-initializes the dictionary on every such update. An improvement uses instead warm starting, where the residual is generated using the previous estimate for the dictionary. If we then view each ISTA update (2.3.18) as a layer and allow the associated dictionary, now coupled with the sparse code updates as , to update in time, this leads to a “layerwise-learnable” sparse coding scheme:

| (2.3.28) | |||

| (2.3.29) | |||

| (2.3.30) |

Note that this iteration corresponds to a relabeling of (2.3.18) and (2.3.20) for , over infinitely many blocks. Each of the ‘inner’ steps updating can be considered as a one-layer forward pass, while each of the ‘outer’ steps updating can be considered as a one-layer backward pass, of a primitive deep neural network. In particular, this algorithm is the simplest case in which a clear divide between forward optimization and backward learning manifests. This divide is still observed in current neural networks and autoencoders—we will have much more to say about it in Chapter 4 and in Chapter 5.

Notice that the above layer-wise scheme also suggests a plausible alternative to the current end-to-end optimization strategy that primarily relies on back propagation (BP) detailed in Section A.2.3. Freeing training large networks from BP would be one of the biggest challenges and opportunities in the future, as we will discuss more at the end of the book in Chapter 8.

2.4 Summary and Notes

The idealistic models we have presented in this chapter—PCA, ICA, and dictionary learning—were developed over the course of the twentieth century. Many books have been written solely about each method, so we will only attempt here to give a broad overview of the key works and history.

Jolliffe [Jol86] attributes principal component analysis to Pearson [Pea01], and independently Hotelling [Hot33]. In mathematics, the main result on the related problem of low-rank approximation in unitarily invariant norms is attributed to Eckart and Young [EY36], and to Mirsky for full generality [Mir60]. PCA continues to play an important role in research as perhaps the simplest model problem for unsupervised representation learning: as early as the 1980s, works such as [Oja82] and [BH89] used the problem to understand learning in primitive neural networks, and more recently, it has served as a tool for understanding more complex representation learning frameworks, such as diffusion models [WZZ+24].

Independent component analysis was proposed by [BJC85] and pioneered by Aapo Hyvärinen in the 1990s and early 2000s in a series of influential works: see [HO00a] for a summary. As a simple model for structure that arises in practical data, it initially saw significant use in applications such as blind source separation, where each independent component represents an independent source (such as sound associated to a distinct instrument in a musical recording) that is superimposed to produce the observation .

The problem of dictionary learning can, in the complete or orthogonal case, be seen as one of the foundational problems of twentieth-century signal processing, particularly in linear systems theory, where the Fourier basis plays the key role; from the 1980s onward, the field of computational harmonic analysis crystallized around the study of alternate such dictionaries for classes of signals in which optimal approximation could only be realized in a basis other than Fourier (e.g., wavelets) [DVD+98]. However, the importance of the case of redundant bases, or overcomplete dictionaries, was only highlighted following the pioneering work of Olshausen and Field [OF96, OF97]. Early subsequent work established the conceptual and algorithmic foundations for learning sparsely-used overcomplete dictionaries, often aimed at representing natural images [Don01, DM03, EA06, MK07, AEB06, MBP14, GJB15]. Later, a significant amount of theoretical interest in the problem, as an important and nontrivial model problem for unsupervised representation learning, led to its study by the signal processing, theoretical machine learning, and theoretical computer science communities, in particular focused on conditions under which the problem could be provably and efficiently solved. A non-exhaustive list of notable works in this line include those of [SWW12] and [SQW17] on the complete case; [AGM+15]; [BKS15]; and [QZL+20]. Many deep theoretical questions about this simple-to-state problem remain open, perhaps in part due to a tension with the problem’s worst-case NP-hardness (e.g., see [Til15]).

| Problem | Algorithm | Iteration | Type of Structure Enforced |

|---|---|---|---|

| PCA | Power Method | -dim. subspace (unit vector) | |

| ICA | FastICA | -dim. subspace (unit vector) | |

| Complete DL | MSP Algorithm | -dim. subspace (orthogonal matrix) |

One point that we wish to highlight from the study of these classical analytical models for low-dimensional structure is the common role played by various generalized power methods—algorithms that very rapidly converge, at least locally, to various types of low-dimensional structures. The terminology for this class of algorithms follows the work of [MYP+10]. At a high level, modeled on the classical power iteration for computation of the top eigenvector of a semidefinite matrix , that is

| (2.4.1) |

this class of algorithms consists of a “powering” operation involving a matrix associated to the data, along with a “projection” operation that enforces a desired type of structure. Table 2.1 presents a summary of the algorithms we have studied in this chapter that follow this structure. The reader may appreciate the applicability of this methodology to different types of low-dimensional structure, and different losses (i.e., both the quadratic loss from PCA, and the kurtosis-type losses from ICA), as well as the lack of such an algorithm for overcomplete dictionary learning, despite the breadth of the literature on these algorithms. We see the development of power methods for further families of low-dimensional structures, particularly those relevant to applications where deep learning is prevalent, as one of the more important (and open) research questions suggested by this chapter.

The connection we make in Section 2.2.1 between the geometric mixture-of-subspaces distributional assumption and the more analytically-convenient sparse dictionary assumption has been mentioned in prior work, especially by those focused on generalized principal component analysis and applications such as subspace clustering, e.g., work of [VMS16]. The mixture of subspaces assumption will continue to play a significant role throughout this manuscript, both as an analytical test case for different algorithmic paradigms, and as a foundation for deriving different deep network architectures, as with LISTA in Section 2.3.3, but which can scale to more complex data distributions.

2.5 Exercises and Extensions

Exercise 2.1.

Prove that, for any symmetric matrix , the solution to the problem is the matrix whose columns are the top unit eigenvectors of .

Exercise 2.2.

Let be a Gaussian random variable with independent components, each with variance . Prove that for any orthogonal matrix (i.e., ), the random variable is distributed identically to . (Hint: recall the formula for the Gaussian probability density function, and the formula for the density of a linear function of a random variable.)

Exercise 2.3.

The notion of statistical identifiability discussed above can be related to symmetries of the model class, allowing estimation to be understood in a purely deterministic fashion without any statistical assumptions.

Consider the model for matrices of compatible sizes.

-

1.

Show that if is any square invertible matrix of compatible size, then the pair also equals under the model. We call this a symmetry.

-

2.

Suppose each column of is an independent and identically distributed observation from a common statistical model , which moreover has zero mean and independent components with positive variance. Show that for any square invertible matrix , if has uncorrelated components, then can be written as , where is an orthogonal matrix and , are diagonal matrices. This links the “independence” assumption in ICA to a “symmetry breaking” effect, which only allows scale and rotational symmetries.

Exercise 2.4.

Consider the model , where with is fixed and has rank , and is a zero-mean random variable. Let denote i.i.d. observations from this model.

-

1.

Show that the matrix has rank no larger than , and therefore there is an orthonormal matrix so that , where . (Hint: use PCA.)

-

2.

Show that the whitened matrix exists in expectation whenever is nonsingular, and that it has identity empirical covariance.141414In particular, it can be proved mathematically that this is enough to guarantee that the whitened matrix exists with high probability whenever satisfies a suitable concentration inequality and is sufficiently large.

-

3.

Show, by using the singular value decomposition of , that the matrix can be chosen so that the whitened matrix satisfies , where is an orthonormal matrix.

Exercise 2.5.

Let and be zero-mean independent random variables.

-

1.

Show that .

-

2.

For any , show that .

Exercise 2.6.

Let be a given twice-continuously-differentiable objective function. Consider the spherically-constrained optimization problem

| (2.5.1) |

In this exercise, we will derive the expressions we gave in the FastICA derivation for maximizing kurtosis over the sphere via a gradient ascent algorithm. These expressions are special cases of a rich theory of calculus and optimization on manifolds, of which the sphere is a particular example. A deep technical study of this field is out-of-scope for our purposes, so we only mention two key references for the interested reader: the pioneering textbook by Absil, Mahony, and Sepulchre [AMS09], and a more recent introductory treatise by Boumal [Bou23].

-

1.

For any constraint set that is a differentiable submanifold of , the tangent space at a point is, informally, the best local linear approximation to the manifold at the point . In the important special case where is defined locally at as a level set of a function , that is

for some open set with , the tangent space to at can be calculated via differentiation:

It is easily seen that the sphere has the defining equation . Show, using these facts, that the tangent space to the sphere at is given by

and that the orthogonal projection onto this subspace is .

-

2.

The vector field

(2.5.2) is known as the Riemannian gradient of the function restricted to the sphere. The first order optimality conditions for the optimization problem (2.5.1) can be expressed in terms of the Riemann gradient:

Geometrically, this says that the Euclidean gradient of at must be orthogonal to the tangent space to the sphere at . Now suppose is nonzero. Show that

using the first-order optimality conditions.

-

3.

In optimization over , one checks the second-order optimality conditions (to determine whether a critical point is a maximizer, a minimizer, or a saddle point) using the Hessian matrix . Show, by differentiating the Riemann gradient for the sphere with respect to as in the first part of this exercise, that the corresponding object for determining second-order optimality conditions for sphere-constrained optimization is the Riemannian Hessian, defined as

(2.5.3)

Exercise 2.7.

In this exercise, we sketch an argument referred to in the literature as a landscape analysis for the spherically-constrained population kurtosis maximization problem (2.2.24). We will show that when there is at least one independent component with positive kurtosis, its global maximizers indeed lead to the recovery of one column of the dictionary . For simplicity, we will assume that for each .

-

1.

Using the results of Part 1 of Exercise 2.6, show that the first-order optimality condition for (2.2.24) is

(2.5.4) where the kurtosis is calculated elementwise, denotes elementwise multiplication of vectors and denotes the elementwise cube of its argument.

-

2.

Show that the vectors with unit norm that also satisfy (2.5.4) all take the following form. Let , and . Let be a subset either of or . Then

(2.5.5) satisfies (2.5.4), where is the vector with a in the -th position and s elsewhere, and the sign denotes the choice of either a positive or negative sign.

-

3.

Assume that there is at least one such that . Using the results of Part 2 of Exercise 2.6, show that the only local maxima of the objective of (2.2.24) are the signed one-sparse vectors with . Conclude that the global maximizers of (2.2.24) are the signed one-sparse vectors corresponding to components with maximum kurtosis. (Hint: count the number of positive and negative eigenvalues of the Riemannian Hessian (2.5.3) at each critical point.)

-

4.

Now assume that for every . This corresponds to an “over-deflated” instantiation of the kurtosis maximization problem. Using again the results of Part 2 of Exercise 2.6, show that the only local maxima of the objective of (2.2.24) are the signed dense vectors . This shows that the optimization formulation (2.2.24) cannot be applied naively.

Exercise 2.8.

This exercise follows the structure and formalism introduced in Exercise 2.6, but applies it instead to the orthogonal group . Consult the description of Exercise 2.6 for the necessary conceptual background; the formalism applies identically to the case where the ambient space is the set of matrices as long as one recalls that the relevant inner product on matrices is . An excellent general reference for facts about optimization on the orthogonal group is Edelman, Arias, and Smith [EAS98].

Let be a given twice-continuously-differentiable objective function. Consider the orthogonally-constrained optimization problem

| (2.5.6) |

-

1.

It is easily seen that the orthogonal group has the defining equation . Show, using this fact, that the tangent space to the orthogonal group at is given by

and that the orthogonal projection onto this subspace is

where is the orthogonal projection onto the set of skew-symmetric matrices. The vector field

(2.5.7) is known as the Riemannian gradient of the function restricted to the orthogonal group. The first order optimality conditions for the optimization problem (2.5.6) can be expressed in terms of the Riemann gradient:

-

2.

Show, by differentiating the Riemann gradient for the orthogonal group with respect to as in the first part of this exercise, that the Riemannian Hessian is given by

(2.5.8) where denotes the orthogonal projection onto the set of symmetric matrices, and denotes the Kronecker product of matrices. Take care to interpret the operators appearing in the previous expression as linear transformations on matrices, not as matrices themselves. The second-order optimality conditions for the optimization problem (2.5.6) can be expressed in terms of the Riemann Hessian:

For a minimization problem, the sign is reversed.

(Hint: The key is to manipulate one’s calculations to obtain the form (2.5.8), which is as compact as possible. To this end, make use of the following isomorphism of the Kronecker product: if , , and are matrices of compatible sizes, then one has

where denotes the “left-to-right” stacking of the columns of the matrix argument into a vector. We use this isomorphism in (2.5.8) in order to define the Kronecker product of two matrices as an operator on matrices in a canonical way.)

-

3.

Now suppose is full-rank. In this and the next part of the exercise, we consider the projection onto the orthogonal group of :

(2.5.9) We will prove that the solution to this problem is given by

where is a singular value decomposition of .

-

(a)

Using the first and second-order optimality conditions, show that every local minimizer of (2.5.9) satisfies

(Hint: use linearity of the Kronecker product in either of its two arguments when the other is fixed.)

-

(b)

Using these conditions, argue that at every local minimizer of (2.5.9), one has . (Hint: Use the fact from linear algebra that if is a symmetric positive semidefinite matrix, then .)

-

(c)

Using the singular value decomposition , conclude that

-

(a)