Chapter 1 An Informal Introduction

“Just as the constant increase of entropy is the basic law of the universe, so it is the basic law of life to be ever more highly structured and to struggle against entropy.”

– Václav Havel

1.1 Intelligence, Cybernetics, and Artificial Intelligence

The world we inhabit is neither fully random nor completely unpredictable. (If the world were fully random, an intelligent being would have no need to learn or memorize anything.) Instead, it follows certain orders, patterns, and laws, some deterministic and some probabilistic, that render it largely predictable. The very emergence and persistence of life depend on this predictability. Only by learning and memorizing what is predictable in the environment can life survive and thrive, since sound decisions and actions hinge on reliable predictions. Because the world offers seemingly unlimited predictable phenomena, intelligent beings (animals and humans) have evolved ever more acute senses: vision, hearing, touch, taste, and smell. These senses harvest high-throughput sensory data to perceive environmental regularities. Hence, a fundamental task for all intelligent beings is to

learn and memorize predictable information from massive amounts of sensed data.

Before we can understand how this is accomplished, we must address three questions:

- How can predictable information be modeled and represented mathematically?
- How can such information be computationally learned effectively and efficiently from data?
- How should this information be best organized to support future prediction and inference?

This book aims to provide some answers to these questions. These answers will help us better understand intelligence, especially the computational principles and mechanisms that enable it. Evidence suggests that all forms of intelligence—from low-level intelligence seen in early primitive life to the highest form of intelligence, the practice of modern science—share a common set of principles and mechanisms. We elaborate below.

Emergence and evolution of intelligence.

A necessary condition for the emergence of life on Earth about four billion years ago is that the environment is largely predictable. Life has developed mechanisms that allow it to learn what is predictable about the environment, encode this information, and use it for survival. Generally speaking, we call this ability to learn knowledge of the world intelligence. To a large extent, the evolution of life is the mechanism of intelligence at work [Ben23]. In early stages of life, intelligence is mainly developed through two types of learning mechanisms: phylogenetic and ontogenetic [Wie61].

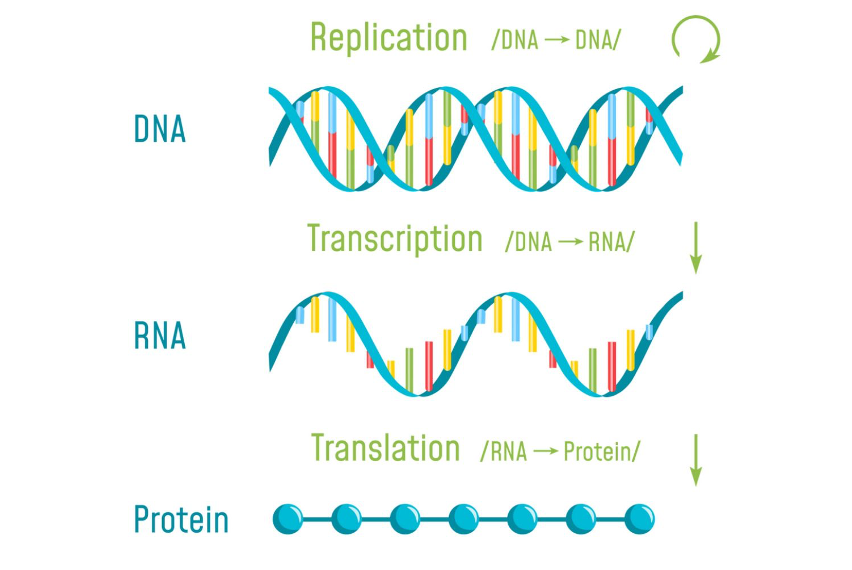

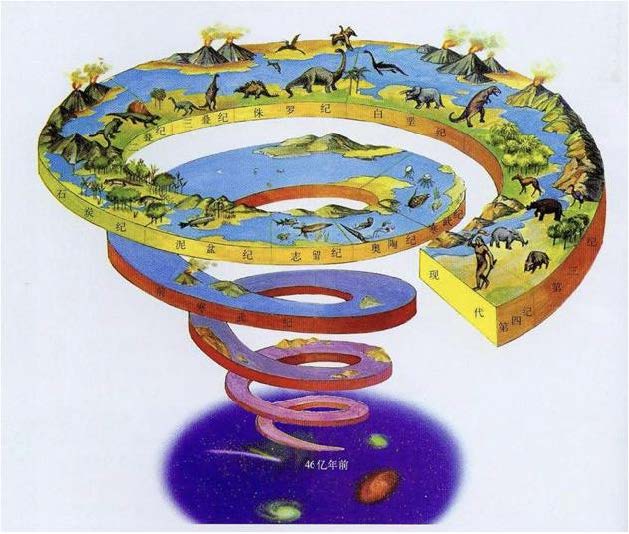

Phylogenetic intelligence refers to learning through the evolution of species. Species inherit and survive mainly based on knowledge encoded in the DNA or genes of their parents. To a large extent, we may call DNA nature’s pre-trained large model because it plays a very similar role. The main characteristic of phylogenetic intelligence is that individuals have limited learning capacity. Learning is carried out through a “trial-and-error” mechanism based on random mutation of genes, and species evolve through natural selection (survival of the fittest), as shown in Figure 1.1. This can be viewed as nature’s implementation of what is now known as “reinforcement learning” [SB18] or potentially “neural architecture search” [ZL17]. However, such a “trial-and-error” process can be extremely slow, costly, and unpredictable. Since the emergence of the first life forms about 4.4–3.8 billion years ago [BBH+15], life has relied on this form of evolution. (Astute readers may notice an uncanny similarity between how early life evolved and how large language models evolve today.)

![Figure 1.2 : Evolution of life, from the ancestor of all life today (LUCA—last universal common ancestor), a single-cell-like organism that lived 3.5–4.3 billion years ago [ MÁM+24 ] , to the emergence of the first nervous system in worm-like species (middle), about 550 million years ago [ WVM+25 ] , to the explosion of life forms in the Cambrian period (right), about 530 million years ago.](chapters/chapter1/figs/luca.jpeg)

![Figure 1.2 : Evolution of life, from the ancestor of all life today (LUCA—last universal common ancestor), a single-cell-like organism that lived 3.5–4.3 billion years ago [ MÁM+24 ] , to the emergence of the first nervous system in worm-like species (middle), about 550 million years ago [ WVM+25 ] , to the explosion of life forms in the Cambrian period (right), about 530 million years ago.](chapters/chapter1/figs/Worm.jpeg)

![Figure 1.2 : Evolution of life, from the ancestor of all life today (LUCA—last universal common ancestor), a single-cell-like organism that lived 3.5–4.3 billion years ago [ MÁM+24 ] , to the emergence of the first nervous system in worm-like species (middle), about 550 million years ago [ WVM+25 ] , to the explosion of life forms in the Cambrian period (right), about 530 million years ago.](chapters/chapter1/figs/Cambrian.jpg)

Ontogenetic intelligence refers to the learning mechanisms that allow an individual to learn through its own senses, memories, and predictions within its specific environment, and to improve and adapt its behaviors. Ontogenetic learning became possible after the emergence of the nervous system about 550–600 million years ago (in worm-like organisms) [WVM+25], shown in Figure 1.2 middle. With a sensory and nervous system, an individual can continuously form and improve its own knowledge about the world (memory), in addition to what is inherited from DNA or genes. This capability significantly enhanced individual survival and contributed to the Cambrian explosion of life forms about 530 million years ago [Par04]. Compared to phylogenetic learning, ontogenetic learning is more efficient and predictable, and can be realized within an individual’s resource limits.

Both types of learning rely on feedback from the external environment, namely penalties (death) or rewards (food), applied to a species’ or an individual’s actions (gene mutations in the case of a species, actions taken in the case of an individual). This insight inspired Norbert Wiener to conclude in his Cybernetics program [Wie48] that all intelligent beings, whether species or individuals, rely on closed-loop feedback mechanisms to learn and improve their knowledge about the world. Furthermore, from plants to fish, birds, and mammals, more advanced species increasingly rely on ontogenetic learning: they remain with and learn from their parents for longer periods after birth, because individuals of the same species must survive in very diverse environments.

Evolution of human intelligence.

Since the emergence of the genus Homo about 2.5 million years ago [Har15], a new, higher form of intelligence has arisen that evolves more efficiently and economically. Human societies developed languages, first spoken and later written, as shown in Figure 1.3. Language enables individuals to communicate and share useful information, allowing a human community to behave as a single intelligent organism that learns faster and retains more knowledge than any individual. Written texts thus play a role analogous to DNA and genes, enabling societies to accumulate and transmit knowledge across generations. We may refer to this type of intelligence as societal intelligence, distinguishing it from the phylogenetic intelligence of species and the ontogenetic intelligence of individuals. This knowledge accumulation underpins (ancient) civilizations.

![Figure 1.3 : The development of verbal communication and spoken languages (70,000–30,000 years ago), written languages (about 3000 BC) [ Sch14 ] , and abstract mathematics (around 500–300 BC) [ Hea+56 ] mark three key milestones in the evolution of human intelligence.](chapters/chapter1/figs/Spoken-language.jpg)

![Figure 1.3 : The development of verbal communication and spoken languages (70,000–30,000 years ago), written languages (about 3000 BC) [ Sch14 ] , and abstract mathematics (around 500–300 BC) [ Hea+56 ] mark three key milestones in the evolution of human intelligence.](chapters/chapter1/figs/Cuneiform.png)

![Figure 1.3 : The development of verbal communication and spoken languages (70,000–30,000 years ago), written languages (about 3000 BC) [ Sch14 ] , and abstract mathematics (around 500–300 BC) [ Hea+56 ] mark three key milestones in the evolution of human intelligence.](chapters/chapter1/figs/adopt-euclid1685-2.jpg)

About two to three thousand years ago, human intelligence took another major leap, enabling philosophers and mathematicians to develop knowledge that goes far beyond organizing empirical observations. The development of abstract concepts and symbols, such as numbers, time, space, logic, and geometry, gave rise to an entirely new and rigorous language of mathematics. In addition, the ability to generate hypotheses and verify their correctness through logical deduction or experimentation laid the foundation for modern science. For the first time, humans could proactively and systematically discover and develop new knowledge. We will call this advanced form of intelligence “scientific intelligence” because it is what makes deductive reasoning and scientific discovery possible.

Hence, from what we can learn from nature, whenever we use the word “intelligence,” we must be specific about which level or form we mean:

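$$\text{phylogenetic} \;\prec\; \text{ontogenetic} \;\prec\; \text{societal} \;\prec\; \text{scientific intelligence}. \tag{1.1.1}$$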

Clear characterization and distinction are necessary because we want to study intelligence as a scientific and mathematical subject. Although all forms may share the common objective of learning useful knowledge about the world, the specific computational mechanisms and physical implementations behind each level could differ. We believe the reader will better understand and appreciate these differences after studying this book. Therefore, we leave further discussion of general intelligence to the final chapter, Chapter 8.

Origin of machine intelligence—cybernetics.

In the 1940s, spurred by the war effort, scientists inspired by natural intelligence sought to emulate animal intelligence with machines, giving rise to the “Cybernetics” movement championed by Norbert Wiener [Kli11]. Wiener studied zoology at Harvard as an undergraduate before becoming a mathematician and control theorist. He devoted his life to understanding and building autonomous systems that could reproduce animal-like intelligence. Today, the Cybernetics program is often narrowly interpreted as being mainly about feedback control systems, the area in which Wiener made his most significant technical contributions. Yet the program was far broader and deeper: it aimed to understand intelligence as a whole—at least at the animal level—and influenced the work of an entire generation of renowned scientists, including Warren McCulloch, Walter Pitts, Claude Shannon, John von Neumann, and Alan Turing.

![Figure 1.4 : Norbert Wiener’s book “Cybernetics” (1948) [ Wie48 ] (left) and its second edition (1961) [ Wie61 ] (right).](chapters/chapter1/figs/Cybernetics1.jpg)

![Figure 1.4 : Norbert Wiener’s book “Cybernetics” (1948) [ Wie48 ] (left) and its second edition (1961) [ Wie61 ] (right).](chapters/chapter1/figs/Cybernetics2.jpg)

Wiener was arguably the first to study intelligence as a system, rather than focusing on isolated components or aspects of intelligence. His comprehensive views appeared in the celebrated 1948 book Cybernetics: or Control and Communication in the Animal and the Machine [Wie48]. In that book and its second edition published in 1961 [Wie61] (see Figure 1.4), he attempted to identify several necessary characteristics and mechanisms of intelligent systems, including (but not limited to):

- How to measure and store information (in the brain) and how to communicate with others. (Wiener was the first to point out that “information” is neither matter nor energy, but an independent quantity worthy of study. This insight led Claude Shannon to formulate information theory in 1948 [Sha48].)
- How to correct errors in prediction and estimation based on existing information. Wiener himself helped formalize the theory of (closed-loop) feedback control in the 1940s.
- How to learn to make better decisions when interacting with a non-cooperative or even adversarial environment. John von Neumann formalized this as game theory in 1944 [NMR44].

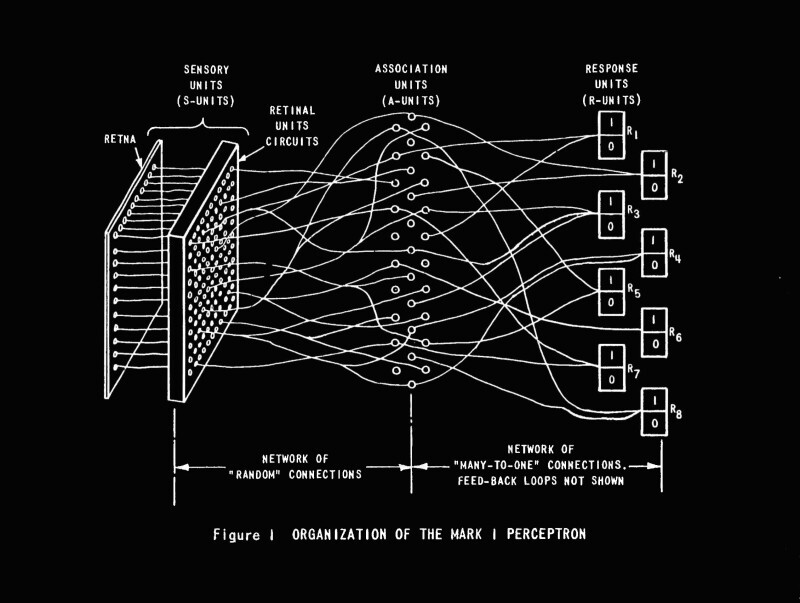

In 1943, inspired by Wiener’s Cybernetics program, cognitive scientist Warren McCulloch and logician Walter Pitts jointly formalized the first computational model of a neuron [MP43], called an artificial neuron and illustrated later in Figure 1.13. Building on this model, Frank Rosenblatt constructed the Mark I Perceptron in the 1950s—a physical machine containing hundreds of such artificial neurons [Ros57]. The Perceptron was the first physically realized artificial neural network; see Figure 1.15. Notably, John von Neumann’s universal computer architecture, proposed in 1945, was also designed to facilitate the goal of building computing machines that could physically realize the mechanisms suggested by the Cybernetics program [Neu58].

Astute readers will notice that the 1940s were truly magical: many fundamental ideas were invented and influential theories formalized, including the mathematical model of neurons, artificial neural networks, information theory, control theory, game theory, and computing machines. Figure 1.5 portrays some of the pioneers. Each of these contributions has grown to become the foundation of a scientific or engineering field and continues to have tremendous impact on our lives. All were inspired by the goal of developing machines that emulate intelligence in nature. Historical records show that Wiener’s Cybernetics movement influenced nearly all of these pioneers and works. To a large extent, Wiener’s program can be viewed as the true predecessor of today’s “embodied intelligence” program; in fact, Wiener himself articulated a vision for such a program with remarkable clarity and concreteness [Wie61].

Although Wiener identified many key characteristics and mechanisms of (embodied) intelligence, he offered no clear recipe for integrating them into a complete autonomous intelligent system. From today’s perspective, some of his views were incomplete or inaccurate. In particular, in the last chapter of the second edition of Cybernetics [Wie61], he stressed the need to deal with nonlinearity if machines are to emulate typical learning mechanisms in nature, yet he provided no concrete, effective solution for this difficult issue. In fairness, even the theory of linear systems was in its infancy at the time, and nonlinear systems seemed far less approachable.

Nevertheless, we cannot help but marvel at Wiener’s prescience about the importance of nonlinearity. As this book will show, the answer came only recently: nonlinearity can be handled effectively through progressive linearization and transformation realized by deep networks and representations (see Chapter 4). Moreover, we will demonstrate in this book how all the mechanisms listed above can be naturally integrated into a complete system that exhibits certain characteristics of an autonomous intelligent system (see Chapter 5).

Origin of artificial intelligence.

The subtitle of Wiener’s Cybernetics—Control and Communication in the Animal and the Machine—reveals that 1940s research aimed primarily at emulating animal-level intelligence. As noted earlier, the agendas of that era were dominated by Wiener’s Cybernetics movement.

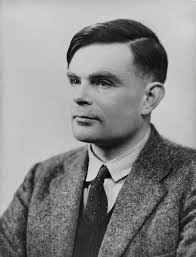

Alan Turing was among the first to recognize this limitation. In his celebrated 1950 paper “Computing Machinery and Intelligence” [Tur50], Turing formally posed the question of whether machines could imitate human-level intelligence, to the point of machine intelligence being indistinguishable from human intelligence—now known as the Turing test.

Around 1955, a group of ambitious young scientists sought to break away from the then-dominant Cybernetics program and establish their own legacy. They accepted Turing’s challenge of imitating human intelligence and proposed a workshop at Dartmouth College to be held in the summer of 1956. Their proposal stated [MMR+06]:

“The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.”

They aimed to formalize and study the higher-level intelligence that distinguishes humans from animals. Their agenda included abstraction, symbolic methods, natural language, and deductive reasoning (causal inference, logical deduction, etc.), i.e., scientific intelligence. The organizer of the workshop, John McCarthy, then a young assistant professor of mathematics at Dartmouth College, coined the now-famous term “Artificial Intelligence” (AI) to formally describe the problems and goals of the workshop: building machines that exhibit scientific intelligence.

Renaissance of “artificial intelligence” or “cybernetics”?

Over the past decade, machine intelligence has undergone explosive development, driven largely by deep artificial neural networks, sparked by the 2012 work of Geoffrey Hinton and his students [KSH12]. This period is hailed as the “Renaissance of AI.” Yet, in terms of the tasks actually tackled (recognition, generation, prediction) and the techniques developed (reinforcement learning, imitation learning, encoding, decoding, denoising, and compression), we are largely emulating mechanisms common to the intelligence of early life and animals. Even in this regard, as we will clarify in this book, current “AI” models and systems have not fully or correctly implemented all necessary mechanisms for intelligence at the animal level which were known to the 1940s Cybernetics movement.

Strictly speaking, the recent advances of machine intelligence in the past decade do not align well with the 1956 Dartmouth AI program. Instead, what has been achieved is closer to the objectives of Wiener’s classic 1940s Cybernetics program. It is probably more appropriate to call the current era the “Renaissance of Cybernetics.” (The recent rise of so-called “Embodied AI” for autonomous robots aligns even more closely with the goals of the Cybernetics program.) Only after we fully understand, from scientific and mathematical perspectives, what we have truly accomplished can we determine what remains and which directions lead to the true nature of intelligence. That is one of the main purposes of this book.

1.2 What to Learn?

1.2.1 Predictability

Data that carry useful information manifest in many forms. In their most natural form, they can be modeled as sequences that are predictable and computable. The notion of a predictable and computable sequence was central to the theory of computing and largely led to the invention of computers [Tur36]. The role of predictable sequences in (inductive) inference was studied by Ray Solomonoff, Andrey Kolmogorov, and others in the 1960s [Kol98] as a generalization of Claude Shannon’s classic information theory [Sha48]. To understand the concept of predictable sequences, we begin with some concrete examples.

Scalar case.

The simplest predictable discrete sequence is arguably the sequence of natural numbers:

$$S = 1, 2, 3, 4, 5, 6, \ldots, n, n+1, \ldots \tag{1.2.1}$$

in which the next number is defined as the previous number plus 1:

$$x_{n+1} = x_n + 1. \tag{1.2.2}$$

One may generalize the notion of predictability to any sequence $\{x_n\}_{n=1}^{\infty}$ with $x_n \in \mathbb{R}$ if the next number $x_{n+1}$ can always be computed from its predecessor $x_n$:

$$x_{n+1} = f(x_n), \quad x_n \in \mathbb{R}, \; n = 1, 2, 3, \ldots \tag{1.2.3}$$

where $f(\cdot)$ is a computable (scalar) function. (Here we emphasize that the function $f(\cdot)$ itself is computable, meaning it can be implemented as a program on a computer. Alan Turing’s seminal work in 1936 [Tur36] gives a rigorous definition of computability.) In practice, we often further assume that $f$ is efficiently computable and has nice properties such as continuity and differentiability. The necessity of these properties will become clear later once we understand more refined notions of computability and their roles in machine learning and intelligence.

Multivariable case.

The next value may also depend on two predecessors. For example, the famous Fibonacci sequence

$$S = 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, \ldots \tag{1.2.4}$$

satisfies

$$x_{n+2} = x_{n+1} + x_n, \quad x_n \in \mathbb{R}, \; n = 1, 2, 3, \ldots \tag{1.2.5}$$

More generally, we may write

$$x_{n+2} = f(x_{n+1}, x_n) \tag{1.2.6}$$

for any computable function $f$ that takes two inputs. Extending further, the next value may depend on the preceding $d$ values:

$$x_{n+d} = f(x_{n+d-1}, \ldots, x_n). \tag{1.2.7}$$

The integer $d$ is called the degree of the recursion. The above expression (1.2.7) is called an autoregression, and the resulting sequence is autoregressive. When $f$ is linear, we say it is a linear autoregression.
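As a minimal sketch of the autoregression described above, the following Python generator produces a sequence in which each new value is computed from the preceding values. The helper name `autoregress` and its argument conventions are illustrative assumptions, not part of the text.

```python
from itertools import islice

def autoregress(f, init):
    """Yield x_1, x_2, ... where each new value is f of the previous d values.

    `init` holds the first d values, oldest first; `f` receives the last d
    values newest first, mirroring x_{n+d} = f(x_{n+d-1}, ..., x_n).
    """
    window = list(init)
    yield from window
    while True:
        nxt = f(*reversed(window))
        yield nxt
        window = window[1:] + [nxt]   # slide the window forward by one step

naturals = autoregress(lambda x: x + 1, [1])             # degree d = 1
fibonacci = autoregress(lambda x1, x0: x1 + x0, [1, 1])  # degree d = 2

print(list(islice(naturals, 6)))     # [1, 2, 3, 4, 5, 6]
print(list(islice(fibonacci, 10)))   # [1, 1, 2, 3, 5, 8, 13, 21, 34, 55]
```

The same generator covers both the natural numbers and the Fibonacci sequence; only the function `f` and the number of initial values change.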

Vector case.

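To simplify notation, we define a vector $\mathbf{x}_n$ that collects $d$ consecutive values in the sequence:

$$\mathbf{x}_n \doteq [x_{n+d-1}, \ldots, x_n]^\top \in \mathbb{R}^d. \tag{1.2.8}$$

With this notation, the recursive relation (1.2.7) becomes

$$\mathbf{x}_{n+1} = g(\mathbf{x}_n) \in \mathbb{R}^d, \tag{1.2.9}$$

where $g(\cdot)$ is uniquely determined by the function $f$ in (1.2.7) and maps a $d$-dimensional vector to a $d$-dimensional vector. In different contexts, such a vector is sometimes called a “state” or a “token.” Note that (1.2.7) defines a mapping $f: \mathbb{R}^d \to \mathbb{R}$, whereas here we have $g: \mathbb{R}^d \to \mathbb{R}^d$.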
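To make the passage from the scalar recursion to the vector form concrete, here is a small sketch. It assumes the state is ordered newest value first; the helper name `make_state_map` is hypothetical. The state map computes one new value with the scalar function and shifts the remaining entries.

```python
def make_state_map(f):
    """Lift a scalar recursion f: R^d -> R to a state map g: R^d -> R^d.

    The state is ordered newest first, [x_{n+d-1}, ..., x_n].
    """
    def g(state):
        # New value goes in front; the oldest value is dropped.
        return [f(*state)] + list(state[:-1])
    return g

g = make_state_map(lambda x1, x0: x1 + x0)   # Fibonacci recursion, d = 2
state = [1, 1]                               # [x_2, x_1]
for _ in range(5):
    state = g(state)
print(state)                                 # [13, 8], i.e. [x_7, x_6]
```

Only the first entry of the new state requires evaluating `f`; the rest is a shift, which is why the state map is uniquely determined by the scalar function.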

Controlled prediction.

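We may also define a predictable sequence that depends on another predictable sequence as input:

$$\mathbf{x}_{n+1} = f(\mathbf{x}_n, \mathbf{u}_n) \in \mathbb{R}^d, \tag{1.2.10}$$

where $\{\mathbf{u}_n\}$ with $\mathbf{u}_n \in \mathbb{R}^k$ is a (computable) predictable sequence. In other words, the next vector $\mathbf{x}_{n+1}$ depends on both $\mathbf{x}_n$ and $\mathbf{u}_n$. In control theory, the sequence $\{\mathbf{u}_n\}$ is often referred to as the “control input” and $\mathbf{x}_n$ as the “state” or “output” of the system (1.2.10). A classic example is a linear dynamical system:

$$\mathbf{x}_{n+1} = A\mathbf{x}_n + B\mathbf{u}_n, \quad A \in \mathbb{R}^{d \times d}, \; B \in \mathbb{R}^{d \times k}, \tag{1.2.11}$$

which is widely studied in control theory [CD91].

Often the control input is given by a computable function of the state $\mathbf{x}_n$ itself:

$$\mathbf{u}_n = h(\mathbf{x}_n). \tag{1.2.12}$$

As a result, the sequence $\{\mathbf{x}_n\}$ is given by composing the two computable functions $f$ and $h$:

$$\mathbf{x}_{n+1} = f(\mathbf{x}_n, h(\mathbf{x}_n)). \tag{1.2.13}$$

In this way, the sequence $\{\mathbf{x}_n\}$ again becomes an autoregressive predictable sequence. When the input $\mathbf{u}_n$ depends on the output $\mathbf{x}_n$, we say the resulting sequence is produced by a “closed-loop” system (1.2.13). As the closed-loop system no longer depends on any external input, we say such a system has become autonomous. It can be viewed as a special case of autoregression. For instance, if we choose $\mathbf{u}_n = K\mathbf{x}_n$ with $K \in \mathbb{R}^{k \times d}$ in the above linear system (1.2.11), the closed-loop system becomes

$$\mathbf{x}_{n+1} = A\mathbf{x}_n + BK\mathbf{x}_n = (A + BK)\mathbf{x}_n, \tag{1.2.14}$$

which is a linear autoregression.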
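The following NumPy sketch illustrates the closed-loop construction for a linear system with linear state feedback. The matrices `A`, `B` and the feedback gain `K` are made-up illustrative values. The check confirms that feeding the state back as input yields the same trajectory as a single autonomous linear recursion.

```python
import numpy as np

A = np.array([[1.0, 1.0],
              [0.0, 1.0]])      # open-loop dynamics (illustrative values)
B = np.array([[0.0],
              [1.0]])           # input matrix, one-dimensional input
K = np.array([[-0.5, -1.0]])    # hypothetical feedback gain, u_n = K x_n

def step(x, u):
    """One step of the controlled linear system x_{n+1} = A x_n + B u_n."""
    return A @ x + B @ u

x0 = np.array([1.0, 0.0])
x = x0.copy()
for _ in range(10):
    x = step(x, K @ x)          # close the loop: input computed from state

A_cl = A + B @ K                # closed-loop (autonomous) system matrix
x_autonomous = np.linalg.matrix_power(A_cl, 10) @ x0
print(np.allclose(x, x_autonomous))
```

Once the loop is closed, no external input remains, so the whole trajectory is determined by the initial state and the single matrix `A_cl`.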

Continuous processes.

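Predictable sequences have natural continuous counterparts, which we call predictable processes. The simplest such process is time itself, $x(t) = t$.

More generally, a process $\mathbf{x}(t)$ is predictable if, at every time $t$, its value at $t + \delta t$ is determined by its value at $t$, where $\delta t$ is an infinitesimal increment. Typically, $\mathbf{x}(t)$ is continuous and smooth, so the change $\mathbf{x}(t + \delta t) - \mathbf{x}(t)$ is infinitesimally small. Such processes are typically described by (multivariate) differential equations

$$\dot{\mathbf{x}}(t) = f(\mathbf{x}(t)), \quad \mathbf{x} \in \mathbb{R}^d. \tag{1.2.15}$$

In systems theory [CD91, Sas99], the equation (1.2.15) is known as a state-space model. A controlled process is given by

$$\dot{\mathbf{x}}(t) = f(\mathbf{x}(t), \mathbf{u}(t)), \quad \mathbf{x} \in \mathbb{R}^d, \; \mathbf{u} \in \mathbb{R}^k, \tag{1.2.16}$$

where $\mathbf{u}(t)$ is a computable input process.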

Example 1.1.

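Newton’s second law predicts the trajectory $\mathbf{x}(t) \in \mathbb{R}^3$ of a moving object of mass $m$ under a force $\mathbf{F}(t) \in \mathbb{R}^3$:

$$m\,\ddot{\mathbf{x}}(t) = \mathbf{F}(t). \tag{1.2.17}$$

When there is no force, i.e., $\mathbf{F}(t) \equiv \mathbf{0}$, this reduces to Newton’s first law: the object moves at constant velocity $\mathbf{v} \in \mathbb{R}^3$:

$$\ddot{\mathbf{x}}(t) = \mathbf{0} \quad \Longleftrightarrow \quad \dot{\mathbf{x}}(t) = \mathbf{v}. \tag{1.2.18}$$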
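As a rough numerical illustration of this example, the sketch below integrates Newton’s second law in one dimension with a forward-Euler scheme. The function name `simulate`, the step size, and the one-dimensional simplification are assumptions made for illustration. Position and velocity together form the state of a first-order state-space model.

```python
def simulate(force, x0, v0, mass=1.0, dt=0.01, steps=1000):
    """Forward-Euler integration of m * x''(t) = F(t) in one dimension.

    The state (x, v) follows the first-order model x' = v, v' = F(t) / m.
    """
    x, v = x0, v0
    for i in range(steps):
        t = i * dt
        x, v = x + dt * v, v + dt * force(t) / mass
    return x, v

# No force: the velocity stays constant, so x(T) = x0 + v0 * T with T = 10.
x_free, v_free = simulate(lambda t: 0.0, x0=0.0, v0=2.0)
# Constant unit force with unit mass: the velocity grows linearly in time.
x_push, v_push = simulate(lambda t: 1.0, x0=0.0, v0=0.0)
print(x_free, v_free, v_push)
```

With zero force the simulated object ends near position 20 with unchanged velocity 2, matching the first-law special case; with a constant unit force the final velocity is close to 10.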

1.2.2 Low Dimensionality

Learning to predict.

Now suppose you have observed or have been given many sequence segments:

$$\{S_1, S_2, \ldots, S_i, \ldots, S_N\} \tag{1.2.19}$$

drawn from a predictable sequence $\{x_n\}_{n=1}^{\infty}$. Without loss of generality, assume each segment has length $D \gg d$, so

$$S_i = [x_{j(i)}, x_{j(i)+1}, \ldots, x_{j(i)+D-1}]^\top \in \mathbb{R}^D \tag{1.2.20}$$

for some $j(i) \in \mathbb{N}$. You are then given a new segment $S_t$ and asked to predict its future values.

The difficulty is that the generating function $f$ and its order $d$ are unknown:

$$x_{n+d} = f(x_{n+d-1}, \ldots, x_n). \tag{1.2.21}$$

The goal is therefore to learn $f$ and $d$ from the sample segments $S_1, S_2, \ldots, S_N$. The central task of learning to predict is:

Given many sampled segments of a predictable sequence, how can we effectively and efficiently identify the function $f$?

Predictability and low-dimensionality.

To identify the predictive function $f$, we may notice a common characteristic of segments of any predictable sequence given by (1.2.21). If we take a long segment, say of length $D \gg d$, and view it as a vector

$$\mathbf{x}_i = [x_i, x_{i+1}, \ldots, x_{i+D-1}]^\top \in \mathbb{R}^D, \tag{1.2.22}$$

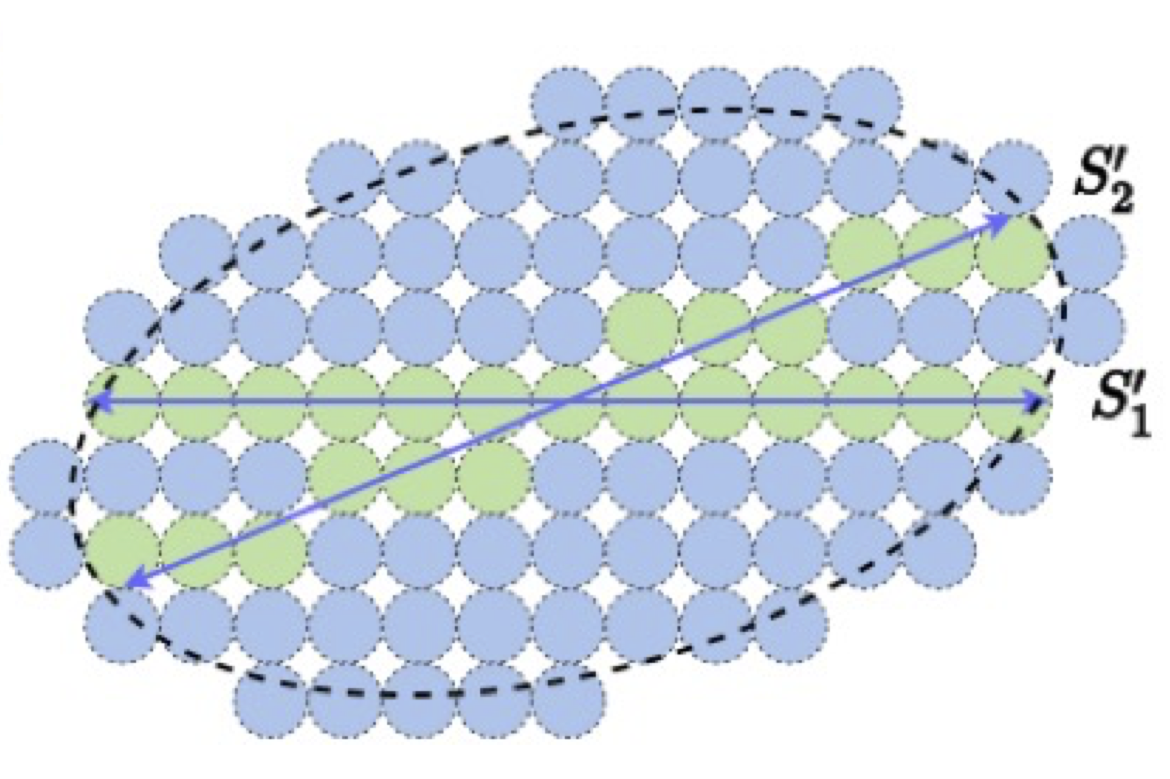

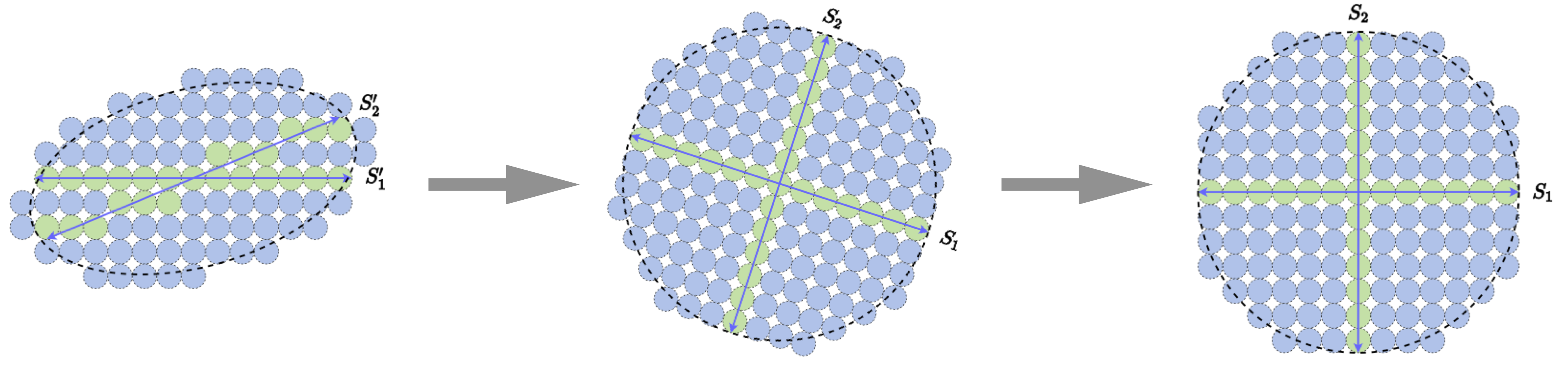

then the set of all such vectors $\{\mathbf{x}_i\}$ is far from random and cannot occupy the entire space $\mathbb{R}^D$. Instead, it has at most $d$ degrees of freedom: given the first $d$ entries of any $\mathbf{x}_i$, the remaining entries are uniquely determined. In other words, all $\{\mathbf{x}_i\}$ lie on a $d$-dimensional surface. In mathematics, such a surface is called a submanifold, denoted $\mathcal{S} \subset \mathbb{R}^D$.

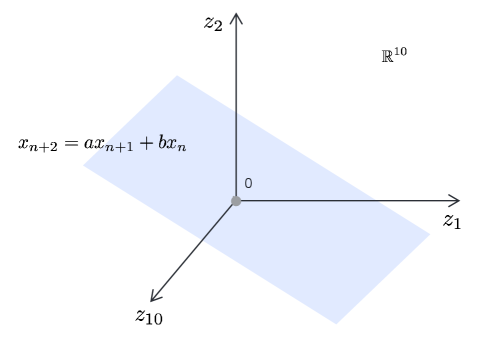

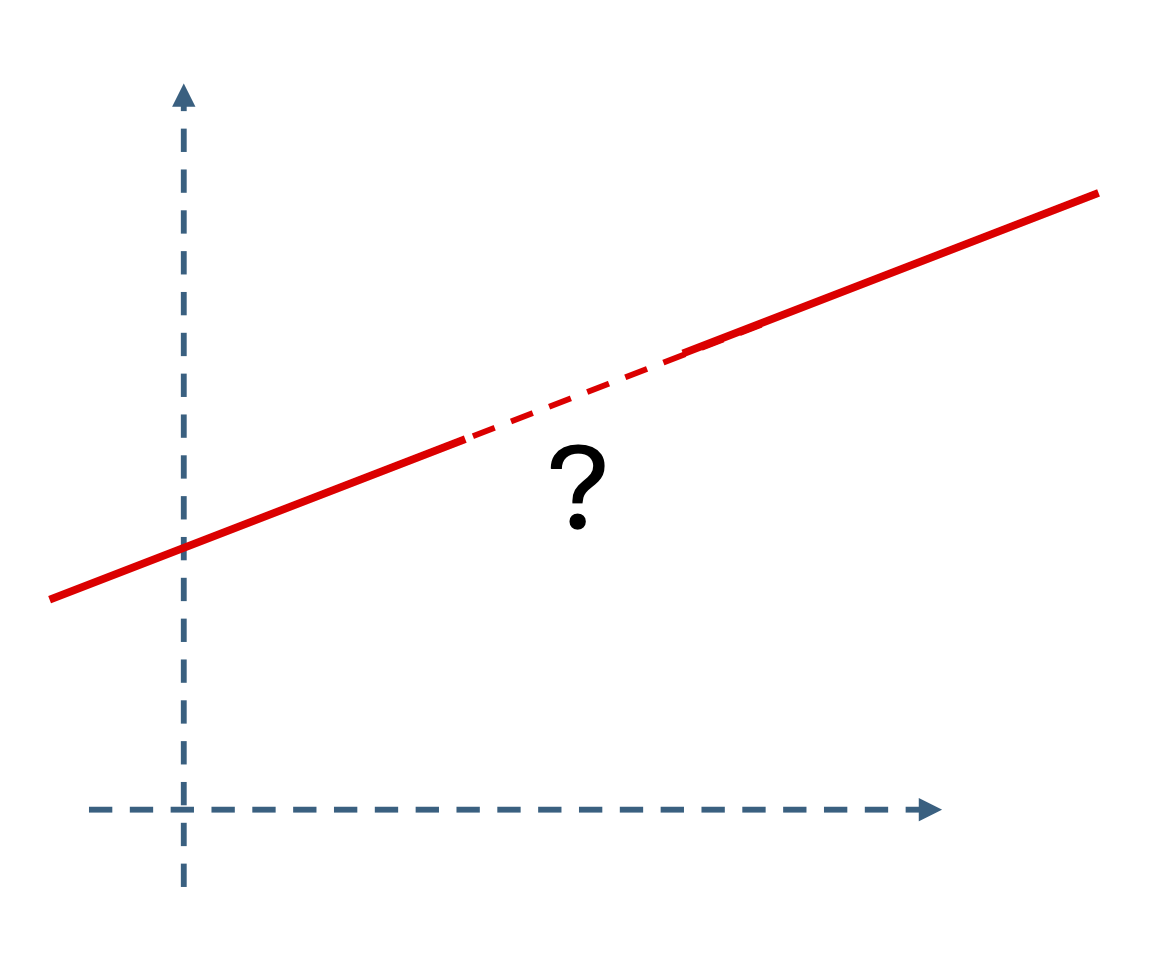

In practice, if we choose the segment length $D$ large enough, all segments sampled from the same predicting function lie on a surface with intrinsic dimension $d$, significantly lower than the dimension $D$ of the ambient space. For example, if the sequence is given by the linear autoregression

$$x_{n+2} = a \cdot x_{n+1} + b \cdot x_n \tag{1.2.23}$$

for some constants $a, b \in \mathbb{R}$, and we sample segments of length $D = 3$, then all samples lie on a two-dimensional plane in $\mathbb{R}^3$, as illustrated in Figure 1.6. Identifying this two-dimensional subspace fully determines the constants $a$ and $b$ in (1.2.23).
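The claim that identifying the plane determines the two constants can be checked numerically. The sketch below assumes illustrative constants and initial values. It stacks all length-3 segments of a second-order linear autoregression, uses a singular value decomposition to confirm that they span only two dimensions, and reads the constants off the normal vector of the plane.

```python
import numpy as np

a, b = 0.5, -0.8                      # illustrative constants
x = [1.0, 0.0]                        # arbitrary initial values
for _ in range(28):
    x.append(a * x[-1] + b * x[-2])

# Stack all length-3 segments [x_n, x_{n+1}, x_{n+2}] as rows.
segments = np.array([x[n:n + 3] for n in range(len(x) - 2)])

# The singular values reveal the dimension spanned by the segments.
_, s, Vt = np.linalg.svd(segments)
normal = Vt[-1]                       # direction orthogonal to the plane

# Every segment satisfies x_{n+2} - a x_{n+1} - b x_n = 0, so the normal
# vector is proportional to [-b, -a, 1].
b_hat, a_hat = -normal[0] / normal[2], -normal[1] / normal[2]
print(s, a_hat, b_hat)
```

The third singular value is numerically zero, so the samples lie on a plane, and the recovered values agree with the constants used to generate the sequence. This is a noise-free sketch; with noisy samples the smallest singular value would be small rather than zero.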

More generally, when the predicting function is linear, as in the systems given in (1.2.11) and (1.2.14), the long segments always lie on a low-dimensional linear subspace. Identifying the predicting function is then largely equivalent to identifying this subspace, a problem known as principal component analysis. We will discuss such classic models and methods in Chapter 2.

This observation extends to general predictable sequences: if we can identify the low-dimensional surface on which the segment samples lie, we can identify the predictive function $f$. (Under mild conditions, there is a one-to-one mapping between the low-dimensional surface and the function $f$. This fact has been exploited in problems such as system identification, which we will discuss later.) We cannot overemphasize the importance of this property: All samples of long segments of a predictable sequence lie on a low-dimensional submanifold. As we will see in this book, all modern learning methods exploit this property, implicitly or explicitly.

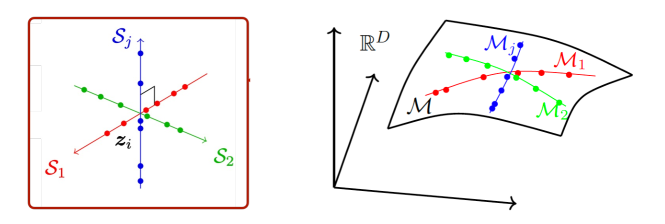

In real-world scenarios, observed data often come from multiple predictable sequences. For example, a video sequence may contain several moving objects. In such cases, the data lie on a mixture of low-dimensional linear subspaces or nonlinear submanifolds, as illustrated in Figure 1.7.

Properties of low-dimensionality.

Temporal correlation in predictable sequences is not the only reason data are low-dimensional. For example, the space of all images is vast, yet most of it consists of structureless random images, as shown in Figure 1.8 (left). Natural images and videos, however, are highly redundant because of strong spatial and temporal correlations among pixel values. This redundancy allows us to recognize easily whether an image is noisy or clean, as shown in Figure 1.8 (middle and right). Consequently, the distribution of natural images has a very low intrinsic dimension relative to the total number of pixels.

Because learning low-dimensional structures is both important and ubiquitous, the book High-Dimensional Data Analysis with Low-Dimensional Models: Principles, Computation, and Applications [WM22] begins with the statement: “The problem of identifying the low-dimensional structure of signals or data in high-dimensional spaces is one of the most fundamental problems that, through a long history, interweaves many engineering and mathematical fields such as system theory, signal processing, pattern recognition, machine learning, and statistics.”

By constraining the observed data point $\mathbf{x}$ to lie on a low-dimensional surface, we make its entries highly dependent on one another and, in a sense, “predictable” from the values of other entries. For example, if we know the data $\mathbf{x}$ are constrained to a $d$-dimensional surface in $\mathbb{R}^D$, we can perform several useful tasks beyond prediction:

-

•

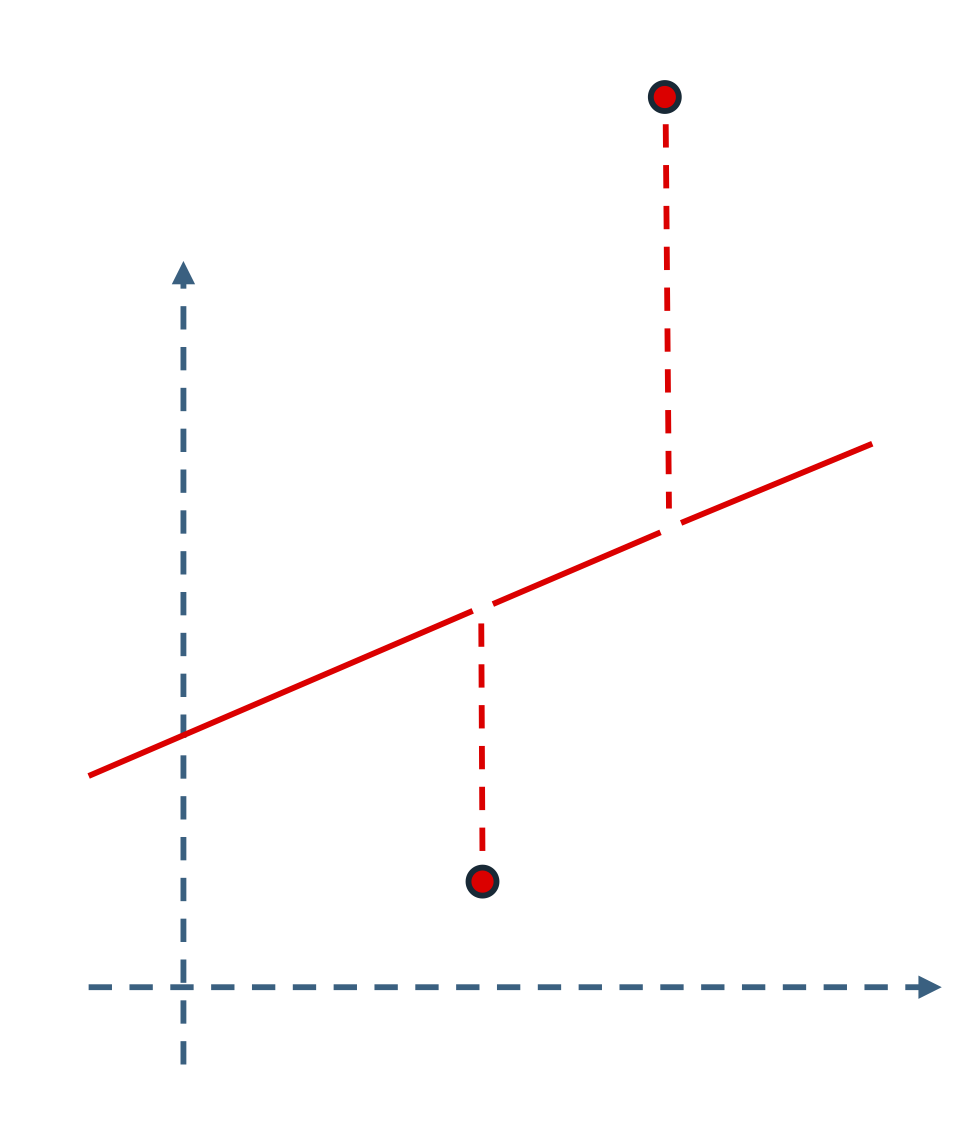

completion: given more than entries of a typical sample , the remaining entries can usually be uniquely determined;999Prediction becomes a special case of this property.

-

•

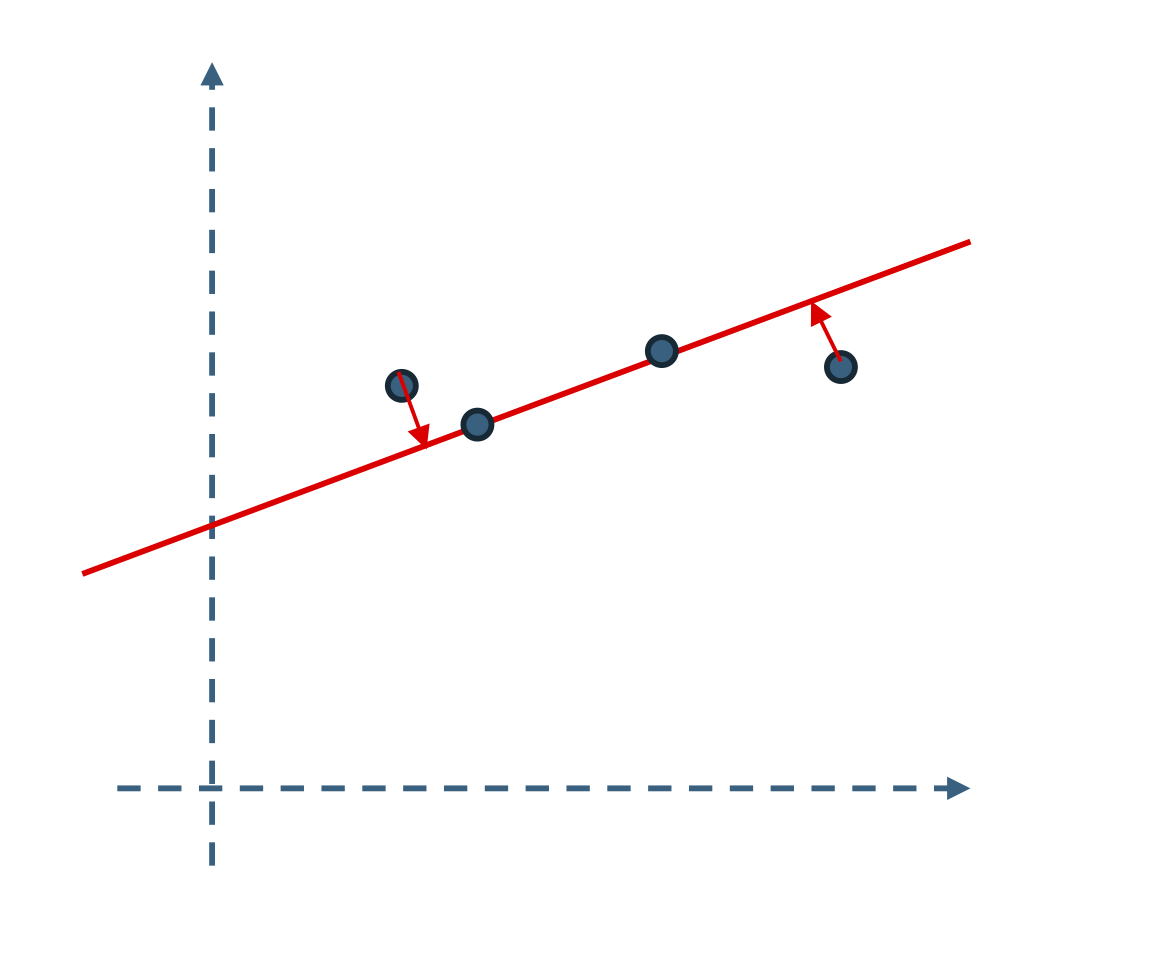

denoising: if the entries of a sample are perturbed by small noise, the noise can be effectively removed by projecting back onto the surface;

-

•

error correction: if a small number of unknown entries of are arbitrarily corrupted, they can be efficiently corrected.

Figure 1.9 illustrates these properties using a low-dimensional linear structure—a one-dimensional line in a two-dimensional plane.

Under mild conditions, these properties generalize to many other low-dimensional structures in high-dimensional spaces [WM22]. As we will see, these useful properties—completion and denoising, for example—inspire effective methods for learning such structures.
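For the simplest structure mentioned above, a one-dimensional line in a two-dimensional plane, completion and denoising can be sketched in a few lines. The particular line, the helper names `complete` and `denoise`, and the noise values are illustrative assumptions. Error correction needs more ambient dimensions than this toy setting offers and is not shown.

```python
import math

# Hypothetical one-dimensional structure: the line spanned by the unit
# vector u in the plane, i.e. all points with x2 = 2 * x1.
u = (1 / math.sqrt(5), 2 / math.sqrt(5))

def complete(x1):
    """Completion: recover the missing second entry from the first."""
    return (x1, x1 * u[1] / u[0])

def denoise(y):
    """Denoising: orthogonally project a noisy point back onto the line."""
    coeff = y[0] * u[0] + y[1] * u[1]
    return (coeff * u[0], coeff * u[1])

def dist(p, q):
    return math.hypot(p[0] - q[0], p[1] - q[1])

clean = complete(3.0)                          # a point on the line
noisy = (clean[0] + 0.3, clean[1] - 0.1)       # perturbed observation
denoised = denoise(noisy)
print(clean, denoised, dist(noisy, clean), dist(denoised, clean))
```

The projection removes only the noise component orthogonal to the line, so the denoised point is closer to the clean one than the noisy observation is, but it does not coincide with it in general.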

For simplicity, we have so far used the deterministic case to introduce the notions of predictability and low-dimensionality, where data lie precisely on geometric structures such as subspaces or surfaces. In practice, however, data always contain some uncertainty or randomness. In this case, we may assume the data follow a probability distribution with density $p(\mathbf{x})$. A distribution is considered “low-dimensional” if its density concentrates around a low-dimensional geometric structure (a subspace, a surface, or a mixture thereof), as shown in Figure 1.7. Once learned, such a density $p(\mathbf{x})$ serves as a powerful prior for estimating $\mathbf{x}$ from partial, noisy, or corrupted observations:

$$\mathbf{y} = f(\mathbf{x}) + \mathbf{n} \tag{1.2.24}$$

by computing the conditional estimate $\hat{\mathbf{x}}(\mathbf{y}) = \mathbb{E}[\mathbf{x} \mid \mathbf{y}]$ or by sampling the conditional distribution $\hat{\mathbf{x}}(\mathbf{y}) \sim p(\mathbf{x} \mid \mathbf{y})$. (Modern generative AI technologies, such as conditioned image generation, rely heavily on this principle, as we will discuss in Chapter 6.)

These discussions lead to the single assumption on which this book bases its deductive approach to understanding intelligence and deep networks:

The main assumption: Any intelligent system or learning method should (and can) rely on the predictability of the world; hence, the distribution of observed high-dimensional data samples has low-dimensional support.

The remaining question is how to learn these low-dimensional structures correctly and computationally efficiently from high-dimensional data. As we will see, parametric models studied in classical analytical approaches and non-parametric models such as deep networks, popular in modern practice, are simply different means to the same end.

1.3 How to Learn?

1.3.1 Analytical Approaches

Note that even if a predictive function is tractable to compute, it does not follow that the function is tractable or scalable to learn from a finite number of sampled segments. One classical approach to ensure tractability is to make explicit assumptions about the family of low-dimensional structures we are dealing with. Historically, when computation and data were scarce, simple and idealized analytical models were the first to be studied, since models that admit efficient closed-form or numerical solutions were the only ones that could be implemented. In addition, they provide insights into more general problems and already yield useful solutions to important, though limited, cases. Linear structures became the first class of models to be thoroughly studied.

For example, arguably the simplest case is to assume the data are distributed around a single low-dimensional subspace in a high-dimensional space. Somewhat equivalently, one may assume the data are distributed according to an almost degenerate low-dimensional Gaussian. Identifying such a subspace or Gaussian from a finite number of (noisy) samples is the classical problem of principal component analysis (PCA), and effective algorithms have been developed for this class of models [Jol02]. One can make the family of models increasingly more complex and expressive. For instance, one may assume the data are distributed around a mixture of low-dimensional components (subspaces or low-dimensional Gaussians), as in independent component analysis (ICA) [BJC85], dictionary learning (DL), generalized principal component analysis (GPCA) [VMS05], or the more general class of sparse low-dimensional models that have been studied extensively in recent years in fields such as compressive sensing [WM22].

Across all these analytical model families, the central problem is to identify the most compact model within each family that best fits the given data. Below, we give a brief account of these classical analytical models but leave a more systematic study to Chapter 2. In theory, these analytical models have provided tremendous insights into the geometric and statistical properties of low-dimensional structures. They often yield closed-form solutions or efficient and scalable algorithms, which are very useful for data whose distributions can be well approximated by such models. More importantly, for more general problems, they provide a sense of how easy or difficult the problem of identifying low-dimensional structures can be and what the basic ideas are to approach such a problem.

Linear Dynamical Systems

Wiener filter.

As discussed in Section 1.2.1, a central task of intelligence is to learn what is predictable in sequences of observations. The simplest class of predictable sequences—or signals—are those generated by a linear time-invariant (LTI) process:

$$x[n] = h[n] * z[n] + \epsilon[n], \tag{1.3.1}$$

where $*$ is the convolution operation, $z$ is the input, and $h$ is the impulse response function. (Footnote 11: Typically, $h$ is assumed to have certain desirable properties, such as finite length or a band-limited spectrum.) Here $\epsilon[n]$ denotes additive observation noise. Given the input process $\{z[n]\}$ and observations of the output process $\{x[n]\}$, the goal is to find the optimal $h[n]$ such that $h[n] * z[n]$ predicts $x[n]$ optimally. Prediction quality (i.e., goodness) is measured by the mean squared error (MSE):

$$\min_{h} \; \mathbb{E}\big[\epsilon[n]^2\big] = \mathbb{E}\big[\|x[n] - h[n] * z[n]\|_2^2\big]. \tag{1.3.2}$$

The optimal solution is called a (denoising) filter. Norbert Wiener—who also initiated the Cybernetics movement—studied this problem in the 1940s and derived an elegant closed-form solution known as the Wiener filter [Wie42, Wie49]. This result, also known as least-variance estimation and filtering, became one of the cornerstones of signal processing. Interested readers may refer to [MKS+04, Appendix B] for a detailed derivation of this type of estimator.
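To make the MSE criterion concrete, the following minimal sketch estimates a short impulse response from samples by ordinary least squares. This is a finite-sample stand-in for the expectation in the MSE objective, not Wiener's closed-form derivation. The function name `estimate_fir`, the three-tap response, and the noise level are illustrative assumptions.

```python
import numpy as np

def estimate_fir(z, x, taps):
    """Least-squares estimate of an FIR impulse response with `taps` coefficients.

    Assumes zero initial conditions, i.e. z[n] = 0 for n < 0.
    """
    n = len(z)
    # Column k holds the input delayed by k samples.
    Z = np.column_stack(
        [np.concatenate([np.zeros(k), z[:n - k]]) for k in range(taps)]
    )
    h_hat, *_ = np.linalg.lstsq(Z, x, rcond=None)
    return h_hat

rng = np.random.default_rng(0)
h_true = np.array([0.5, -0.3, 0.2])          # hypothetical impulse response
z = rng.standard_normal(5000)                # input process
noise = 0.1 * rng.standard_normal(len(z))    # additive observation noise
x = np.convolve(z, h_true)[:len(z)] + noise  # observed output
h_hat = estimate_fir(z, x, taps=3)
```

With enough samples, `h_hat` should be close to `h_true`, because the least-squares solution minimizes the empirical mean squared prediction error.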

Kalman filter.

The idea of denoising or filtering a dynamical system was later extended by Rudolf Kalman in the 1960s to a linear time-invariant system described by a finite-dimensional state-space model:

$$z[n] = A z[n-1] + B u[n] + \epsilon[n]. \tag{1.3.3}$$

The problem is to estimate the system state $z[n]$ from noisy observations of the form

$$x[n] = C z[n] + w[n], \tag{1.3.4}$$

where $w$ is (white) noise. The optimal causal (Footnote 12: This means the estimator can use only observations up to the current time step $n$. The Kalman filter is always causal, whereas the Wiener filter need not be.) state estimator $\hat{z}[n]$, which attains the minimum mean squared error (MMSE) in prediction,

$$\min_{\hat{z}[n]} \; \mathbb{E}\big[\|x[n] - C \hat{z}[n]\|_2^2\big], \tag{1.3.5}$$

is given in closed form by the so-called Kalman filter [Kal60]. This result is a cornerstone of modern control theory because it enables estimation of a dynamical system’s state from noisy observations. One can then introduce linear state feedback, for example $u[n] = F \hat{z}[n]$, and render the closed-loop system fully autonomous, as shown in Equation 1.2.13. Interested readers may refer to [MKS+04, Appendix B] for a detailed derivation of the Kalman filter.
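As an illustration, here is a minimal sketch of the standard predict/update recursion of a Kalman filter for a system without a control input. The position-velocity model, the noise covariances `Q` and `R`, and all variable names are assumptions chosen for this example only.

```python
import numpy as np

def kalman_filter(observations, A, C, Q, R, z0, P0):
    """Filtered state estimates for z[n] = A z[n-1] + eps[n], x[n] = C z[n] + w[n]."""
    z_hat, P = z0, P0
    estimates = []
    for x in observations:
        # Predict the next state and its error covariance.
        z_pred = A @ z_hat
        P_pred = A @ P @ A.T + Q
        # Update with the new observation through the Kalman gain.
        S = C @ P_pred @ C.T + R
        K = P_pred @ C.T @ np.linalg.inv(S)
        z_hat = z_pred + K @ (x - C @ z_pred)
        P = (np.eye(len(z0)) - K @ C) @ P_pred
        estimates.append(z_hat)
    return np.array(estimates)

rng = np.random.default_rng(0)
A = np.array([[1.0, 1.0], [0.0, 1.0]])   # hypothetical position-velocity dynamics
C = np.array([[1.0, 0.0]])               # only the position is observed
Q = 1e-4 * np.eye(2)                     # process-noise covariance (assumed)
R = np.array([[1.0]])                    # observation-noise covariance (assumed)

z = np.array([0.0, 0.1])
states, obs = [], []
for _ in range(200):
    z = A @ z + rng.multivariate_normal(np.zeros(2), Q)
    states.append(z)
    obs.append(C @ z + rng.normal(0.0, 1.0, size=1))
states, obs = np.array(states), np.array(obs)

est = kalman_filter(obs, A, C, Q, R, z0=np.zeros(2), P0=np.eye(2))
raw_mse = np.mean((obs[:, 0] - states[:, 0]) ** 2)
kf_mse = np.mean((est[:, 0] - states[:, 0]) ** 2)
```

Comparing `kf_mse` with `raw_mse` shows how much the filter reduces the position error relative to using the raw noisy observations.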

Identification of linear dynamical systems.

To derive the Kalman filter, the system parameters $(A, B, C)$ are assumed to be known. If they are not given in advance, the problem becomes more challenging and is known as system identification: how to learn the parameters $(A, B, C)$ from many samples of the input sequence $\{u[n]\}$ and observation sequence $\{x[n]\}$. This is a classic problem in systems theory. If the system is linear, the input and output sequences jointly lie on a certain low-dimensional subspace (Footnote 13: which has the same dimension as the order of the state-space model (1.3.3).). Hence, the identification problem is essentially equivalent to identifying this low-dimensional subspace [VM96, LV09, LV10].
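The subspace view can be checked numerically in a simplified setting. The sketch below assumes a noise-free system with no input, which is narrower than the joint input-output statement above. It stacks sliding windows of the output into a Hankel matrix and counts the significant singular values. The order-2 system and all names are hypothetical.

```python
import numpy as np

def hankel_matrix(seq, rows):
    """Stack sliding windows of `seq` as the rows of a Hankel matrix."""
    cols = len(seq) - rows + 1
    return np.array([seq[i:i + cols] for i in range(rows)])

# Hypothetical order-2 system: a damped rotation observed through its first coordinate.
theta, rho = 0.3, 0.95
A = rho * np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta),  np.cos(theta)]])
C = np.array([1.0, 0.0])

z = np.array([1.0, 0.5])
outputs = []
for _ in range(60):
    outputs.append(C @ z)
    z = A @ z

H = hankel_matrix(np.array(outputs), rows=10)
singular_values = np.linalg.svd(H, compute_uv=False)
numerical_rank = int(np.sum(singular_values > 1e-8 * singular_values[0]))
```

Although `H` has 10 rows, its numerical rank equals the order of the system, which is the low-dimensional structure that subspace identification methods exploit.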

Note that the above problems have two things in common: first, the noise-free sequences or signals are assumed to be generated by an explicit family of parametric models; second, these models are essentially linear. Conceptually, let $x_o$ be a random variable whose “true” distribution is supported on a low-dimensional linear subspace, say $S$. To a large extent, the Wiener filter and Kalman filter both try to estimate such an $x_o$ from its noisy observations:

$$x = x_o + \epsilon, \tag{1.3.6}$$

where $\epsilon$ is typically Gaussian random noise (or a noise process). Hence, their solutions rely on identifying a low-dimensional linear subspace that best fits the observed noisy data. By projecting the data onto this subspace, one obtains the optimal denoising operations, all in closed form.

Linear and Mixed Linear Models

Principal component analysis.

From the above problems in classical signal processing and system identification, we see that the main task behind all these problems is to learn a single low-dimensional linear subspace from noisy observations. Mathematically, we may model such a structure as

| (1.3.7) |

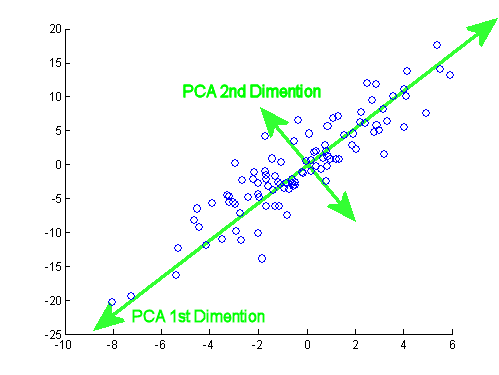

where is small random noise. Figure 1.10 illustrates such a distribution with two principal components.

The problem is to find the subspace basis from many samples of . A typical approach is to minimize the projection error onto the subspace:

| (1.3.8) |

This is essentially a denoising task: once the basis is correctly found, we can denoise the noisy sample by projecting it onto the low-dimensional subspace spanned by :

| (1.3.9) |

If the noise is small and the low-dimensional subspace is correctly learned, we expect . Thus, PCA is a special case of a so-called “auto-encoding” scheme, which encodes the data by projecting it into a lower-dimensional space, and decodes a lower-dimensional code by it into the original space:

| (1.3.10) |

Because of the simple data structure, the encoder and decoder both become simple linear operators (projecting and lifting).
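The linear encoder and decoder can be sketched in a few lines. This example assumes zero-mean data and uses the singular value decomposition as one standard way to obtain an orthonormal basis. The dimensions, noise level, and names such as `pca_basis` are illustrative.

```python
import numpy as np

def pca_basis(X, d):
    """Top-d left singular vectors of the D x N sample matrix X (columns are samples)."""
    U, _, _ = np.linalg.svd(X, full_matrices=False)
    return U[:, :d]

rng = np.random.default_rng(0)
D, d, N = 20, 2, 2000
U_true, _ = np.linalg.qr(rng.standard_normal((D, d)))       # ground-truth orthonormal basis
Z = rng.standard_normal((d, N)) * np.array([[3.0], [2.0]])  # low-dimensional codes
X = U_true @ Z + 0.05 * rng.standard_normal((D, N))         # noisy samples near the subspace

U_hat = pca_basis(X, d)
codes = U_hat.T @ X      # encode: project onto the learned subspace
X_hat = U_hat @ codes    # decode: lift the codes back to the original space

rel_err = np.linalg.norm(X - X_hat) / np.linalg.norm(X)
subspace_gap = np.linalg.norm(U_hat @ U_hat.T - U_true @ U_true.T)
```

Here `rel_err` measures how well the auto-encoding reproduces the samples, and `subspace_gap` compares the learned and true projection matrices, which is insensitive to the choice of basis within the subspace.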

This classic problem in statistics is known as principal component analysis (PCA). It was first studied by Pearson in 1901 [Pea01] and later independently by Hotelling in 1933 [Hot33]. The topic is systematically summarized in the classic book [Jol86, Jol02]. One may also explicitly assume the data is distributed according to a single low-dimensional Gaussian:

| (1.3.11) |

which is equivalent to assuming that $z$ in the PCA model (1.3.7) follows a standard normal distribution. This problem is known as probabilistic PCA [TB99] and has the same computational solution as PCA.

In this book, we revisit PCA in Chapter 2 from the perspective of learning a low-dimensional distribution. Our goal is to use this simple and idealistic model to convey some of the most fundamental ideas for learning a compact representation of a low-dimensional distribution, including the important notions of compression via denoising and auto-encoding for a consistent representation.

Independent component analysis.

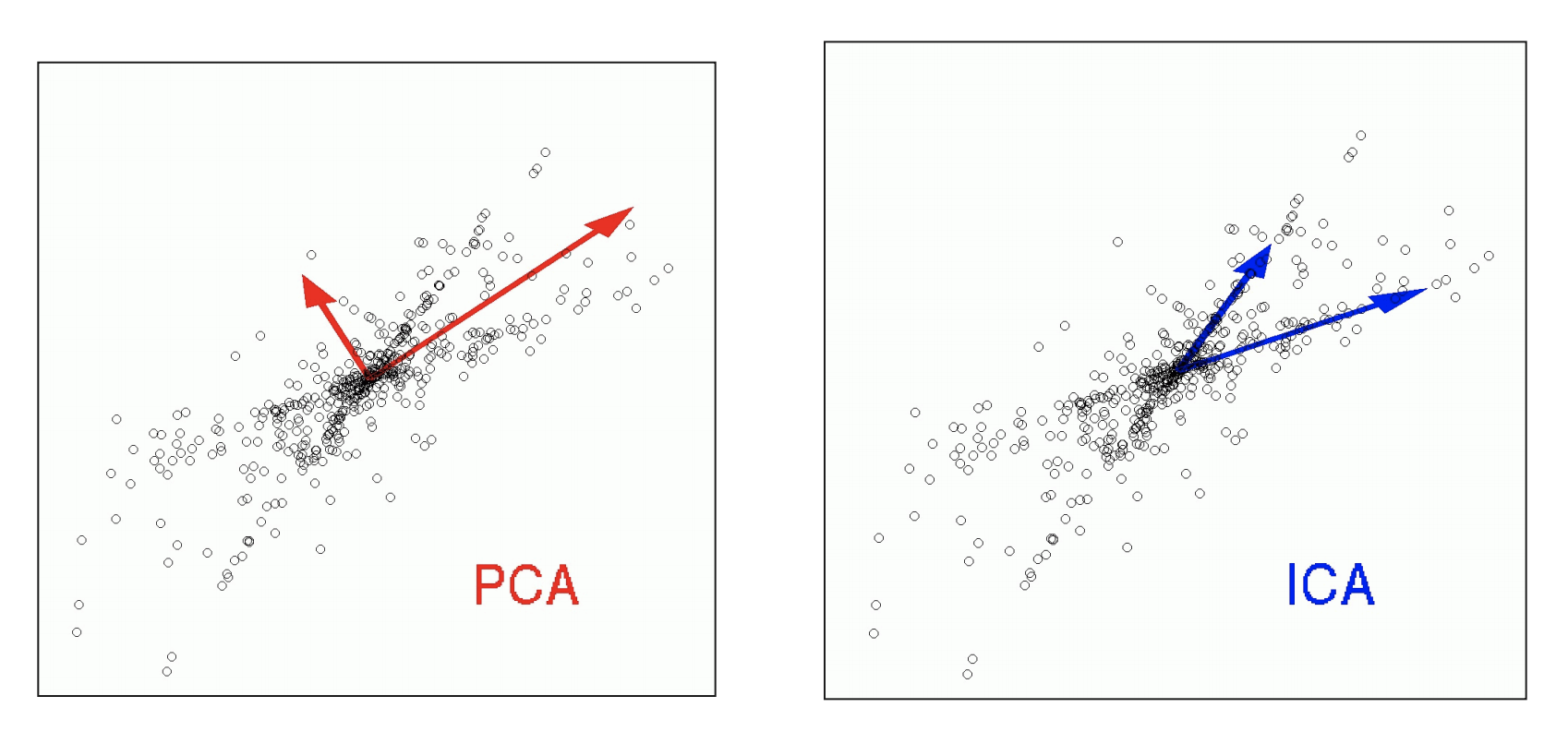

Independent component analysis (ICA) was originally proposed by [BJC85] as a simple mathematical model for our memory, and it has since become a classic model for learning a good representation. The ICA model takes a form deceptively similar to the PCA model (1.3.7) above by assuming that the observed random variable $x$ is a linear superposition of multiple independent components $z_i$ (Footnote 14: In PCA the “components” are the learned vectors, whereas in ICA they are scalars. This is just a difference in names and convention.):

$$x = u_1 z_1 + u_2 z_2 + \cdots + u_d z_d + \epsilon = U z + \epsilon. \tag{1.3.12}$$

However, here the components $z_i$ are assumed to be independent non-Gaussian variables. For example, a popular choice is

$$z_i = b_i \cdot g_i, \qquad b_i \sim \mathrm{Bernoulli}(\theta), \tag{1.3.13}$$

where $b_i$ is a Bernoulli random variable and $g_i$ could be a constant value or another random variable, say Gaussian. (Footnote 15: Even if $g_i$ is Gaussian, $z_i$ is no longer a Gaussian variable!) The ICA problem aims to identify both $U$ and $z$ from observed samples of $x$. Figure 1.11 illustrates the difference between ICA and PCA.
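The non-Gaussianity of a Bernoulli-gated Gaussian can be checked empirically. The sketch below compares the excess kurtosis, which is zero for any Gaussian, of a plain Gaussian sample and of a Bernoulli-Gaussian product. The activation probability `theta = 0.1` and the helper `excess_kurtosis` are assumptions for illustration.

```python
import numpy as np

def excess_kurtosis(samples):
    """Fourth standardized moment minus 3; it is zero for a Gaussian."""
    centered = samples - samples.mean()
    return np.mean(centered ** 4) / np.mean(centered ** 2) ** 2 - 3.0

rng = np.random.default_rng(0)
n, theta = 200_000, 0.1
gaussian = rng.standard_normal(n)
# Each sample is a Gaussian draw that is switched on with probability theta.
bernoulli_gaussian = rng.binomial(1, theta, size=n) * rng.standard_normal(n)

k_gauss = excess_kurtosis(gaussian)
k_bg = excess_kurtosis(bernoulli_gaussian)
```

The Bernoulli-Gaussian sample has a large positive excess kurtosis (its population value is $3/\theta - 3$), which is the kind of statistic that many ICA algorithms use to separate components.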

Although the (decoding) mapping from $z$ to $x$ seems linear and straightforward once $U$ and $z$ are learned, the (encoding) mapping from $x$ to $z$ can be complicated and may not be represented by a simple linear mapping. Hence, ICA generally gives an auto-encoding of the form:

| (1.3.14) |

Thus, unlike PCA, ICA is somewhat more difficult to analyze and solve. In the 1990s, researchers such as Erkki Oja and Aapo Hyvärinen [HO97, HO00a] made significant theoretical and algorithmic contributions to ICA. In Chapter 2, we will study and provide a solution to ICA from which the encoding mapping will become clear.

Sparse structures and compressive sensing.

If the Bernoulli success probability in (1.3.13) is very small, the probability that any given component is non-zero is small. In this case we say $x$ is sparsely generated: it concentrates on a union of linear subspaces whose dimension is roughly the expected number of non-zero components. We may therefore extend the ICA model to a more general family of low-dimensional structures known as sparse models.

A $k$-sparse model is the set of all $k$-sparse vectors:

$$\mathcal{Z} = \{ z \in \mathbb{R}^{n} : \|z\|_0 \le k \}, \tag{1.3.15}$$

where $\|\cdot\|_0$ counts the number of non-zero entries. Thus $\mathcal{Z}$ is the union of all $k$-dimensional subspaces aligned with the coordinate axes, as illustrated in Figure 1.7 (left). A central problem in signal processing and statistics is to recover a sparse vector $z$ from its linear observations

$$x = A z + \epsilon, \tag{1.3.16}$$

where $A \in \mathbb{R}^{m \times n}$ is given, typically $m < n$, and $\epsilon$ is small noise. This seemingly benign problem is NP-hard to solve and even hard to approximate (see [WM22] for details).

Despite a rich history dating back to the eighteenth century [Bos50], no provably efficient algorithm existed for this class of problems, although many heuristic algorithms were proposed between the 1960s and 1990s. Some were effective in practice but lacked rigorous justification. A major breakthrough came in the early 2000s when mathematicians including David Donoho, Emmanuel Candès, and Terence Tao [Don05, CT05a, CT05] established a rigorous theoretical framework that characterizes precise conditions under which the sparse recovery problem can be solved effectively and efficiently via convex $\ell^1$ minimization:

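$$\min_{z} \; \|z\|_1 \quad \text{subject to} \quad \|x - A z\|_2 \le \varepsilon, \tag{1.3.17}$$

where $\|\cdot\|_1$ is the sparsity-promoting $\ell^1$ norm of a vector and $\varepsilon$ is a small constant. Any solution yields a sparse (auto) encoding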

| (1.3.18) |

We will describe such an algorithm (and thus mapping) in Chapter 2, revealing fundamental connections between sparse coding and deep learning. (Footnote 16: Similarities between sparse-coding algorithms and deep networks were noted as early as 2010 [GL10].)

Conditions for $\ell^1$ minimization to succeed are surprisingly general: the minimum number of measurements required for a successful recovery is only proportional to the intrinsic dimension $k$. This is the compressive sensing phenomenon [Can06]. It extends to broad families of low-dimensional structures, including low-rank matrices. These results fundamentally changed our understanding of recovering low-dimensional structures in high-dimensional spaces. David Donoho, among others, celebrated this reversal as the “blessing of dimensionality” [D+00], in contrast to the usual pessimistic belief in the “curse of dimensionality.” The complete theory and body of results is presented in [WM22].

The computational significance of this framework cannot be overstated. It transformed problems previously believed intractable into ones that are not only tractable but scalably solvable using extremely efficient algorithms:

| (1.3.19) |

The algorithms come with rigorous theoretical guarantees of correctness given precise requirements in data and computation. The deductive nature of this approach contrasts sharply with the empirical, inductive practice of deep neural networks. Yet we now know that both approaches share a common goal—pursuing low-dimensional structures in high-dimensional spaces.
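One well-known scalable method of this kind is the iterative soft-thresholding algorithm (ISTA). The sketch below applies it to the Lagrangian (lasso) form of $\ell^1$ minimization rather than the constrained form, and it is offered as an illustration, not as the specific algorithm referred to above. The problem sizes, the regularization weight `lam`, and all names are assumptions.

```python
import numpy as np

def soft_threshold(v, tau):
    """Entrywise shrinkage toward zero by tau."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def ista(A, x, lam, num_iters=2000):
    """Iterative soft-thresholding for min_z 0.5 * ||x - A z||_2^2 + lam * ||z||_1."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # inverse Lipschitz constant of the gradient
    z = np.zeros(A.shape[1])
    for _ in range(num_iters):
        # Gradient step on the quadratic term, then shrinkage for the l1 term.
        z = soft_threshold(z - step * A.T @ (A @ z - x), step * lam)
    return z

rng = np.random.default_rng(0)
m, n, k = 60, 200, 5                          # measurements, ambient dimension, sparsity
A = rng.standard_normal((m, n)) / np.sqrt(m)  # random measurement matrix
z_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
z_true[support] = rng.choice([-1.0, 1.0], size=k) * (1.0 + rng.random(k))
x = A @ z_true + 0.01 * rng.standard_normal(m)

z_hat = ista(A, x, lam=0.02)
recovered_support = {int(i) for i in np.argsort(-np.abs(z_hat))[:k]}
rel_err = np.linalg.norm(z_hat - z_true) / np.linalg.norm(z_true)
```

Each iteration costs only two matrix-vector products, which is why such methods scale to large problems. Even though there are far fewer measurements than unknowns, the largest entries of `z_hat` should sit on the true support.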

Dictionary learning.

Conceptually, an even harder problem than the sparse-coding problem (1.3.16) arises when the observation matrix is unknown and must itself be learned from a set of (possibly noisy) observations :

| (1.3.20) |

Here we are given only , not the corresponding or the noise term , except that the are known to be sparse. This is the dictionary learning problem, which generalizes the ICA problem (1.3.12) discussed earlier. In other words, given that the distribution of the data is the image of a standard sparse distribution under a linear transform , we wish to learn both and its “inverse” mapping so as to obtain an autoencoder:

| (1.3.21) |

PCA, ICA, and dictionary learning all assume that the data distribution is supported on or near low-dimensional linear or piecewise-linear structures. Each method requires learning a (global or local) basis for these structures from noisy samples. In Chapter 2 we study how to identify such low-dimensional structures through these classical models. In particular, we will see that all of these low-dimensional (piecewise) linear models can be learned efficiently by the same family of fast algorithms known as power iteration [ZMZ+20]. Although these linear or piecewise-linear models are somewhat idealized for most real-world data, understanding them is an essential first step toward understanding more general low-dimensional distributions.

General Distributions

The distributions of real-world data such as images, videos, and audio are too complex to be modeled by the above, somewhat idealistic, linear models or Gaussian processes. We normally do not know a priori from which family of parametric models they are generated. Historically, many attempts have been made to develop analytical models for these data. In particular, Fields Medalist David Mumford spent considerable effort in the 1990s trying to understand and model the statistics of natural images [Mum96]. He and his students, including Song-Chun Zhu, drew inspiration and techniques from statistical physics and proposed many statistical and stochastic models for the distribution of natural images, such as random fields or stochastic processes [ZWM97, ZM97a, ZM97, HM99, MG99, LPM03]. However, these analytical models achieved only limited success in producing samples that closely resemble natural images. Clearly, for real-world data like images, we need to develop more powerful and unifying methods to pursue their more general low-dimensional structures.

In practice, for a general distribution of real-world data, we typically have only many samples from the distribution, the so-called empirical distribution. In such cases, we normally cannot expect to have a clear analytical form for their low-dimensional structures, nor for the resulting denoising operators. (Footnote 17: This is unlike the cases of PCA, the Wiener filter, and the Kalman filter.) We therefore need to develop a more general solution for these empirical distributions, not necessarily in closed form but at least efficiently computable. If we do this correctly, solutions to the aforementioned linear models should become special cases.

Denoising.

In the 1950s, statisticians became interested in the problem of denoising data drawn from an arbitrary distribution. Let $x_o$ be a random variable with probability density function $p_o(\cdot)$. Suppose we observe a noisy version of $x_o$:

$$x = x_o + \sigma g, \tag{1.3.22}$$

where $g \sim \mathcal{N}(0, I)$ is standard Gaussian noise and $\sigma$ is the noise level of the observation. Let $p(\cdot)$ be the probability density function of $x$. (Footnote 18: That is, $p(x) = \int \varphi_{\sigma}(x - w)\, p_o(w)\, \mathrm{d}w$, where $\varphi_{\sigma}$ is the density function of the Gaussian distribution $\mathcal{N}(0, \sigma^2 I)$.) Remarkably, the posterior expectation of $x_o$ given $x$ can be calculated by an elegant formula, known as Tweedie’s formula [Rob56]: (Footnote 19: Herbert Robbins credited this formula to Maurice Kenneth Tweedie, based on their personal correspondence.)

$$\hat{x}_o = \mathbb{E}[x_o \mid x] = x + \sigma^2 \nabla \log p(x). \tag{1.3.23}$$

As can be seen from the formula, the function $\nabla \log p(\cdot)$ plays a very special role in denoising the observation $x$. The noise $g$ can be explicitly estimated as

$$\hat{g} = \frac{x - \hat{x}_o}{\sigma} = -\sigma \nabla \log p(x), \tag{1.3.24}$$

for which we only need to know the distribution $p$ of $x$, not the ground truth $p_o$ for $x_o$. An important implication of this result is that if we add Gaussian noise to any distribution, the denoising process can be done easily if we can somehow obtain the function $\nabla \log p(\cdot)$.
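Tweedie’s formula can be verified in the one case where everything is available in closed form: a one-dimensional Gaussian prior. In this minimal sketch the prior standard deviation `s`, the noise level `sigma`, and the function names are illustrative assumptions. The score of the noisy density is then linear, and the formula reduces to the familiar shrinkage estimator.

```python
import numpy as np

s, sigma = 2.0, 1.0   # prior standard deviation and noise level (illustrative values)

def score_noisy(x):
    """Score of N(0, s^2 + sigma^2), the density of the noisy observation."""
    return -x / (s ** 2 + sigma ** 2)

def tweedie_denoise(x):
    """Posterior mean of the clean variable via Tweedie's formula."""
    return x + sigma ** 2 * score_noisy(x)

rng = np.random.default_rng(0)
x_clean = s * rng.standard_normal(100_000)
x_noisy = x_clean + sigma * rng.standard_normal(100_000)
x_denoised = tweedie_denoise(x_noisy)

mse_noisy = np.mean((x_noisy - x_clean) ** 2)
mse_denoised = np.mean((x_denoised - x_clean) ** 2)
shrink = x_denoised[0] / x_noisy[0]
```

In this Gaussian case the denoiser multiplies each observation by $s^2 / (s^2 + \sigma^2)$, and the mean squared error drops from about $\sigma^2$ to about $s^2 \sigma^2 / (s^2 + \sigma^2)$.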

Because this is such an important and useful result, it has been rediscovered and used in many different contexts and areas. For example, after Tweedie’s formula [Rob56], it was rediscovered a few years later by [Miy61], where it was termed “empirical Bayesian denoising.” In the early 2000s, the function $\nabla \log p$ was rediscovered again in the context of learning a general distribution and was named the “score function” by Aapo Hyvärinen [Hyv05]. Its connection to (empirical Bayesian) denoising was soon recognized by [Vin11]. Generalizations to other measurement distributions (beyond Gaussian noise) have been made by Eero Simoncelli’s group [RS11]. Today, the most direct application of Tweedie’s formula and denoising is image generation via iterative denoising [KS21, HJA20].

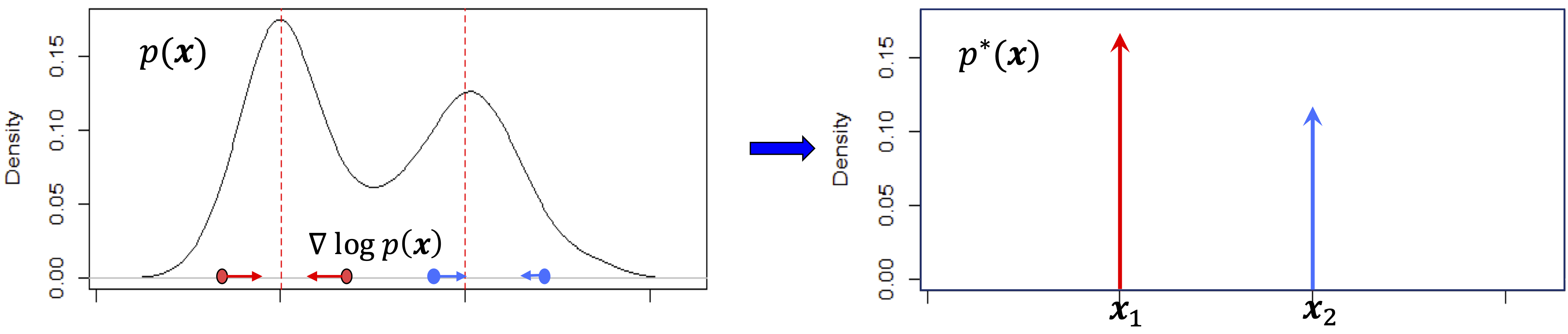

Entropy minimization.

The score $\nabla \log p$ has an intuitive information-theoretic and geometric interpretation. In information theory, $-\log p(x)$ corresponds to the number of bits needed to encode $x$ (Footnote 20: at least for discrete variables, as detailed in Chapter 3.). The gradient $\nabla \log p(x)$ points toward higher-probability-density regions, as shown in Figure 1.12 (left). Moving in this direction reduces the number of bits required to encode $x$. Thus, the operator $\nabla \log p(\cdot)$ pushes the distribution to “shrink” toward high-density regions. Formally, one can show that the (differential) entropy

$$h(x) = -\int p(w) \log p(w)\, \mathrm{d}w \tag{1.3.25}$$

decreases under this operation (see Chapter 3 and Appendix B). With an optimal codebook, the resulting distribution achieves a lower coding rate and is thus more compressed. Repeating this denoising process indefinitely produces a distribution whose probability mass is concentrated on a support of lower dimension. For instance, the distribution in Figure 1.12 (left) converges to (right): (Footnote 21: Strictly speaking, is a generalized function: with .) (Footnote 22: Notice that in this section we discuss the process of iteratively denoising and compressing a high-entropy noise distribution until it converges to the low-entropy data distribution. As we will see in Chapter 3, this problem is dual to the problem of learning an optimal encoding for the data distribution, which is also a useful application [RAG+24].)

| (1.3.26) |

As the distribution converges to , its differential entropy approaches negative infinity due to a technical difference between continuous and discrete entropy definitions. Chapter 3 resolves this using a unified rate-distortion measure.
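A toy calculation illustrates both the entropy decrease and its divergence. The sketch assumes a one-dimensional Gaussian, for which a step along the score simply rescales the variable and the differential entropy has a closed form. The step size and the number of iterations are arbitrary choices.

```python
import numpy as np

def gaussian_entropy(var):
    """Differential entropy (in nats) of a one-dimensional Gaussian with variance `var`."""
    return 0.5 * np.log(2 * np.pi * np.e * var)

var, step = 1.0, 0.2
entropies = [gaussian_entropy(var)]
for _ in range(20):
    # For N(0, var) the score is -x / var, so x + step * var * score(x) = (1 - step) * x,
    # which multiplies the variance by (1 - step)^2.
    var *= (1.0 - step) ** 2
    entropies.append(gaussian_entropy(var))

entropy_changes = np.diff(entropies)
```

Every step lowers the entropy by the same amount, $-\log(1 - \text{step})$, so the entropy decreases without bound as the distribution collapses toward a point.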

Later in this chapter and Chapter 3, we explore how this simple denoising-compression framework unifies powerful methods for learning low-dimensional distributions in high-dimensional spaces, including natural image distributions.

1.3.2 Empirical Approaches

In practice, it is difficult to model important real-world data—such as images, sounds, and text—with the idealized linear, piecewise-linear, or other analytical models discussed in the previous section. Historically, many empirical models and methods have therefore been proposed. These models often drew inspiration from the biological nervous system, because the brain of an animal or human processes such data with remarkable efficiency and effectiveness.

Classic Artificial Neural Networks

Artificial neuron.

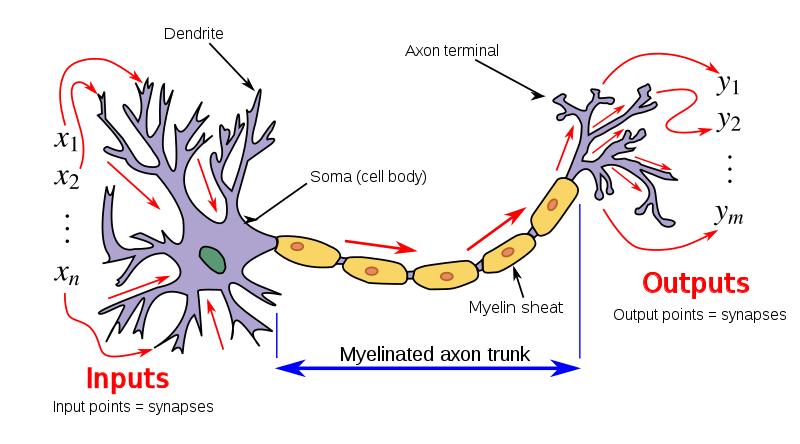

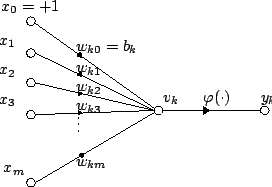

Inspired by the nervous system in the brain, the first mathematical model of an artificial neuron (Footnote 23: known as the Linear Threshold Unit, or perceptron.) was proposed by Warren McCulloch (Footnote 24: a professor of psychiatry at the University of Illinois at Chicago at the time) and Walter Pitts in 1943 [MP43]. It describes the relationship between the inputs $x_i$ and the output $o$ as:

$$o = \sigma\Big(\sum_{i} w_i x_i + b\Big), \tag{1.3.27}$$

where $\sigma$ is some nonlinear activation, typically modeled by a threshold function. This model is illustrated in Figure 1.13. As we can see, this form already shares the main characteristics of a basic unit in modern deep neural networks. The model is derived from observations of how a single neuron works in our nervous system. However, researchers did not know exactly what functions a collection of such neurons could realize and perform. On a more technical level, they were also unsure which nonlinear activation function should be used. Hence, historically many variants have been proposed. (Footnote 25: Step function, hard or soft thresholding, rectified linear unit (ReLU), sigmoid, etc. [DSC22].)
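As a small illustration, a single linear threshold unit with hand-picked weights can realize a logical AND of two binary inputs. The weights, the bias, and the function name `threshold_neuron` are assumptions made for this sketch.

```python
import numpy as np

def threshold_neuron(x, w, b):
    """Linear threshold unit: outputs 1 when the weighted sum plus bias is positive."""
    return int(np.dot(w, x) + b > 0)

# Hand-picked parameters: the unit fires only when both inputs are 1.
w_and, b_and = np.array([1.0, 1.0]), -1.5
inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_outputs = [threshold_neuron(np.array(x), w_and, b_and) for x in inputs]
```

Changing the bias to `-0.5` turns the same unit into a logical OR, which shows how the weights and threshold determine the function that a neuron computes.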

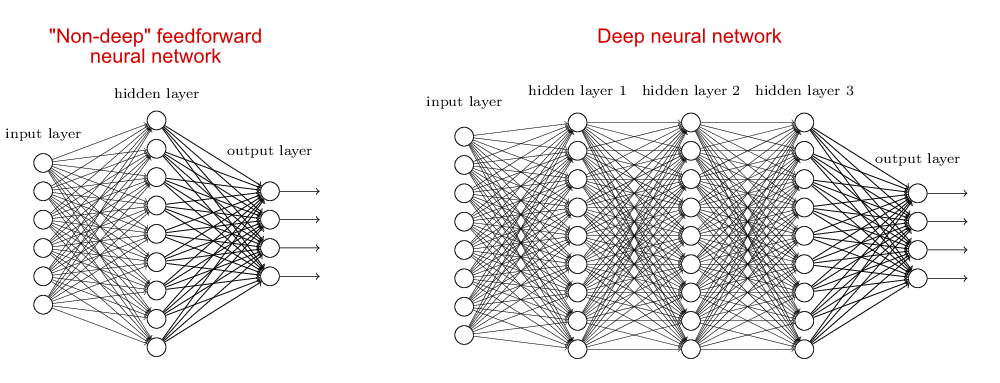

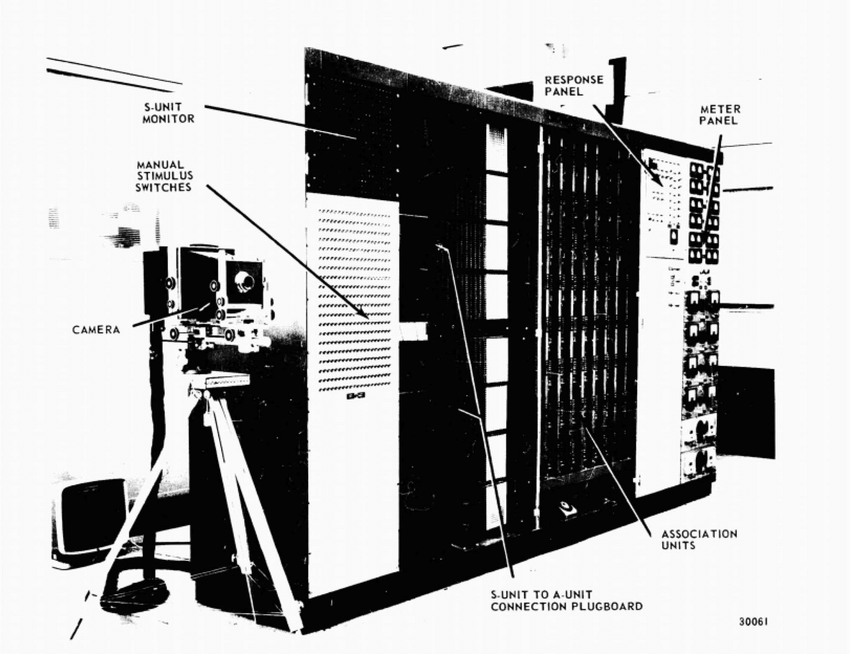

Artificial neural network.

In the 1950s, Frank Rosenblatt was the first to build a machine with a network of such artificial neurons, shown in Figure 1.15. The machine, called the Mark I Perceptron, consists of an input layer, an output layer, and a single hidden layer of 512 artificial neurons, as shown in Figure 1.15 (left), which is similar to what is illustrated in Figure 1.14 (left). It was designed to classify optical images of letters. However, a single-layer network has limited capacity: it can only learn linearly separable patterns. In their 1969 book Perceptrons: An Introduction to Computational Geometry [MP69], Marvin Minsky and Seymour Papert showed that the single-layer architecture of the Mark I Perceptron cannot learn the XOR function. This result significantly dampened interest in artificial neural networks, even though it was later shown that a multi-layer network can learn the XOR function [RHW86]. In fact, a sufficiently large multi-layer network of such simple neurons, as shown in Figure 1.14 (right), can simulate any finite-state machine, even the universal Turing machine. (Footnote 26: Do not confuse what neural networks are capable of doing in principle with whether it is tractable or easy to learn a neural network that realizes certain desired functions.) Nevertheless, the study of artificial neural networks subsequently entered its first winter in the 1970s.
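The XOR limitation and its multi-layer remedy can be made concrete. XOR is not linearly separable, so no single threshold unit computes it, but the hand-constructed two-layer network below does. Its weights are chosen by hand rather than learned, and the construction (OR and AND hidden units) is one illustrative choice among many.

```python
import numpy as np

def step(v):
    """Elementwise threshold: 1 where v is positive, else 0."""
    return (v > 0).astype(int)

def xor_network(x):
    """Two-layer threshold network: the output fires when OR is on and AND is off."""
    W1 = np.array([[1.0, 1.0],    # hidden unit 1 computes OR
                   [1.0, 1.0]])   # hidden unit 2 computes AND
    b1 = np.array([-0.5, -1.5])
    hidden = step(W1 @ x + b1)
    w2, b2 = np.array([1.0, -1.0]), -0.5
    return int(np.dot(w2, hidden) + b2 > 0)

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_outputs = [xor_network(np.array(x, dtype=float)) for x in inputs]
```

The hidden layer maps the four inputs to points that are linearly separable, which is exactly what a single layer acting on the raw inputs cannot achieve.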

Convolutional neural networks.

Early experiments with artificial neural networks such as the Mark I Perceptron in the 1950s and 1960s were somewhat disappointing. They suggested that simply connecting neurons in a general fashion, as in multi-layer perceptrons (MLPs), might not suffice. To build effective and efficient networks, it is extremely helpful to understand the collective purpose or function neurons in the network must achieve so that they can be organized and learned in a specialized way. Thus, at this juncture, once again the study of machine intelligence turned to the animal nervous system for inspiration.

It is known that most of our brain is dedicated to processing visual information [Pla99]. In the 1950s and 1960s, David Hubel and Torsten Wiesel systematically studied the visual cortices of cats. They discovered that the visual cortex contains different types of cells—simple cells and complex cells—which are sensitive to visual stimuli of different orientations and locations [HW59]. Hubel and Wiesel won the 1981 Nobel Prize in Physiology or Medicine for this groundbreaking discovery.

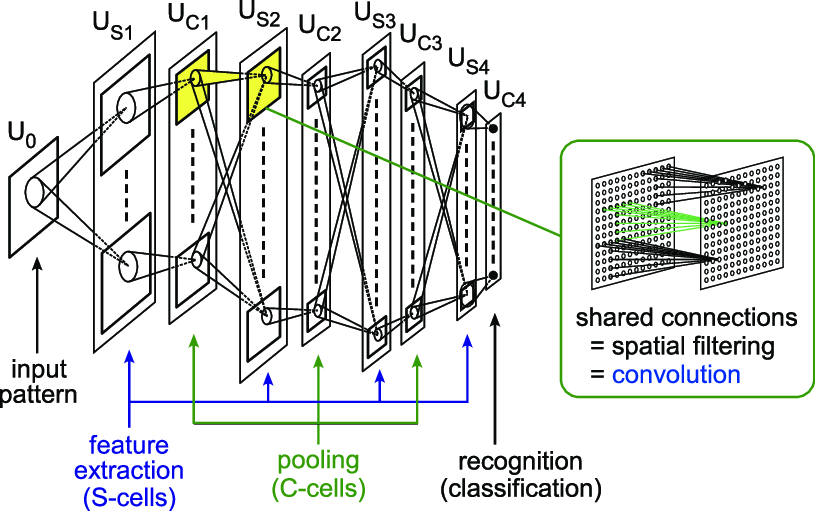

On the artificial neural network side, Hubel and Wiesel’s work inspired Kunihiko Fukushima to design the “neocognitron” network in 1980, which consists of artificial neurons that emulate biological neurons in the visual cortices [Fuk80]. This is known as the first convolutional neural network (CNN), and its architecture is illustrated in Figure 1.16. Unlike the perceptron, the neocognitron had more than one hidden layer and could be viewed as a deep network, as shown in Figure 1.14 (right).

Also inspired by how neurons work in the cat’s visual cortex, Fukushima was the first to introduce the rectified linear unit (ReLU):

$$\sigma(x) = \max\{0, x\} \tag{1.3.28}$$

as the activation function in 1969 [Fuk69]. However, it was not until recent years that ReLU became widely used in modern deep (convolutional) neural networks. This book will explain why ReLU is a good choice once we discuss the main operation that deep networks implement: compression.

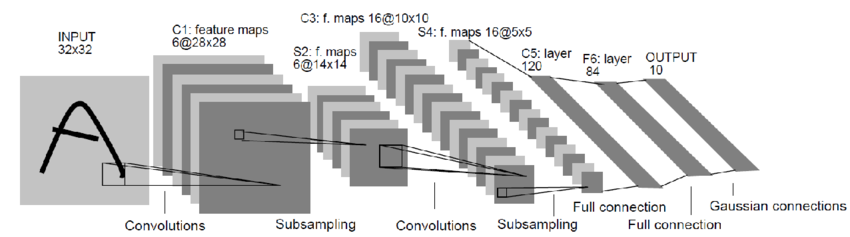

CNN-type networks continued to evolve in the 1980s, and many variants were introduced and studied. However, despite the remarkable capacities of deep networks and the improved architectures inspired by neuroscience, it remained extremely difficult to train such deep networks for real tasks such as image classification. Getting a network to work depended on many unexplained heuristics and tricks, which limited the appeal and applicability of neural networks. A major breakthrough came around 1989 when Yann LeCun successfully used backpropagation (BP) to train a deep convolutional neural network for recognizing handwritten digits [LBD+89], later known as LeNet (see Figure 1.17). After several years of persistent development, LeNet’s performance became good enough for practical use in the late 1990s [LBB+98]. It was used by the US Postal Service to recognize handwritten digits in zip codes. LeNet is considered the “prototype” for modern deep neural networks such as AlexNet [KSH12] and ResNet [HZR+16a], which we will discuss later. For this work, Yann LeCun received the 2018 Turing Award. (Footnote 27: Together with two other pioneers of deep networks, Yoshua Bengio and Geoffrey Hinton.)

Backpropagation.

Throughout history, the fate of deep neural networks has been tied to how easily and efficiently they can be trained. Backpropagation (BP) was introduced for this purpose. A multilayer perceptron can be expressed as a composition of linear mappings and nonlinear activations:

$$f(x) = W_L \, \sigma\big(W_{L-1} \cdots \sigma\big(W_2 \, \sigma(W_1 x)\big) \cdots\big). \tag{1.3.29}$$

To train the network weights via gradient descent, we must evaluate the gradient of the training loss with respect to every weight matrix $W_\ell$. The chain rule in calculus shows that gradients can be computed efficiently for such functions, a technique later termed backpropagation or BP; see Appendix A for details. BP was already known in optimal control and dynamic programming during the 1960s and 1970s, appearing in Paul Werbos’s 1974 PhD thesis [Wer74, Wer94]. In 1986, David Rumelhart et al. were the first to apply it to train a multilayer perceptron (MLP) [RHW86]. Since then, BP has become the dominant technique for training deep networks, as it is scalable and can be efficiently implemented on parallel and distributed computing platforms. However, nature likely does not use BP, (Footnote 28: As we have discussed earlier, nature almost ubiquitously learns to correct errors via closed-loop feedback.) as the mechanism is too expensive for physical implementation. (Footnote 29: End-to-end BP is computationally intractable for neural structures as complex as the brain, especially if the updates need to happen in real time. Instead, localized updates to small sections of the neural circuitry are much more biologically feasible. There is a relatively small amount of work on transplanting such “local learning” rules to training deep networks, circumventing BP [BS16, MSS+22, LTP25]. We believe this is an exciting opportunity to improve the scalability of training deep networks.) This leaves ample room for future improvement.
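To show the chain rule at work, the following sketch computes the gradients of a squared loss for a two-layer ReLU network by hand and checks one entry against a finite-difference estimate. The network sizes, the loss, and the function name `forward_backward` are assumptions for illustration.

```python
import numpy as np

def forward_backward(W1, W2, x, y):
    """Squared loss and its gradients for f(x) = W2 relu(W1 x), via the chain rule."""
    a = W1 @ x                  # pre-activation of the hidden layer
    h = np.maximum(a, 0.0)      # ReLU activation
    out = W2 @ h
    err = out - y
    loss = 0.5 * np.sum(err ** 2)
    # Backward pass: propagate the loss derivative from the output toward the input.
    grad_W2 = np.outer(err, h)
    grad_h = W2.T @ err
    grad_a = grad_h * (a > 0)   # derivative of ReLU is 1 where a > 0, else 0
    grad_W1 = np.outer(grad_a, x)
    return loss, grad_W1, grad_W2

rng = np.random.default_rng(0)
W1 = rng.standard_normal((4, 3))
W2 = rng.standard_normal((2, 4))
x = rng.standard_normal(3)
y = rng.standard_normal(2)
loss, grad_W1, grad_W2 = forward_backward(W1, W2, x, y)

# Central finite-difference estimate for one entry of W1.
eps = 1e-6
W1_plus = W1.copy()
W1_plus[1, 2] += eps
W1_minus = W1.copy()
W1_minus[1, 2] -= eps
numeric = (forward_backward(W1_plus, W2, x, y)[0]
           - forward_backward(W1_minus, W2, x, y)[0]) / (2 * eps)
```

The backward pass reuses the quantities computed in the forward pass, so all gradients cost roughly as much as one extra forward evaluation, whereas finite differences would need two evaluations per weight.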

Despite the aforementioned algorithmic advances, training deep networks remained finicky and computationally expensive in the 1980s and 1990s. By the late 1990s, support vector machines (SVMs) [CV95] had gained popularity as a superior alternative for classification tasks. (Footnote 30: Similar ideas for classification problems trace back to Thomas Cover’s 1964 PhD dissertation, which was condensed and published in a paper in 1964 [Cov64].) SVMs offered two advantages: a rigorous statistical learning framework known as the Vapnik–Chervonenkis (VC) theory and efficient convex optimization algorithms [BV04]. The rise of SVMs ushered in a second winter for neural networks in the early 2000s.

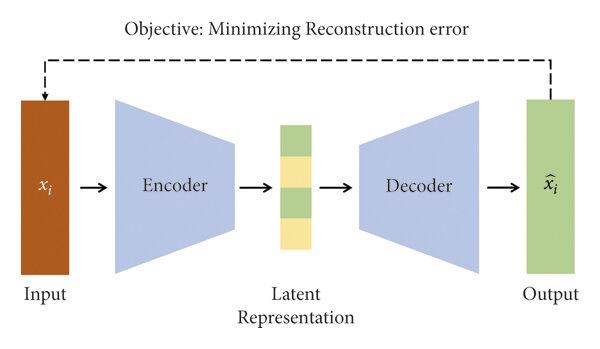

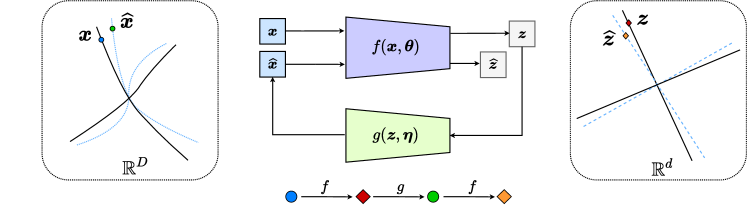

Compressive auto-encoding.

In the late 1980s and 1990s, artificial neural networks were already being used to learn low-dimensional representations of high-dimensional data such as images. It had been shown that neural networks could learn PCA directly from data [Oja82, BH89], rather than using the classic methods discussed in Section 1.3.1. It was also argued during this period that, due to their ability to model nonlinear transformations, neural networks could learn low-dimensional representations for data with nonlinear distributions. Similar to the linear PCA case, one can simultaneously learn a nonlinear dimension-reduction encoder $f$ and a decoder $g$, each modeled by a deep neural network [RHW86, Kra91]:

$$x \xrightarrow{\; f \;} z \xrightarrow{\; g \;} \hat{x}. \tag{1.3.30}$$

By enforcing consistency between the decoded data $\hat{x}$ and the original $x$, for example by minimizing an MSE-type reconstruction error (Footnote 31: MSE-type errors are known to be problematic for imagery data with complex nonlinear structures. As we will soon discuss, much recent work in generative methods, including GANs, has focused on finding better surrogate distance functions between the original data $x$ and the regenerated $\hat{x}$.):

$$\min_{f, g} \; \mathbb{E}\big[\|x - g(f(x))\|_2^2\big], \tag{1.3.31}$$

an autoencoder can be learned directly from the data $x$.

But how can we guarantee that such an auto-encoding indeed captures the true low-dimensional structures in $x$ rather than yielding a trivial redundant representation? For instance, one could simply choose the encoder $f$ and the decoder $g$ to be identity maps and set the code $z = x$. To ensure the auto-encoding is worthwhile, the resulting representation should be compressive according to some computable measure of complexity. In 1993, Geoffrey Hinton and colleagues proposed using coding length as such a measure, transforming the auto-encoding objective into finding a representation that minimizes coding length [HZ93]. This work established a fundamental connection between the principle of minimum description length [Ris78] and free (Helmholtz) energy minimization. Later work from Hinton’s group [HS06] empirically demonstrated that such auto-encoding can learn meaningful low-dimensional representations for real-world images. Pierre Baldi provided a comprehensive survey of autoencoders in 2011 [Bal11], just before deep networks gained widespread popularity. We will discuss measures of complexity and auto-encoding further in Section 1.4, and present a systematic study of compressive auto-encoding in Chapter 5 from a more unified perspective.

![Figure 1.18 : Architecture of LeNet [ LBD+89 ] versus AlexNet [ KSH12 ] .](chapters/chapter1/figs/Comparison_image_neural_networks.svg.png)

Modern Deep Neural Networks

For nearly 30 years—from the 1980s to the 2010s—neural networks were not taken seriously by the mainstream machine learning community. Early deep networks such as LeNet showed promising performance on small-scale classification problems like digit recognition, yet their design was largely empirical, the available datasets were tiny, and back-propagation was computationally prohibitive for the hardware of the era. These factors led to waning interest and stagnant progress, with only a handful of researchers persisting.

Classification and recognition.

The tremendous potential of deep networks could be unleashed only once sufficient data and computational power became available. By the 2010s, large datasets such as ImageNet had emerged, and GPUs had become powerful enough to make back-propagation affordable even for networks far larger than LeNet. In 2012, the deep convolutional network AlexNet (named for Alex Krizhevsky, one of its authors [KSH12]) attracted widespread attention by surpassing existing classification methods on ImageNet by a significant margin. (Footnote 32: Deep networks had already achieved state-of-the-art results on speech-recognition tasks, but these successes received far less attention than the breakthrough on image classification.) Figure 1.18 compares AlexNet with LeNet. AlexNet retains many characteristics of LeNet: it is simply larger and replaces LeNet’s sigmoid activations with ReLUs. This work contributed to Geoffrey Hinton’s 2018 Turing Award.

This early success inspired the machine intelligence community to explore new neural network architectures, variations, and improvements. Empirically, it was discovered that larger and deeper networks yield better performance on tasks such as image classification. Notable architectures that emerged include VGG [SZ15], GoogLeNet [SLJ+14], ResNet [HZR+16], and, more recently, Transformers [VSP+17]. Despite rapid, empirically driven performance improvements, theoretical explanations for these architectures, and for the relationships among them, if any, remained scarce. One goal of this book is to uncover the common objective these networks optimize and to explain their shared characteristics, such as multiple layers of linear operators interleaved with nonlinear activations (see Chapter 4).

Reinforcement learning.

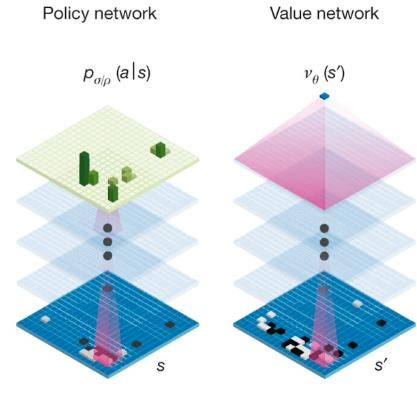

Early deep network successes were mainly in supervised classification tasks such as speech and image recognition. Later, deep networks were adopted to learn decision-making or control policies for game playing. In this context, deep networks model the optimal decision/control policy (i.e., a probability distribution over actions to take in order to maximize the expected reward) or the associated optimal value function (an estimate of the expected reward from the given state), as shown in Figure 1.19. Network parameters are incrementally optimized (typically via back-propagation, BP) based on rewards returned from the success or failure of playing the game with the current policy. This learning paradigm is called reinforcement learning [SB18]; it originated in control-systems practice of the late 1960s [WF65, MM70] and traces back through a long and rich history to Richard Bellman’s dynamic programming [Bel57] and Marvin Minsky’s trial-and-error learning [Min54] in the 1950s.

From an implementation standpoint, the marriage of deep networks and reinforcement learning proved powerful: deep networks can approximate control policies and value functions for real-world environments that are difficult to model analytically. This culminated in DeepMind’s AlphaGo system, which stunned the world in 2016 by defeating top Go player Lee Sedol and, in 2017, world champion Jie Ke. (In 1997, IBM’s Deep Blue made history by defeating Russian grandmaster Garry Kasparov in chess using traditional machine learning techniques such as tree search and pruning, methods that have proven less scalable and unsuccessful for more complex games like Go.)

AlphaGo’s success surprised the computing community, which had long regarded the game’s state space as too vast for any efficient solution in terms of computation and sample size. The only plausible explanation is that the optimal value and policy functions of Go possess significant favorable structure: qualitatively speaking, their intrinsic dimensions are low enough that they can be well approximated by neural networks learnable from a manageable number of samples.

Generation and prediction.

From a certain perspective, the early practice of deep networks in the 2010s was focused on extracting relevant information from the data $\boldsymbol{X}$ and encoding it into a task-specific representation $\boldsymbol{Z}$ (say, $\boldsymbol{Z}$ denotes class labels in classification tasks):

$$f:\ \boldsymbol{X} \longrightarrow \boldsymbol{Z}. \tag{1.3.32}$$

Here the learned mapping $f$ need not preserve most distributional information about $\boldsymbol{X}$; it suffices to retain only the sufficient statistics for the task. For example, a sample $\boldsymbol{x}$ might be an image of an apple, mapped by $f$ to the class label $\boldsymbol{z} = \text{“apple”}$. (This is a highly atypical notation for labels, the usual notation being $\boldsymbol{y}$, but it is useful for our purposes to consider labels as highly compressed and sparse representations of the data. See Example 1.2 for more details.)

In many modern settings, such as the so-called large general-purpose (“foundation”) models, we may also need to decode $\boldsymbol{Z}$ to recover the corresponding $\boldsymbol{X}$ to a prescribed precision:

$$g:\ \boldsymbol{Z} \longrightarrow \hat{\boldsymbol{X}}. \tag{1.3.33}$$

Because $\boldsymbol{X}$ typically represents data observed from the external world, a good decoder allows us to simulate or predict what happens in the world. In a “text-to-image” or “text-to-video” task, for instance, $\boldsymbol{z}$ is the text describing the desired image $\boldsymbol{x}$, and the decoder should generate an $\hat{\boldsymbol{x}}$ whose content matches $\boldsymbol{z}$. Given an object class $\boldsymbol{z} = \text{“apple”}$, the decoder $g$ should produce an image $\hat{\boldsymbol{x}}$ that looks like an apple, though not necessarily identical to the original $\boldsymbol{x}$.

Generation via discriminative approaches.

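For the generated images $\hat{\boldsymbol{X}}$ to resemble true natural images $\boldsymbol{X}$, we must be able to evaluate and minimize some distance:

$$\min\ \operatorname{dist}(\boldsymbol{X}, \hat{\boldsymbol{X}}). \tag{1.3.34}$$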

As it turns out, most theoretically motivated distances are extremely difficult, if not impossible, to compute and optimize for distributions in high-dimensional space with low intrinsic dimension. (This remains true even when a parametric family of distributions for $\boldsymbol{X}$ is specified. The distance often becomes ill-conditioned or ill-defined for distributions with low-dimensional supports. Worse still, the chosen family might poorly approximate the true distribution of interest.)

In 2007, Zhuowen Tu, disappointed by early analytical attempts to model and generate natural images, decided to try a drastically different approach. In a paper published at CVPR 2007 [Tu07], he first suggested learning a generative model for images via a discriminative approach. The idea is simple: if evaluating the distance $\operatorname{dist}(\boldsymbol{X}, \hat{\boldsymbol{X}})$ proves difficult, one can instead learn a discriminator $d$ to separate $\hat{\boldsymbol{X}}$ from $\boldsymbol{X}$:

$$d:\ \boldsymbol{X} \text{ or } \hat{\boldsymbol{X}} \longmapsto \{0, 1\}, \tag{1.3.35}$$

where labels $0$ and $1$ indicate whether an image is generated or real. Intuitively, the harder it becomes to separate $\boldsymbol{X}$ and $\hat{\boldsymbol{X}}$, the closer they likely are.

Tu’s work [Tu07] first demonstrated the feasibility of learning a generative model from a discriminative approach. However, the work employed traditional methods for image generation and distribution classification (such as boosting), which proved slow and difficult to implement. After 2012, deep neural networks gained popularity for image classification. In 2014, Ian Goodfellow and colleagues again proposed generating natural images with a discriminative approach [GPM+14]. They suggested modeling both the generator $g$ and discriminator $d$ with deep neural networks. Moreover, they proposed learning $g$ and $d$ via a minimax game:

$$\min_{g} \max_{d}\ \ell(\boldsymbol{X}, \hat{\boldsymbol{X}}), \tag{1.3.36}$$

where $\ell(\cdot)$ is some natural loss function associated with the classification task. In this formulation, the discriminator $d$ maximizes its success in separating $\boldsymbol{X}$ and $\hat{\boldsymbol{X}}$, while the generator $g$ does the opposite. This approach is known as generative adversarial networks (GANs). It was shown that once trained on a large dataset, GANs can indeed generate photo-realistic images. Partially due to this work’s influence, Yoshua Bengio received the 2018 Turing Award.

The discriminative approach appears to cleverly bypass a fundamental difficulty in distribution learning. However, rigorously speaking, this approach does not fully resolve the fundamental difficulty. It was shown in [GPM+14] that with a properly chosen loss, the minimax formulation becomes mathematically equivalent to minimizing the Jensen-Shannon divergence (see [CT91]) between the distributions of $\boldsymbol{X}$ and $\hat{\boldsymbol{X}}$. This remains a hard problem for two low-dimensional distributions in high-dimensional space. Consequently, GANs typically rely on many heuristics and engineering tricks and often suffer from instability issues such as mode collapse. (Nevertheless, such a minimax formulation provides a practical approximation of the distance. It simplifies implementation and avoids certain caveats in directly computing the distance.)
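The equivalence with the Jensen-Shannon divergence can be checked numerically in a toy setting. The sketch below assumes two small discrete distributions (the arrays `p_real` and `p_gen` are hypothetical, chosen only for illustration) and uses the log-likelihood objective of [GPM+14], for which the best discriminator is $d^\star(x) = p_{\text{real}}(x) / (p_{\text{real}}(x) + p_{\text{gen}}(x))$. It illustrates the identity only; it is not a GAN training procedure.

```python
import math

def kl(p, q):
    """KL divergence KL(p || q) in nats for discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL to the mixture m = (p + q) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def gan_value(p, q, d):
    """Discriminator objective E_p[log d(x)] + E_q[log(1 - d(x))]."""
    return sum(pi * math.log(di) + qi * math.log(1 - di)
               for pi, qi, di in zip(p, q, d))

# Hypothetical toy distributions over four outcomes (strictly positive
# so that every logarithm below is finite).
p_real = [0.5, 0.3, 0.15, 0.05]
p_gen = [0.1, 0.2, 0.3, 0.4]

# Optimal discriminator for a fixed generator.
d_star = [pr / (pr + pg) for pr, pg in zip(p_real, p_gen)]

value_at_optimum = gan_value(p_real, p_gen, d_star)
js = js_divergence(p_real, p_gen)

print("value at optimal discriminator:", value_at_optimum)
print("2 * JS - log 4                :", 2 * js - math.log(4))
```

The two printed numbers agree up to floating-point error, so minimizing the inner maximum over the generator amounts to minimizing the Jensen-Shannon divergence. Any other discriminator, such as the uninformative `d = 0.5` everywhere, attains a value no larger than the one at `d_star`.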

Generation via denoising and diffusion.

In 2015, shortly after GANs were introduced and gained popularity, Surya Ganguli and his students proposed that an iterative denoising process modeled by a deep network could be used to learn a general distribution, such as that of natural images [SWM+15]. Their method was inspired by properties of special Gaussian and binomial processes studied by William Feller in 1949 [Fel49]. (Again, in the magical era of the 1940s!)

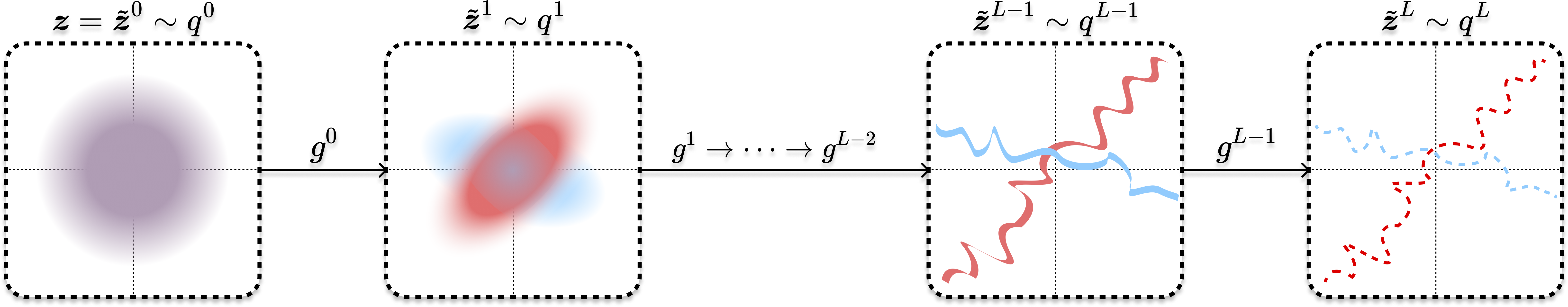

Soon, denoising operators based on the score function [Hyv05], briefly introduced in Section 1.3.1, were shown to be more general and unified the denoising and diffusion processes and algorithms [SE19, SSK+21, HJA20]. Figure 1.20 illustrates the process that transforms a generic Gaussian distribution to an (arbitrary) empirical distribution by performing a sequence of iterative denoising (or compressing) operations:

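$$\boldsymbol{z}^{0} \sim \mathcal{N}(\boldsymbol{0}, \boldsymbol{I}) \;\longrightarrow\; \boldsymbol{z}^{1} \;\longrightarrow\; \cdots \;\longrightarrow\; \boldsymbol{z}^{T} = \hat{\boldsymbol{x}}. \tag{1.3.37}$$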

By now, denoising (and diffusion) has replaced GANs and become the mainstream method for learning distributions of images and videos, leading to popular commercial image generation engines such as Midjourney and Stability.ai. In Chapter 3 we will systematically introduce and study the denoising and diffusion method for learning a general low-dimensional distribution.
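To make the iterative denoising idea concrete, here is a minimal one-dimensional sketch. It assumes the data distribution is itself a Gaussian $\mathcal{N}(\mu, s^2)$, so that the score of every noisy version is available in closed form; in practice the score would be approximated by a trained deep network. The update rule is an Euler discretization of the probability-flow ODE under a variance-exploding noise schedule, which is one option among those discussed in [SSK+21]. All names and constants (`MU`, `S`, `SIGMA_MAX`, `denoise`, and so on) are hypothetical.

```python
import random
import statistics

MU, S = 3.0, 0.5                    # hypothetical data distribution N(MU, S^2)
SIGMA_MAX, SIGMA_MIN = 10.0, 0.01   # largest and smallest noise levels
NUM_LEVELS = 200

def score(x, sigma):
    """Score (gradient of log-density) of N(MU, S^2 + sigma^2) at x."""
    return -(x - MU) / (S**2 + sigma**2)

def noise_schedule(num_levels):
    """Geometric sequence of noise levels from SIGMA_MAX down to SIGMA_MIN."""
    ratio = (SIGMA_MIN / SIGMA_MAX) ** (1.0 / (num_levels - 1))
    return [SIGMA_MAX * ratio**i for i in range(num_levels)]

def denoise(x, sigmas):
    """Move x along the score while the noise level is gradually lowered."""
    for s_hi, s_lo in zip(sigmas[:-1], sigmas[1:]):
        # Euler step of dx = -(1/2) * score * d(sigma^2), with sigma decreasing.
        x = x + 0.5 * (s_hi**2 - s_lo**2) * score(x, s_hi)
    return x

random.seed(0)
sigmas = noise_schedule(NUM_LEVELS)
# Start from a generic wide Gaussian that knows nothing about MU or S.
start = [random.gauss(0.0, SIGMA_MAX) for _ in range(2000)]
samples = [denoise(x, sigmas) for x in start]

print("start:    mean, std =", statistics.fmean(start), statistics.pstdev(start))
print("denoised: mean, std =", statistics.fmean(samples), statistics.pstdev(samples))
```

The denoised samples concentrate near `MU` with spread close to `S`, up to discretization error and the mismatch between the generic starting Gaussian and the true noisy distribution. Finer noise schedules reduce the discretization error.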

1.4 A Unifying Approach

So far, we have given a brief account of the main objective and history of machine intelligence, along with many important ideas and approaches associated with it. In recent years, following the empirical success of deep neural networks, tremendous efforts have been made to develop theoretical frameworks that help us understand empirically designed deep networks—whether specific seemingly necessary components (e.g., dropout [SHK+14], normalization [IS15, BKH16], attention [VSP+17]) or their overall behaviors (e.g., double descent [BHM+19], neural collapse [PHD20]).

Motivated in part by this trend, this book pursues several important and challenging goals:

- Develop a theoretical framework that yields rigorous mathematical interpretations of deep neural networks.
- Ensure correctness of the learned data distribution and consistency with the learned representation.
- Demonstrate that the framework leads to performant architectures and guides further practical improvements.

In recent years, mounting evidence suggests these goals can indeed be achieved by leveraging the theory and solutions of the classical analytical low-dimensional models discussed earlier (treated more thoroughly in Chapter 2) and by integrating fundamental ideas from related fields—namely information/coding theory, control/game theory, and optimization. This book aims to provide a systematic introduction to this new approach.

1.4.1 Learning Parsimonious Representations

One necessary condition for any learning task to be possible is that the sequences of interest must be computable, at least in the sense of Alan Turing [Tur36]. That is, a sequence can be computed via a program on a typical computer. (There are indeed well-defined sequences that are not computable.) In addition to being computable, we require computation to be tractable. (We do not need to consider predicting things whose computational complexity is intractable, say, grows exponentially in the length or dimension of the sequence.) That is, the computational cost (space and time) for learning and computing the sequence should not grow exponentially in length. Furthermore, as we see in nature (and in the modern practice of machine intelligence), for most practical tasks an intelligent system needs to learn what is predictable from massive data in a very high-dimensional space, such as from vision, sound, and touch. Hence, for intelligence we do not need to consider all computable and tractable sequences or structures; we should focus only on predictable sequences and structures that admit scalable realizations of their learning and computing algorithms:

$$\text{computable} \;\Longrightarrow\; \text{tractable} \;\Longrightarrow\; \text{scalable}. \tag{1.4.1}$$

This is because whatever algorithms intelligent beings use to learn useful information must be scalable. More specifically, the computational complexity of the algorithms should scale gracefully, typically linearly or even sublinearly, in the size and dimension of the data. On the technical level, this requires that the operations the algorithms rely on to learn can only utilize oracle information that can be efficiently computed from the data. In particular, when the dimension is high and the scale is large, the only oracle one can afford to compute is either first-order geometric information about the data (such as approximating a nonlinear structure locally with linear subspaces and computing the gradient of an objective function) or second-order statistical information (such as the covariance or correlation of the data or their features). The main goal of this book is to develop a theoretical and computational framework within which we can systematically develop efficient and effective solutions or algorithms with such scalable oracles and operations to learn low-dimensional structures from the sampled data and subsequently the predictive function.

Pursuing low-dimensionality via compression.

From the examples of sequences we gave in Section 1.2.1, it is clear that some sequences are easy to model and compute, while others are more difficult. The computational cost of a sequence depends on the complexity of the predicting function $f$. The higher the degree of regression $d$, the more costly it is to compute. The function $f$ could be a simple linear function, or it could be a nonlinear function that is arbitrarily difficult to specify and compute.

It is reasonable to believe that if a sequence is harder—by whatever measure we choose—to specify and compute, then it will also be more difficult to learn from its sampled segments. Nevertheless, for any given predictable sequence, there are in fact many, often infinitely many, ways to specify it. For example, for the simple sequence , we could also define the same sequence with . Hence it would be very useful to develop an objective and rigorous notion of “complexity” for any given computable sequence.

Andrey Kolmogorov, a Russian mathematician, was one of the first to define complexity for any computable sequence. (Many contributed to this notion of sequence complexity, most notably Ray Solomonoff and Greg Chaitin. All three developed algorithmic information theory independently: Solomonoff in 1960, Kolmogorov in 1965 [Kol98], and Chaitin around 1966 [Cha66].) He proposed that among all programs computing the same sequence, the length of the shortest program measures its complexity. This aligns with Occam’s Razor: choose the simplest theory explaining the same observation. Formally, let $p$ be a program generating sequence $S$ on universal computer $\mathcal{U}$, and let $L(p)$ denote the length of $p$. The Kolmogorov complexity of $S$ is:

$$K(S) \doteq \min_{p\,:\,\mathcal{U}(p) = S} L(p). \tag{1.4.2}$$

Thus, complexity measures how parsimoniously we can specify or compute the sequence. This definition is conceptually important and historically inspired profound studies in computational complexity and theoretical computer science.
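Kolmogorov complexity is not computable in general, but the idea that regular sequences admit short descriptions can be illustrated with an off-the-shelf compressor. The sketch below uses the compressed length under `zlib` as a rough, computable stand-in. It only upper-bounds the description length for this particular compressor and is not the quantity $K(S)$ itself. The two example sequences and the helper `compressed_length` are hypothetical.

```python
import random
import zlib

def compressed_length(data: bytes) -> int:
    """Length in bytes of the zlib-compressed data at maximum compression level."""
    return len(zlib.compress(data, 9))

n = 10_000

# A highly regular sequence: 0, 1, ..., 6 repeated. A very short program
# generates it, and a generic compressor also finds the pattern.
periodic = bytes(i % 7 for i in range(n))

# A pseudo-random byte sequence. A generic compressor finds no structure here,
# although the sequence is in fact generated by a short seeded program, which
# is one reason compressed length is only a crude proxy for K(S).
rng = random.Random(0)
noisy = bytes(rng.randrange(256) for _ in range(n))

print("periodic sequence:", compressed_length(periodic), "bytes")
print("pseudo-random sequence:", compressed_length(noisy), "bytes")
```

The periodic sequence compresses to a small fraction of its original length, while the pseudo-random one does not shrink at all.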